Abstract

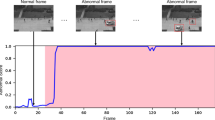

Network transmission is prone to errors and data loss. In movie transmission, packets carrying video frames may be lost, or even deliberately dropped, for reasons that include congestion handling and the pursuit of higher compression. The loss of video frames not only reduces video quality significantly, but can also cause a loss of synchronization between the audio and video streams. If not corrected, this cumulative loss can degrade the motion picture's quality beyond viewers' tolerance. In this paper, we study and classify the effects of audio-video de-synchronization. We then develop several client-based techniques that estimate lost frames from the frames already received, without the need for retransmissions or error control information, and we examine their performance and suitability. The estimated frames are injected at their appropriate locations in the movie stream to restore the loss. The objective is to enhance video quality by finding a close estimate of the original frames at a suitable computation cost, and to help restore synchronization to within the tolerance level of viewers.
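As a rough illustration of client-side frame estimation and injection, the following minimal sketch fills each run of lost frames by linearly blending the nearest received frames on either side. Linear blending is an assumption chosen here for simplicity and is not necessarily one of the techniques evaluated in the paper; the names `Frame`, `interpolate`, and `restore_stream`, and the representation of a frame as a flat list of pixel intensities, are hypothetical.

```python
from typing import List, Optional

# Hypothetical representation: a frame flattened to a list of pixel intensities.
Frame = List[float]


def interpolate(prev: Frame, nxt: Frame, t: float) -> Frame:
    """Linearly blend two frames at fractional position t between them."""
    return [(1.0 - t) * a + t * b for a, b in zip(prev, nxt)]


def restore_stream(frames: List[Optional[Frame]]) -> List[Frame]:
    """Replace lost frames (None) with estimates built from received frames."""
    restored = list(frames)
    n = len(restored)
    i = 0
    while i < n:
        if restored[i] is not None:
            i += 1
            continue
        # Locate the run of consecutive lost frames [i, j).
        j = i
        while j < n and restored[j] is None:
            j += 1
        prev = restored[i - 1] if i > 0 else None
        nxt = restored[j] if j < n else None
        if prev is None and nxt is None:
            raise ValueError("no received frame to estimate from")
        gap = j - i + 1
        for k in range(i, j):
            if prev is not None and nxt is not None:
                # Both neighbours exist: blend according to position in the gap.
                restored[k] = interpolate(prev, nxt, (k - i + 1) / gap)
            else:
                # Loss at the start or end of the stream: repeat the one neighbour.
                restored[k] = list(prev if prev is not None else nxt)
        i = j
    return restored
```

For example, `restore_stream([[0.0], None, [4.0]])` fills the missing middle frame with `[2.0]`, so the stream keeps its original frame count and therefore its timing relative to the audio track. No retransmission is involved: the estimate depends only on frames the client already holds.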

Cite this article

Aly, S.G., Youssef, A. Synchronization-Sensitive Frame Estimation: Video Quality Enhancement. Multimedia Tools and Applications 17, 233–255 (2002). https://doi.org/10.1023/A:1015781104433

DOI: https://doi.org/10.1023/A:1015781104433