Abstract

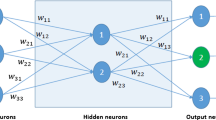

This article considers the cost-dependent construction of linear and piecewise linear classifiers. Classical learning algorithms from the fields of artificial neural networks and machine learning either ignore costs altogether or allow only costs that depend on the classes of the training examples. In contrast to such class-dependent costs, we consider example-dependent costs, i.e., costs that depend on both the features and the class of an example. We present a cost-sensitive extension of a modified version of the well-known perceptron algorithm that can also be applied when the classes are not linearly separable. We also present an extended version of the hybrid learning algorithm DIPOL that can be applied in the case of linear non-separability, multi-modal class distributions, and multi-class learning problems. We show that the consideration of example-dependent costs is a true extension of class-dependent costs. The approach is general and can be extended to other neural network architectures such as multi-layer perceptrons and radial basis function networks.
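
To make the idea of example-dependent costs concrete, the following is a minimal sketch and not the algorithm developed in the article: a plain perceptron whose update for a misclassified example is scaled by that example's cost. The function names, the zero initialisation, the learning rate, and the toy data are all assumptions chosen for illustration, and the sketch does not include the modifications needed for classes that are not linearly separable.

```python
def train_cost_perceptron(X, y, costs, epochs=100, lr=1.0):
    """Plain perceptron with cost-scaled updates (illustrative sketch only).

    X     : list of feature tuples
    y     : list of labels in {-1, +1}
    costs : list of positive misclassification costs, one per example
    """
    w = [0.0] * (len(X[0]) + 1)  # weights; the last entry is the bias
    for _ in range(epochs):
        updated = False
        for xi, yi, ci in zip(X, y, costs):
            xb = list(xi) + [1.0]  # append a constant input for the bias
            score = sum(wj * xj for wj, xj in zip(w, xb))
            if yi * score <= 0:  # example is misclassified or on the boundary
                # Assumption: the step size is proportional to the example's cost.
                w = [wj + lr * ci * yi * xj for wj, xj in zip(w, xb)]
                updated = True
        if not updated:  # a full pass without updates: all examples are correct
            break
    return w


def predict(w, x):
    """Return +1 or -1 for a single feature tuple x."""
    xb = list(x) + [1.0]
    return 1 if sum(wj * xj for wj, xj in zip(w, xb)) > 0 else -1


# Toy data (hypothetical): logical AND, which is linearly separable.
X = [(0, 0), (0, 1), (1, 0), (1, 1)]
y = [-1, -1, -1, 1]

# Example-dependent costs: every example carries its own cost.
example_costs = [1.0, 1.0, 1.0, 5.0]

# Class-dependent costs are the special case in which the cost is a
# function of the label alone.
class_cost = {1: 5.0, -1: 1.0}
class_costs = [class_cost[label] for label in y]

w = train_cost_perceptron(X, y, example_costs)
print([predict(w, x) for x in X])
```

In this sketch a class-dependent cost vector is just an example-dependent cost vector that happens to be constant within each class, which is one informal way to read the statement that example-dependent costs extend class-dependent ones.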

Cite this article

Geibel, P., Wysotzki, F. Learning Perceptrons and Piecewise Linear Classifiers Sensitive to Example Dependent Costs. Applied Intelligence 21, 45–56 (2004). https://doi.org/10.1023/B:APIN.0000027766.72235.bc

DOI: https://doi.org/10.1023/B:APIN.0000027766.72235.bc