Abstract

Machine perception uses advanced sensors to collect information about the surrounding scene for situational awareness1,2,3,4,5,6,7. State-of-the-art machine perception8 using active sonar, radar and LiDAR to enhance camera vision9 faces difficulties when the number of intelligent agents scales up10,11. Exploiting the omnipresent heat signal could be a new frontier for scalable perception. However, objects and their environment constantly emit and scatter thermal radiation, leading to textureless images famously known as the ‘ghosting effect’12. Thermal vision thus lacks specificity owing to information loss, whereas thermal ranging, which is crucial for navigation, has been elusive even when combined with artificial intelligence (AI)13. Here we propose and experimentally demonstrate heat-assisted detection and ranging (HADAR), which overcomes this open challenge of ghosting, and benchmark it against AI-enhanced thermal sensing. HADAR not only sees texture and depth through the darkness as if it were day but also perceives decluttered physical attributes beyond RGB or thermal vision, paving the way to fully passive and physics-aware machine perception. We develop HADAR estimation theory and address its photonic shot-noise limits, which set information-theoretic bounds on HADAR-based AI performance. HADAR ranging at night beats thermal ranging and shows an accuracy comparable with RGB stereovision in daylight. Our automated HADAR thermography reaches the Cramér–Rao bound on temperature accuracy, beating existing thermography techniques. Our work leads to a disruptive technology that can accelerate the Fourth Industrial Revolution (Industry 4.0)14 with HADAR-based autonomous navigation and human–robot social interactions.

Data availability

The data supporting the findings of this study are available in the paper. The HADAR database is available at https://github.com/FanglinBao/HADAR.

Code availability

The custom-designed codes are provided along with the HADAR database at https://github.com/FanglinBao/HADAR.

References

1. Rogers, C. et al. A universal 3D imaging sensor on a silicon photonics platform. Nature 590, 256–261 (2021).

2. Floreano, D. & Wood, R. J. Science, technology and the future of small autonomous drones. Nature 521, 460–466 (2015).

3. Jiang, Y., Karpf, S. & Jalali, B. Time-stretch LiDAR as a spectrally scanned time-of-flight ranging camera. Nat. Photonics 14, 14–18 (2020).

4. Maccone, L. & Ren, C. Quantum radar. Phys. Rev. Lett. 124, 200503 (2020).

5. Tachella, J. et al. Real-time 3D reconstruction from single-photon lidar data using plug-and-play point cloud denoisers. Nat. Commun. 10, 4984 (2019).

6. Lien, J. et al. Soli: ubiquitous gesture sensing with millimeter wave radar. ACM Trans. Graph. 35, 142 (2016).

7. Kirmani, A. et al. First-photon imaging. Science 343, 58–61 (2014).

8. Geiger, A., Lenz, P. & Urtasun, R. in Proc. 2012 IEEE Conference on Computer Vision and Pattern Recognition 3354–3361 (IEEE, 2012).

9. Nassi, B. et al. in Proc. 2020 ACM SIGSAC Conference on Computer and Communications Security 293–308 (Association for Computing Machinery, 2020).

10. Popko, G. B., Gaylord, T. K. & Valenta, C. R. Interference measurements between single-beam, mechanical scanning, time-of-flight lidars. Opt. Eng. 59, 053106 (2020).

11. Hecht, J. Lidar for self-driving cars. Opt. Photon. News 29, 26–33 (2018).

12. Gurton, K. P., Yuffa, A. J. & Videen, G. W. Enhanced facial recognition for thermal imagery using polarimetric imaging. Opt. Lett. 39, 3857–3859 (2014).

13. Treible, W. et al. in Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2961–2969 (IEEE, 2017).

14. Schwab, K. The fourth industrial revolution: what it means, how to respond. Foreign Affairs https://www.foreignaffairs.com/world/fourth-industrial-revolution (2015).

15. Risteska Stojkoska, B. L. & Trivodaliev, K. V. A review of Internet of Things for smart home: challenges and solutions. J. Cleaner Prod. 140, 1454–1464 (2017).

16. By 2030, one in 10 vehicles will be self-driving globally. Statista https://mailchi.mp/statista/autonomous_cars_20200206?e=145345a469 (2020).

17. How robots change the world. What automation really means for jobs and productivity. Oxford Economics https://resources.oxfordeconomics.com/how-robots-change-the-world (2020).

18. Garcia-Garcia, A. et al. A survey on deep learning techniques for image and video semantic segmentation. Appl. Soft Comput. 70, 41–65 (2018).

19. LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

20. Jordan, M. I. & Mitchell, T. M. Machine learning: trends, perspectives, and prospects. Science 349, 255–260 (2015).

21. Gade, R. & Moeslund, T. B. Thermal cameras and applications: a survey. Mach. Vis. Appl. 25, 245–262 (2014).

22. Tang, K. et al. Millikelvin-resolved ambient thermography. Sci. Adv. 6, eabd8688 (2020).

23. Henini, M. & Razeghi, M. Handbook of Infrared Detection Technologies (Elsevier, 2002).

24. Haque, A., Milstein, A. & Li, F.-F. Illuminating the dark spaces of healthcare with ambient intelligence. Nature 585, 193–202 (2020).

25. Beier, K. & Gemperlein, H. Simulation of infrared detection range at fog conditions for enhanced vision systems in civil aviation. Aerosp. Sci. Technol. 8, 63–71 (2004).

26. Newman, E. & Hartline, P. Integration of visual and infrared information in bimodal neurons in the rattlesnake optic tectum. Science 213, 789–791 (1981).

27. Gillespie, A. et al. A temperature and emissivity separation algorithm for Advanced Spaceborne Thermal Emission and Reflection Radiometer (ASTER) images. IEEE Trans. Geosci. Remote Sens. 36, 1113–1126 (1998).

28. Baldridge, A., Hook, S., Grove, C. & Rivera, G. The ASTER spectral library version 2.0. Remote Sens. Environ. 113, 711–715 (2009).

29. Szeliski, R. Computer Vision: Algorithms and Applications (Springer, 2011).

30. Khaleghi, B., Khamis, A., Karray, F. O. & Razavi, S. N. Multisensor data fusion: a review of the state-of-the-art. Inf. Fusion 14, 28–44 (2013).

31. Lopes, C. E. R. & Ruiz, L. B. in Proc. 2008 1st IFIP Wireless Days 1–5 (IEEE, 2008).

32. Wright, W. F. & Mackowiak, P. A. Why temperature screening for coronavirus disease 2019 with noncontact infrared thermometers does not work. Open Forum Infect. Dis. 8, ofaa603 (2020).

33. Ghassemi, P., Pfefer, T. J., Casamento, J. P., Simpson, R. & Wang, Q. Best practices for standardized performance testing of infrared thermographs intended for fever screening. PLoS One 13, e0203302 (2018).

34. Magalhaes, C., Tavares, J. M. R., Mendes, J. & Vardasca, R. Comparison of machine learning strategies for infrared thermography of skin cancer. Biomed. Signal Process. Control 69, 102872 (2021).

35. Reddy, D. V., Nerem, R. R., Nam, S. W., Mirin, R. P. & Verma, V. B. Superconducting nanowire single-photon detectors with 98% system detection efficiency at 1550 nm. Optica 7, 1649–1653 (2020).

36. Masoumian, A. et al. GCNDepth: self-supervised monocular depth estimation based on graph convolutional network. Neurocomputing 517, 81–92 (2023).

37. Qu, Y. et al. Thermal camouflage based on the phase-changing material GST. Light Sci. Appl. 7, 26 (2018).

38. Li, M., Liu, D., Cheng, H., Peng, L. & Zu, M. Manipulating metals for adaptive thermal camouflage. Sci. Adv. 6, eaba3494 (2020).

39. Li, H., Xiong, P., An, J. & Wang, L. Pyramid Attention Network for semantic segmentation. Preprint at https://arxiv.org/abs/1805.10180 (2018).

40. Fu, J. et al. in Proc. IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 3146–3154 (IEEE, 2019).

Acknowledgements

This work was supported by the Invisible Headlights project from the Defense Advanced Research Projects Agency (DARPA). We thank the Army night-vision team (Infrared Camera Technology Branch, DEVCOM C5ISR Center, U.S. Army) for the help in collecting HADAR prototype-2 experimental data. We thank Z. Yang for her help in experiments.

Author information

Contributions

F.B. and Z.J. conceived the idea. F.B. led and Z.J. supervised the project. F.B. developed and L.Y. contributed to the HADAR estimation theory. F.B. generated the HADAR database and designed experiments. X.W. built HADAR prototype-1 and conducted experiments. F.B. analysed experimental data. S.H.S., G.S., F.B., V.A. and V.N.B. contributed to machine learning. F.B. prepared and all authors revised the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature thanks Manish Bhattarai, Moongu Jeon and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer reviewer reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data figures and tables

Extended Data Fig. 1 HADAR TeX vision algorithms.

a, Architecture of TeX-Net for inverse TeX decomposition. TeX-Net is physics-inspired in three respects. First, TeX decomposition of heat cubes relies on both spatial patterns and spectral thermal signatures, which motivates the adoption of spectral and pyramid (spatial) attention layers39 in the UNet model. Second, owing to TeX degeneracy, the mathematical structure \({X}_{\alpha \nu }={\sum }_{\beta \ne \alpha }{V}_{\alpha \beta }{S}_{\beta \nu }\) has to be specified to ensure the uniqueness of the inverse mapping; hence, it is essential to learn the thermal lighting factors V instead of the texture X. That is, TeX-Net cannot be trained end to end. Here α, β and γ are indices of objects and ν is the wavenumber. \({X}_{\alpha \nu }\) is constructed indirectly from V and \({S}_{\beta \nu }\), where \({S}_{\beta \nu }\) is \({S}_{\alpha \nu }\) downsampled to approximate the k most notable environmental objects. Third, the material library \({\mathscr{M}}\) and its dimension are key to the network. TeX-Net can be trained either with ground-truth T, m and V in supervised learning or, alternatively, with the material library \({\mathscr{M}}\), Planck’s law \({B}_{\nu }({T}_{\alpha })\) and the mathematical structure of \({X}_{\alpha \nu }\) in unsupervised learning. In supervised learning, the loss function is a combination of individual losses weighted by regularization hyperparameters. In unsupervised learning, the loss function is defined on the reconstructed heat cube and is based on physics models of the heat signal. In practice, a hybrid loss function is used, with T, e and V contributions (50%) and the physics-based loss (50%). In this work, we have also proposed a non-machine-learning approach, TeX-SGD, to generate TeX vision. TeX-SGD decomposes TeX pixel by pixel, based on the physics loss and a smoothness constraint; see sections SIII.A and SIII.B of the Supplementary Information for more details. Res-1/2/3/4 are ResNet50 stages with downsampling. The plus symbol denotes addition followed by upsampling.
b, Texture distillation reconstructs the part of the scattered signal that originates only from sky illumination. The texture-distillation process mimics the daylight signal as X to form TeX vision; it evaluates the HADAR constitutive equation in the forward direction, using the physical attributes solved by TeX-SGD or TeX-Net. It removes the unwanted effect of other environmental objects acting as light sources, which is unfamiliar in daily experience. The process can be described in four steps. Step 1 is the initialization, which keeps only the sky illumination on and turns all other radiation off. Step 2 is the iterative HADAR constitutive equation without direct emission; evaluating it several times gives the multiple-scattering effect. Note that the ground-truth texture partly remains in the physics-based loss, res, owing to cutoffs on the scattering order and/or the number of environmental objects. The final estimated texture in step 4 is a fusion of the distilled scattered signal \({\bar{X}}_{\alpha \nu }\) and the physics-based loss res. Arrows in b indicate thermal radiation emitted/scattered along the arrow direction. The TeX-Net code, pre-trained weights and a sample implementation of texture distillation are available at https://github.com/FanglinBao/HADAR.
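
The physics pieces described in panels a and b can be sketched in plain Python. This is a minimal, non-authoritative sketch, not the TeX-Net or repository code: the texture sum \({X}_{\alpha \nu }={\sum }_{\beta \ne \alpha }{V}_{\alpha \beta }{S}_{\beta \nu }\) and the 50/50 hybrid weighting come from the caption, whereas the constitutive form S = eB(T) + (1 − e)X, the mean-squared physics loss, the fixed iteration count and the helper names (`heat_signal`, `distill_texture`, etc.) are assumptions made for illustration. The step 4 fusion with res is omitted because its form is not specified here.

```python
import math

# Exact SI constants (2019 SI definitions)
H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
KB = 1.380649e-23    # Boltzmann constant, J/K


def planck_wavenumber(nu, temp):
    """Planck spectral radiance per unit wavenumber; nu in 1/m, temp in K."""
    return 2.0 * H * C**2 * nu**3 / math.expm1(H * C * nu / (KB * temp))


def texture(V, S, alpha):
    """X_{alpha,nu} = sum over beta != alpha of V[alpha][beta] * S[beta][nu]."""
    return [sum(V[alpha][b] * S[b][k] for b in range(len(S)) if b != alpha)
            for k in range(len(S[0]))]


def heat_signal(e, temp, X, nus):
    """ASSUMED constitutive form S = e*B(T) + (1 - e)*X, per wavenumber."""
    return [e[k] * planck_wavenumber(nu, temp) + (1.0 - e[k]) * X[k]
            for k, nu in enumerate(nus)]


def physics_loss(S_measured, e, temp, X, nus):
    """Assumed mean squared mismatch between measured and modelled spectra."""
    S_model = heat_signal(e, temp, X, nus)
    return sum((a - b) ** 2 for a, b in zip(S_measured, S_model)) / len(nus)


def hybrid_loss(supervised_loss, physics_loss_value, weight=0.5):
    """50/50 blend of the supervised (T, e, V) loss and the physics loss."""
    return weight * supervised_loss + (1.0 - weight) * physics_loss_value


def distill_texture(V, e, S_sky, sky, n_iter=3):
    """Steps 1-2 of texture distillation, returning the distilled X-bar."""
    n_obj, n_nu = len(V), len(S_sky)
    # Step 1: every source off except the sky.
    S = [[0.0] * n_nu for _ in range(n_obj)]
    S[sky] = list(S_sky)
    for _ in range(n_iter):
        # Step 2: constitutive equation without direct emission; each pass
        # adds one more order of scattering (old S is used on the right).
        S = [list(S_sky) if a == sky else
             [(1.0 - e[a][k]) * x for k, x in enumerate(texture(V, S, a))]
             for a in range(n_obj)]
    # Distilled scattered signal X-bar_{alpha,nu} for every object.
    return [texture(V, S, a) for a in range(n_obj)]
```

In this sketch, wavenumbers in the long-wave infrared (for example 8 × 10^4 to 1.2 × 10^5 m^-1) are passed as `nus`, and `sky` is the index of the object treated as the sky source. Keeping the sky spectrum fixed while re-evaluating the other objects is one simple way to realize "only sky illumination on".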

Extended Data Fig. 2 HADAR database and demonstrated TeX vision show that HADAR overcomes the ghosting effect in traditional thermal vision and sees through the darkness as if it were day.

TeX vision (colour hue H = material e; saturation S = temperature T; brightness V = texture X) provides intrinsic attributes and enhanced textures of the scene to enable comprehensive understanding. Our HADAR database consists of 11 dissimilar night scenes covering the most common road conditions in which HADAR may find applications. In particular, the indoor scene is designed for robot helpers in smart-home applications, whereas the others are for various self-driving applications. Scene-11 is a real-world off-road scene and is shown in Extended Data Fig. 3. The database is a long-wave infrared stereo-hyperspectral database with crowded (for example, crowded street) and complicated (for example, forest) scenes, several frames per scene and 30 different kinds of material. The database is available at https://github.com/FanglinBao/HADAR. See Supplementary Fig. 18 for the TeX-Net performance on the HADAR database.
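
The HSV colour convention stated above (hue for material, saturation for temperature, brightness for texture) can be sketched with the standard-library `colorsys` module. This is an illustrative sketch only: spacing material hues evenly around the colour wheel, the linear temperature window `t_range` and normalizing texture by its maximum are assumptions, not the authors' rendering choices.

```python
import colorsys


def tex_to_rgb(material_id, temp, texture, n_materials, t_range, x_max):
    """Map one TeX pixel to RGB through HSV: hue <- material,
    saturation <- temperature, value <- texture (assumed normalizations)."""
    hue = (material_id % n_materials) / n_materials
    t_min, t_max = t_range
    sat = min(max((temp - t_min) / (t_max - t_min), 0.0), 1.0)
    val = min(max(texture / x_max, 0.0), 1.0)
    return colorsys.hsv_to_rgb(hue, sat, val)


def tex_image(materials, temps, textures, n_materials, t_range):
    """Apply tex_to_rgb over 2D lists; texture is scaled by its maximum."""
    x_max = max(max(row) for row in textures) or 1.0
    return [[tex_to_rgb(m, t, x, n_materials, t_range, x_max)
             for m, t, x in zip(mrow, trow, xrow)]
            for mrow, trow, xrow in zip(materials, temps, textures)]
```

With this mapping, a pixel at the cold end of `t_range` renders as grey regardless of material, which is a consequence of the assumed linear saturation scale rather than a property of TeX vision itself.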

Extended Data Fig. 3 HADAR TeX vision demonstrated in real-world experiments (Scene-11 of the HADAR database) overcomes the ghosting effect in traditional thermal vision and sees through the darkness as if it were day.

Here TeX vision was generated by both TeX-Net and TeX-SGD for comparison. We used a semantic library instead of the exact material library for the TeX vision; see section SV.C of the Supplementary Information for more details. The semantic library consists of tree (brown), vegetation (green), soil (yellow), water (blue), metal (purple) and concrete (chartreuse). Water shows mirror images of trees and of part of the sky beyond the field of view. Most water pixels are correctly estimated as ‘water’, except for a small portion corresponding to the mirrored sky, which is estimated as ‘metal’ because metal also reflects the sky signal. TeX-Net uses both spatial and spectral information for TeX decomposition, and hence its TeX vision is spatially smoother. By contrast, TeX-SGD mainly uses spectral information and decomposes TeX pixel by pixel. Compared with TeX-Net, we observed that TeX-SGD is better at material identification and texture recovery for fine structures, such as the fence of the bridge, bark wrinkles and culverts. Note that the current TeX-Net was trained partially with TeX-SGD outputs, so these observations are not intended as a performance ranking of TeX-SGD and TeX-Net. Both TeX-Net and TeX-SGD confirm that HADAR TeX vision achieves a semantic understanding of the night scene, with enhanced textures comparable with RGB vision in daylight.
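
To illustrate what "decomposing TeX pixel by pixel from spectral information" means, the sketch below fits one pixel spectrum by brute-force search over a material library, a temperature grid and a lighting factor. It is explicitly a swapped-in stand-in, not TeX-SGD: there is no stochastic gradient descent and no smoothness constraint, and it assumes a single environmental source at a known temperature `t_env`, the constitutive form S = eB(T) + (1 − e)VB(T_env) and hypothetical emissivity profiles.

```python
import math
from itertools import product

H, C, KB = 6.62607015e-34, 2.99792458e8, 1.380649e-23  # SI constants


def planck(nu, temp):
    """Planck spectral radiance per unit wavenumber (nu in 1/m, temp in K)."""
    return 2.0 * H * C**2 * nu**3 / math.expm1(H * C * nu / (KB * temp))


def model(e, temp, v, t_env, nus):
    """Assumed pixel model: emission plus one scattered environment source."""
    return [ek * planck(nu, temp) + (1.0 - ek) * v * planck(nu, t_env)
            for ek, nu in zip(e, nus)]


def decompose_pixel(s, library, nus, t_env, temps, vs):
    """Return (material, T, V) minimizing the squared spectral mismatch."""
    best = None
    for (name, e), temp, v in product(library.items(), temps, vs):
        pred = model(e, temp, v, t_env, nus)
        loss = sum((a - b) ** 2 for a, b in zip(s, pred))
        if best is None or loss < best[0]:
            best = (loss, name, temp, v)
    return best[1:]
```

Because only spectral shape separates the candidates, two materials whose emissivity profiles can be traded off against T and V would tie here; that is the TeX degeneracy the captions refer to, and one reason spatial context (as in TeX-Net) or a smoothness prior helps.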

Extended Data Fig. 4 HADAR TeX vision recovers textures and overcomes the ghosting effect.

Here TeX vision is generated by TeX-SGD. From top to bottom are the TeX/thermal/TeX/thermal vision of an off-road night scene at two different positions. HADAR recovers fine textures such as water ripples, bark wrinkles and culverts, as well as fine details of the grass lawn. The HADAR prototype-2 sensor is a focal plane array focused at infinity. Close objects exhibit focus blur, whereas distant objects are beyond the spatial resolution needed to show fine details. Therefore, fine textures are mostly observed within a certain distance range.

Extended Data Fig. 5 HADAR TeX vision overcomes the ghosting effect in traditional thermal vision and beats the state-of-the-art approach to enhance visual contrast.

This scene consists of several humans (dark red in TeX vision), robots (purple), cars and buildings on a summer night. Geometric textures of the road and pavements are vivid in TeX vision but invisible in raw thermal vision and poor in enhanced thermal vision. The mean texture density (standard deviation; see section SII.D of the Supplementary Information for more details) in TeX vision is 0.0788, about 4.6 times the texture density of 0.0170 in the state-of-the-art enhanced thermal vision. This scene is the Street-Long-Animation in the HADAR database.
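
A texture density defined through a standard deviation can be sketched as the mean of local standard deviations over sliding windows of a normalized brightness image. The exact definition is in section SII.D of the Supplementary Information; the window size `win`, the population standard deviation and the [0, 1] brightness scale used here are assumptions. The reported ratio 0.0788/0.0170 ≈ 4.6 is also checked.

```python
import statistics


def texture_density(img, win=3):
    """Mean of local (population) standard deviations over win x win windows.
    img is a 2D list of brightness values, assumed normalized to [0, 1]."""
    h, w = len(img), len(img[0])
    stds = []
    for i in range(h - win + 1):
        for j in range(w - win + 1):
            patch = [img[i + di][j + dj] for di in range(win) for dj in range(win)]
            stds.append(statistics.pstdev(patch))
    return statistics.fmean(stds)


# Ratio of the texture densities reported in the caption
ratio = 0.0788 / 0.0170
```

A perfectly flat image scores 0, which is the signature of the ghosting effect this metric is meant to expose.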

Extended Data Fig. 6 HADAR estimation theory for multi-material library.

a, Sample incident spectra of five materials generated by Monte Carlo simulations, with T = 60 °C, T0 = 20 °C and V0 = 0.5. b, Minimum statistical distance of each material. Spectra of silica and paint have non-trivial features that are distinct from the other materials in the library. A statistical distance larger than 1 (dashed line) consistently indicates that silica and paint are identifiable. Note that aluminium is similar to human skin under TeX degeneracy and is therefore non-identifiable, as discussed in Fig. 3, even though at the same temperature its spectrum is much weaker than that of human skin. The emissivity of human skin was approximated as a constant 0.95; other emissivity profiles were drawn from the NASA JPL ECOSTRESS spectral library. This figure shows intuitively that the semantic/statistical distance is an effective figure of merit for describing HADAR identifiability. For more details on generalizing HADAR estimation theory to several materials, see section SII.B of the Supplementary Information.
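
The "minimum statistical distance of each material" with a threshold of 1 can be illustrated as follows. The distance used here is an assumption chosen for illustration, a shot-noise-normalized Euclidean distance between mean photon-count spectra (Poisson variance equal to the mean, averaged over the pair); the paper's own definition is given in section SII.B of the Supplementary Information and may differ.

```python
import math


def statistical_distance(mu_a, mu_b):
    """ASSUMED definition: Euclidean distance between mean photon-count
    spectra, each channel normalized by its Poisson (shot-noise) variance."""
    return math.sqrt(sum((a - b) ** 2 / (0.5 * (a + b))
                         for a, b in zip(mu_a, mu_b) if a + b > 0))


def min_distances(spectra):
    """Minimum distance from each material to any other material."""
    return {name: min(statistical_distance(s, spectra[other])
                      for other in spectra if other != name)
            for name, s in spectra.items()}


def identifiable(spectra, threshold=1.0):
    """Flag materials whose minimum distance exceeds the threshold."""
    return {name: d > threshold for name, d in min_distances(spectra).items()}
```

Two materials with indistinguishable mean spectra (as aluminium and skin become under TeX degeneracy) have distance 0 to each other, so both are flagged as non-identifiable, regardless of how far they are from the rest of the library.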

Extended Data Fig. 7 HADAR detection (TeX vision + AI) beats widely used state-of-the-art thermal detection (conventional thermal vision + AI).

a, Human body detection results based on thermal imaging. b, Human and robot identification results based on HADAR. Detection is performed by thermal-YOLO (YOLO-v5 fine-tuned on the thermal automotive dataset; https://github.com/MAli-Farooq/Thermal-YOLO-And-Model-Optimization-Using-TensorFlowLite), with the detection score/confidence shown together with each bounding box. Owing to TeX degeneracy and the ghosting effect, the human body, the robot (aluminium at 72.5 °C) and the car (paint at 37 °C) emit similar amounts of thermal radiation; hence, the human body visually merges into the car in thermal imaging, whereas the robot is misrecognized as a human body. With our proposed TeX vision, which captures intrinsic attributes, HADAR distinguishes them clearly and yields correct detection. Specifically, we first extract the material regions corresponding to the human (c) and the robot (d), then perform people detection on each region individually and, finally, combine the detection results to form the final HADAR detection (b). We observed that the advantage of HADAR TeX vision over thermal vision shown above is robust and independent of the AI algorithm: a standard computer vision toolbox (the people detector in MATLAB R2021b) also confirms the results. We also observed that HADAR detection is robust against wrong material predictions, even though a few road pixels under the car and around the human leg are predicted as ‘aluminium’ in b and d.
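
The three-step detection pipeline (extract material regions, detect per region, combine) can be sketched as below. The `bbox_detector` is a hypothetical placeholder that simply boxes all masked pixels; it stands in for thermal-YOLO or the MATLAB people detector and, unlike a trained detector, would be stretched by stray mislabelled pixels. Material names and the `targets` mapping are illustrative assumptions.

```python
def mask_for(material_map, material):
    """Boolean mask of pixels whose estimated material matches."""
    return [[px == material for px in row] for row in material_map]


def bbox_detector(mask):
    """Hypothetical stand-in detector: one box (r0, c0, r1, c1) around all
    masked pixels, or no detection if the mask is empty."""
    coords = [(i, j) for i, row in enumerate(mask)
              for j, on in enumerate(row) if on]
    if not coords:
        return []
    rows, cols = zip(*coords)
    return [(min(rows), min(cols), max(rows), max(cols))]


def hadar_detect(material_map, targets, detector=bbox_detector):
    """Run the detector on each material region and merge labelled boxes."""
    detections = []
    for label, material in targets.items():
        for box in detector(mask_for(material_map, material)):
            detections.append((label, box))
    return detections
```

Any detector that accepts a mask (or a masked image) can be passed as `detector`, which mirrors the caption's point that the advantage comes from the material separation rather than from a specific AI model.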

Extended Data Fig. 8 HADAR physics-driven semantic segmentation beats state-of-the-art vision-driven semantic segmentation (thermal vision + AI).

a, Thermal semantic segmentation with DANet (pre-trained on the Cityscapes dataset)40. b, HADAR semantic segmentation transformed from the material map in the estimated TeX vision. c, Ground-truth material map in the ground-truth TeX vision. d, Semantic segmentation transformed from c to approximate the ground-truth segmentation; see section SIII.E of the Supplementary Information for more details of the non-machine-learning transformation. Statistics in the upper table were computed on the first four on-road scenes in the HADAR database with fivefold cross-validation. e–h and the lower table show a typical performance comparison between HADAR and thermal semantics, in which the off-road scene lies outside the training set of DANet. We have also observed consistent results on other non-city scenes in the HADAR database (not shown). This real-world off-road scene is a general example showing the importance of the material fingerprint in detection/segmentation. Because AI enhancement is used only in thermal semantics, the advantage of HADAR semantics clearly stems from TeX vision with physical attributes. In the future, learning-based approaches that convert the material map to semantic segmentation with the help of spatial information may further improve HADAR semantics. mIoU, pixelwise mean intersection over union. Ground truths of the real-world scene were manually annotated.

Extended Data Fig. 9 Unmanned HADAR thermography reaching the Cramér–Rao bound.

By exploiting spectral information and automatically identifying the target, HADAR maximizes temperature accuracy beyond traditional methods. a–d, A HADAR alphabet sample made of plastics at 312.15 K on an unpolished silicon wafer at 317.15 K. a, Optical image. b, Thermograph taken with a FLIR A325sc, showing camouflage and a lack of information. c, HADAR material readout. d, HADAR temperature readout. b and d share the same colour bar, which clearly demonstrates the HADAR advantage. e,f, Measurement of a uniform n-type SiC sample kept on a heating plate with varying heating power. e, Mean temperature readout shows that HADAR is unbiased and beats a commercial infrared thermograph. f, Root mean square error shows that HADAR reaches the Cramér–Rao bound (CRB) for the given detector and imaging system. HADAR also beats a commercial thermocouple in precision.
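
The comparison in panel f (RMSE versus CRB) can be illustrated with a shot-noise-only model. This sketch assumes independent Poisson photon counts per spectral channel, N_ν = scale · B_ν(T)/(hcν), where `scale` is a hypothetical lumped factor (étendue, bandwidth, integration time, efficiency); the Fisher information is then the sum over channels of (dN_ν/dT)²/N_ν, and the square root of its inverse bounds the temperature standard deviation of any unbiased estimator. Real detectors add read noise and other terms that this model ignores.

```python
import math

H, C, KB = 6.62607015e-34, 2.99792458e8, 1.380649e-23  # SI constants


def planck_wavenumber(nu, temp):
    """Planck spectral radiance per unit wavenumber (nu in 1/m, temp in K)."""
    return 2.0 * H * C**2 * nu**3 / math.expm1(H * C * nu / (KB * temp))


def photon_counts(nu, temp, scale):
    """Mean detected photons in one channel; `scale` is a hypothetical
    lumped factor (etendue x bandwidth x integration time x efficiency)."""
    return scale * planck_wavenumber(nu, temp) / (H * C * nu)


def crb_std_temperature(temp, nus, scale, dt=1e-3):
    """Square root of the Cramer-Rao bound on T (in K) for independent
    Poisson channels: Fisher information = sum of (dN/dT)^2 / N."""
    fisher = 0.0
    for nu in nus:
        n = photon_counts(nu, temp, scale)
        dn_dt = (photon_counts(nu, temp + dt, scale)
                 - photon_counts(nu, temp - dt, scale)) / (2.0 * dt)
        fisher += dn_dt ** 2 / n
    return 1.0 / math.sqrt(fisher)


def rmse(estimates, truth):
    """Root mean square error of repeated temperature readouts."""
    return math.sqrt(sum((x - truth) ** 2 for x in estimates) / len(estimates))
```

Under this model the bound shrinks as 1/√scale, so collecting four times as many photons halves the best achievable temperature standard deviation; an estimator whose `rmse` over repeated readouts matches `crb_std_temperature` is efficient in the sense of panel f.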

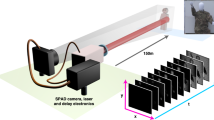

Extended Data Fig. 10 Prototype HADAR calibration and data collection.

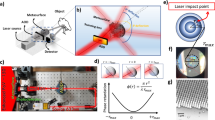

a, Experimental setup of our HADAR prototype-1. b,c, 3D schematics of our prototype HADAR. In our prototype, we used a sturdy stereo mount to capture stereo heat-cube pairs. d, Raw HADAR data, including self-radiation of the detector reflected by the filters. e, HADAR signal calibrated with a uniform reference object to remove the self-radiation of the detector. For more details of calibration, see section SIV of the Supplementary Information.
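
The reference-object calibration in panel e can be sketched per spectral channel under a simple linear detector model, raw = gain · S + offset, where the offset stands for the detector's reflected self-radiation. The linear model, a known per-channel gain and a known reference radiance `s_ref` are all assumptions made for this illustration; section SIV of the Supplementary Information describes the actual procedure.

```python
def estimate_offset(raw_ref, s_ref, gain):
    """Per-channel offset (self-radiation) from a uniform reference object
    of known radiance s_ref, assuming raw = gain * S + offset."""
    return [r - g * s_ref for r, g in zip(raw_ref, gain)]


def calibrate(raw, offset, gain):
    """Invert the assumed linear model to recover the scene signal S."""
    return [(r - o) / g for r, o, g in zip(raw, offset, gain)]
```

With a single uniform reference, only the offset is identifiable from one measurement; estimating an unknown gain as well would need a second reference at a different temperature.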

Supplementary information

Supplementary Information

This document is a merged file containing supplementary methods and discussions.

Supplementary Video 1

TeX vision for a real-world off-road scene.

Supplementary Video 2

Infrared vision for a real-world off-road scene.

Supplementary Video 3

TeX vision for a synthetic on-road scene.

Supplementary Video 4

Infrared vision for a synthetic on-road scene.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Bao, F., Wang, X., Sureshbabu, S.H. et al. Heat-assisted detection and ranging. Nature 619, 743–748 (2023). https://doi.org/10.1038/s41586-023-06174-6


DOI: https://doi.org/10.1038/s41586-023-06174-6

This article is cited by

- Reconfigurable memlogic long wave infrared sensing with superconductors. Light: Science & Applications (2024)

- Heat-vision based drone surveillance augmented by deep learning for critical industrial monitoring. Scientific Reports (2023)

- Heat-assisted imaging enables day-like visibility at night. Nature (2023)

- Extraordinary Optical Transmission Spectrum Property Analysis of Long-Wavelength Infrared Micro-Nano-Cross-Linked Metamaterial Structure. Plasmonics (2023)
