Abstract

Choosing optimal revascularization strategies for patients with obstructive coronary artery disease (CAD) remains a clinical challenge. While randomized controlled trials offer population-level insights, gaps remain regarding personalized decision-making for individual patients. We applied off-policy reinforcement learning (RL) to a composite data model from 41,328 unique patients with angiography-confirmed obstructive CAD. In an offline setting, we estimated optimal treatment policies and evaluated these policies using weighted importance sampling. Our findings indicate that RL-guided therapy decisions outperformed physician-based decision making, with RL policies achieving up to 32% improvement in expected rewards based on composite major cardiovascular events outcomes. Additionally, we introduced methods to ensure that RL CAD treatment policies remain compatible with locally achievable clinical practice models, presenting an interpretable RL policy with a limited number of states. Overall, this novel RL-based clinical decision support tool, RL4CAD, demonstrates potential to optimize care in patients with obstructive CAD referred for invasive coronary angiography.

Introduction

Coronary artery disease (CAD) is a highly prevalent disease that results in a broad spectrum of clinical presentations from stable angina through to life threatening acute coronary syndromes (ACS), inclusive of unstable angina, non-ST elevation myocardial infarction (NSTEMI) and ST-elevation myocardial infarction (STEMI)1,2. Globally, CAD remains the leading cause of death, affecting 295 million people and causing 9.5 million deaths in 2021, along with a significant loss of disability-adjusted life years2,3. Coronary angiography is the reference standard test that is used to define the presence and regional severity of CAD across the heart’s vascular territories, with its findings dominantly used to guide revascularization decisions4. Revascularization generally aims to relieve myocardial ischemia and related downstream complications; however, despite technical advancements in percutaneous and surgical techniques, uncertainty remains about which strategy is best for individual patients5,6.

Randomized controlled trials (RCTs) have been conducted to directly compare the three available treatment strategies of percutaneous coronary intervention (PCI), coronary artery bypass graft (CABG), and medical therapy only (MT) in patients with obstructive CAD. The COURAGE trial, involving 2287 patients with obstructive CAD, found that PCI combined with medical treatment did not reduce the risk of mortality or major cardiovascular events compared to MT alone in patients with stable disease7. Similarly, the BARI-2D trial, enrolling 2368 patients with type 2 diabetes, showed no significant difference in mortality or major cardiovascular events between intensive MT alone versus revascularization (PCI or CABG) plus MT8. Other studies have also compared PCI or CABG with MT across various CAD patient groups9,10,11,12,13. However, these RCTs have been consistently conducted and reported at the population level, applying strict inclusion/exclusion criteria and not considering patient-specific variables that might influence individual treatment outcomes. Therefore, personalized approaches, particularly those leveraging machine learning (ML) methods, are of expanding interest.

Personalized revascularization decision making is uniquely challenged by a need to simultaneously consider coronary anatomic and lesion variation in the context of complex medical profiles and current patient health status. Choices between PCI and CABG are also highly dependent on individual circumstances, inclusive of single-vessel versus multi-vessel disease14 where CABG has been shown to offer a superior reduction in mortality and adverse events15,16,17,18,19. In contrast, other studies have shown no significant difference between PCI and CABG in less complex cases and the guidelines recommend PCI for these cases and those at higher surgical risk5,6,13. However, patients with greater disease complexity, such as those with high SYNTAX scores20 (a standardized scoring of CAD extent on invasive coronary angiography), demonstrate improved outcomes when managed by CABG versus PCI21. Despite their utility22,23,24, standardized coronary anatomic lesion scores do not account for other clinical variables highly relevant to clinical decision making24. Increasingly, a migration toward composite, multi-domain electronic health information with ML-based personalized decision making is being explored to address these challenges25,26,27,28,29,30,31.

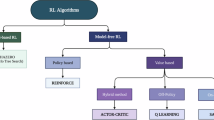

Reinforcement learning (RL) is a type of ML focused on choosing the best possible actions to maximize rewards from a given environment, generally modeled using a Markov decision process (MDP). An MDP is defined by the tuple {S, A, T, R, γ}, where S is a finite state space, A is a finite action space, T is the transition matrix determining the probability of moving to a new state, R is the reward function, and γ is the discount factor. A given policy π is defined as the probability distribution over possible actions for each state. An RL agent learns the best policies through sequential decision-making by interacting with the environment. One major RL algorithm, Q-learning (QL), optimizes sequential problems by predicting the future rewards for actions taken in specific states and storing them in a Q-matrix32. With deep learning advancements, Deep Q-learning (DQN) can leverage neural networks to estimate these action-values, improving the stability and efficiency of training33. RL algorithms have been used in healthcare applications34 such as treating cancer (managing chemotherapy and radiotherapy)35,36,37, diabetes38, HIV39, and managing patients in intensive care units40,41,42. These studies have demonstrated that RL policies can outperform current clinical policies in improving patient outcomes.
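To make the Q-matrix idea concrete, the following is a minimal sketch of tabular Q-learning applied to a fixed batch of logged transitions. It is an illustration under stated assumptions, not the implementation used in this study: the function name `offline_q_learning`, the learning rate, the number of epochs, and the toy transitions with their rewards are all hypothetical.

```python
import numpy as np

def offline_q_learning(transitions, n_states, n_actions,
                       gamma=0.99, lr=0.1, n_epochs=200, seed=0):
    """Tabular Q-learning over a fixed batch of (s, a, r, s_next, done) tuples."""
    rng = np.random.default_rng(seed)
    q = np.zeros((n_states, n_actions))
    transitions = list(transitions)
    for _ in range(n_epochs):
        # Replay the logged transitions in a fresh random order each epoch.
        for i in rng.permutation(len(transitions)):
            s, a, r, s_next, done = transitions[i]
            # Bootstrapped target: reward plus discounted best next-state value.
            target = r if done else r + gamma * q[s_next].max()
            q[s, a] += lr * (target - q[s, a])
    return q

# Toy batch (invented values): 2 states, 3 actions (0 = MT, 1 = PCI, 2 = CABG).
toy = [
    (0, 0, 0.2, 1, False),
    (0, 1, 0.5, 1, False),
    (0, 2, 0.9, 1, True),
    (1, 1, 0.4, 1, True),
    (1, 2, 0.8, 1, True),
]
q_table = offline_q_learning(toy, n_states=2, n_actions=3)
greedy_policy = q_table.argmax(axis=1)  # best action index per state
```

Note that the pair (state 1, action 0) never appears in the toy batch, so its Q-value stays at its initial value of zero. This is one way to see why actions that are rare in the logged data are hard to evaluate offline.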

In this study we aimed to explore the value of RL to learn optimal revascularization decisions that result in a maximal reduction of major adverse cardiovascular events (MACE) in a large cohort of patients with obstructive CAD. The performance of our optimal RL policies (RL4CAD models) developed using traditional QL, DQN, and Conservative Q-learning (CQL) algorithms was compared to conventional physician-based policies in an offline setting.

Results

Population characteristics

Table 1 provides a summary of the study cohort extracted from the Alberta Provincial Project for Outcome Assessment in Coronary Heart Disease (APPROACH)43,44 Registry with a description of relevant disease characteristics.

From the available 41,328 patient datasets, a total of 43,312 discrete episodes of care were identified. From these we randomly selected (at a patient level) 30,300 and 8682 episodes for training and test sets, respectively, reserving 4330 for hold-out validation (for neural networks). The average number of transitions per episode and the average accumulated reward (without discounting) for each episode were 1.14 ± 0.45 and 0.62 ± 0.60 for both sets, respectively. Figure 1 shows histograms of all episode lengths and accumulated rewards. Additionally, the average number of episodes per patient was 1.05 ± 0.22 for both sets.
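A patient-level split means that all episodes of a given patient fall into the same set, which avoids leakage between training and test data. The sketch below illustrates one way to do this. The function name `split_by_patient` and the toy episode labels are hypothetical, and the 70/20/10 fractions are only approximations inferred from the episode counts reported above.

```python
import random
from collections import defaultdict

def split_by_patient(episodes, fractions=(0.7, 0.2, 0.1), seed=0):
    """Split (patient_id, episode) pairs so each patient lands in exactly one set."""
    by_patient = defaultdict(list)
    for patient_id, episode in episodes:
        by_patient[patient_id].append(episode)
    # Shuffle patients, not episodes, so a patient's episodes stay together.
    patient_ids = sorted(by_patient)
    random.Random(seed).shuffle(patient_ids)
    n_train = round(fractions[0] * len(patient_ids))
    n_test = round(fractions[1] * len(patient_ids))
    groups = {
        "train": patient_ids[:n_train],
        "test": patient_ids[n_train:n_train + n_test],
        "validation": patient_ids[n_train + n_test:],
    }
    return {name: {pid: by_patient[pid] for pid in ids}
            for name, ids in groups.items()}

# Toy data: 20 patients, every second patient has two episodes.
toy_episodes = [(pid, f"episode_{pid}_{k}")
                for pid in range(20) for k in range(1 + pid % 2)]
splits = split_by_patient(toy_episodes)
```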

Evaluation of traditional Q-learning models

We used the Weighted Importance Sampling (WIS) method to evaluate \({\pi }_{{O}_{n}}\) for all \(n\in \left[2,1000\right)\), where n represents the number of states extracted from K-means clustering and \({\pi }_{{O}_{n}}\) is the optimal policy in that state space. The behavior policy in all evaluations was \({\pi }_{{B}_{best}}\). Figure 2 shows the expected rewards calculated by this analysis for both stochastic and greedy types of RL and the physician policy. In this analysis, the lower bound (LB) of the stochastic optimal policy was higher than the upper bound (UB) of the stochastic physician policy for all \(n\in \left[2,1000\right)\) in the test set. This also applied to the greedy policies for all \(n\in \left[10,1000\right)\).

This figure illustrates the expected rewards from optimal QL-based policies and physician policies as the number of states (clusters) increases. Here \({\pi }_{{B}_{best}}\) (the policy best representing physicians’ decision-making patterns) is used as the behavior policy for off-policy evaluation. Blue circles and green triangles represent the stochastic optimal and physician policies, respectively, while orange squares and red rhombuses represent the greedy optimal and physician policies. Panel (a) shows results from the training dataset, and panel (b) shows results from the test dataset.
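For readers unfamiliar with WIS, the estimator reweights each logged episode's return by the ratio of evaluation-policy to behavior-policy action probabilities, then normalizes by the sum of those ratios. The following is a minimal sketch of the standard trajectory-level estimator. The function name `wis_estimate`, the default discount of 1.0, and the toy policies and episodes are assumptions made for illustration and are not taken from the study.

```python
import numpy as np

def wis_estimate(trajectories, pi_eval, pi_behavior, gamma=1.0):
    """Weighted importance sampling estimate of the evaluation policy's value.

    trajectories: list of episodes, each a list of (state, action, reward).
    pi_eval, pi_behavior: arrays of shape (n_states, n_actions) with action
    probabilities per state.
    """
    weights, returns = [], []
    for episode in trajectories:
        rho, g = 1.0, 0.0
        for t, (s, a, r) in enumerate(episode):
            # Cumulative importance ratio along the episode.
            rho *= pi_eval[s, a] / pi_behavior[s, a]
            g += (gamma ** t) * r
        weights.append(rho)
        returns.append(g)
    weights = np.asarray(weights)
    returns = np.asarray(returns)
    # Normalizing by the sum of weights (not the episode count) is what
    # distinguishes WIS from ordinary importance sampling.
    return float(np.sum(weights * returns) / np.sum(weights))

# Toy example: one state, two actions, four one-step episodes.
pi_b = np.array([[0.5, 0.5]])
pi_e = np.array([[0.2, 0.8]])
episodes = [[(0, 0, 0.0)], [(0, 1, 1.0)], [(0, 1, 1.0)], [(0, 0, 0.0)]]
value_eval = wis_estimate(episodes, pi_e, pi_b)      # 0.8
value_behavior = wis_estimate(episodes, pi_b, pi_b)  # 0.5, the plain mean
```

Evaluating the behavior policy against itself gives unit weights and recovers the observed mean return, which is a useful sanity check.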

Furthermore, we used \({\pi }_{{B}_{n}}\), the physicians’ policy in a state setting with n clusters, as the behavior policy for each \({\pi }_{{O}_{n}}\) with \(n\in \left[2,1000\right)\) to ensure that the optimal policies outperform the physicians’ model in the same setting as well. The expected rewards in this analysis are shown in Fig. 3. Similar to the previous analysis, the LB of the stochastic optimal policy exceeded the UB of the stochastic physician policy for all \(n\in \left[2,208\right)\) in the test set. This trend was also observed for the greedy policies for all \(n\in \left[10,253\right)\).

This figure illustrates the expected rewards from optimal QL-based policies and physician policies as the number of states (clusters) increases. Here \({\pi }_{{B}_{n}}\) (the physician policy with the same number of clusters as n) is used as the behavior policy for off-policy evaluation. Blue circles and green triangles represent the stochastic optimal and physician policies, respectively, while orange squares and red rhombuses represent the greedy optimal and physician policies. Panel (a) shows results from the training dataset, and panel (b) shows results from the test dataset.

We selected \({\pi }_{{O}_{84}}\) as the representative of these models for the remaining portions of our analyses, as it achieved the maximum expected rewards for both stochastic and greedy policies among models with fewer than 100 clusters. The reason for this choice was to select a robust, high-performance model while keeping it as simple as possible for interpretability (i.e., fewer states). This model achieved expected rewards of 0.78825 (CI: 0.78823, 0.78827) and 0.73016 (CI: 0.73015, 0.73017) for its greedy and stochastic policies, respectively. These represent 27% and 18% improvements, respectively, compared to the greedy behavior policy’s expected rewards of 0.62 (CI: 0.617, 0.621). We also shuffled the recommended actions by this policy among test patients (repeat = 100 times) and the average WIS-based expected rewards were 0.77 (CI: 0.770, 0.778) and 0.72 (CI: 0.715, 0.720) for greedy and stochastic policies, respectively. This indicates that the performance of this policy consists of both choosing better actions and assigning them to the right patients.
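As a quick check of the quoted percentages, the relative improvements follow directly from the reported point estimates, taking the greedy behavior policy’s expected reward of 0.62 as the baseline:

\[
\frac{0.78825-0.62}{0.62}\approx 0.271,\qquad \frac{0.73016-0.62}{0.62}\approx 0.178,
\]

which round to the 27% and 18% stated above.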

We also found that a simple average of the stochastic optimal and physician’s policies in this state setting could achieve expected rewards of 0.6668 (CI: 0.6646, 0.6690), which remained higher than the stochastic behavior policy’s expected rewards of 0.6136 (CI: 0.6124, 0.6147).
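Averaging two stochastic policies defined over the same states amounts to a convex combination of their action probabilities. The sketch below shows this operation. The function name `average_policies`, the `weight` parameter, and the toy probabilities are hypothetical, and an equal weight of 0.5 corresponds to the simple average described above.

```python
import numpy as np

def average_policies(pi_a, pi_b, weight=0.5):
    """Convex combination of two stochastic policies, shape (n_states, n_actions)."""
    pi_a = np.asarray(pi_a, dtype=float)
    pi_b = np.asarray(pi_b, dtype=float)
    mixed = weight * pi_a + (1.0 - weight) * pi_b
    # Renormalize each row to guard against rounding drift.
    return mixed / mixed.sum(axis=1, keepdims=True)

# Toy policies over 2 states and 3 actions (MT, PCI, CABG); values are invented.
pi_optimal = np.array([[0.1, 0.2, 0.7], [0.3, 0.3, 0.4]])
pi_physician = np.array([[0.3, 0.6, 0.1], [0.5, 0.4, 0.1]])
pi_average = average_policies(pi_optimal, pi_physician)
```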

Interpretation of the optimal Q-learning model

The discrete and limited number of states in the traditional Q-learning model allows us to effectively interpret the optimal policy. Supplementary Fig. 1 compares the greedy optimal policy of \({\pi }_{{O}_{84}}\) and the greedy physician policy of \({\pi }_{{B}_{84}}\) at the cluster level and Fig. 4 represents a heatmap of the stochastic policies. Generally, the optimal policy tends to prescribe more CABG treatment in most states, whereas the physicians usually performed PCI.

This figure presents a heatmap showing the probability of each possible action across different states for three policies: the Optimal Policy derived from Traditional Q-Learning, the Physician Policy based on the physicians’ decision-making model, and an Average Policy combining the two. All policies are evaluated in a setting with 84 states.

Figure 5 shows the top 20 features contributing to that cluster setting. The details of the trained XGBoost model to calculate the Shapley values are provided in Supplementary Table 4.

This heatmap shows the relative importance of the top 20 features calculated using the Shapley method. The feature center for each state (the center of the cluster in the k-means results) is shown inside the corresponding cell. The chosen optimal treatment for each state is color-coded on the state number (Red: CABG, Blue: PCI, Green: MT). The trained XGBoost model details for calculating Shapley values are in Supplementary Table 4.

Evaluation of deep Q-network and conservative Q-learning models

We evaluated the DQN and CQL models on the test set using the WIS method and the behavior policy of \({\pi }_{{B}_{best}}\). Table 2 presents the expected rewards of each model for both stochastic and greedy policies. Since the α parameter of the CQL method significantly influences the model’s conservatism (i.e., the extent to which it takes actions similar to those of physicians), we present results for various values of α. Lower α values result in a policy similar to the DQN policy, while higher α values align more closely with behavior cloning, thereby mimicking the physicians’ actions. According to these results, the most conservative policy (CQL, α = 0.5) led to approximately a 3% increase in expected rewards on the test set. In contrast, the least conservative policy (CQL, α = 0.001), and the DQN policy, resulted in 32% and 31% increases in expected rewards, respectively. Furthermore, we provided the results of the shuffled policies associated with each RL policy in the same table (Shuffled – Test column). These results demonstrate that the RL policies not only choose better-performing actions, but also, in cases where they are constrained by the behavior policy, attempt to assign the actions to the best-suited patients.
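The shuffling control can be read as a permutation test: the policy's recommendations are randomly reassigned across patients, so the overall mix of actions is preserved while the patient-specific targeting is destroyed. The sketch below illustrates this idea for a greedy policy on one-step episodes. It is an assumption-laden illustration rather than the study's procedure: the function names, the constant behavior probability of 0.5, and the synthetic data are all hypothetical.

```python
import numpy as np

def greedy_wis(recommended, taken, behavior_prob, rewards):
    """WIS value of a deterministic policy on one-step episodes."""
    # A greedy policy has probability 1 on its recommendation, 0 elsewhere.
    w = (recommended == taken) / behavior_prob
    return float(np.sum(w * rewards) / np.sum(w))

def shuffled_control(recommended, taken, behavior_prob, rewards,
                     repeats=100, seed=0):
    """Average WIS value after randomly permuting recommendations across patients."""
    rng = np.random.default_rng(seed)
    values = [greedy_wis(rng.permutation(recommended), taken,
                         behavior_prob, rewards)
              for _ in range(repeats)]
    return float(np.mean(values))

# Synthetic data: reward is 1 only when the action taken matches the patient type.
idx = np.arange(200)
patient_type = idx % 2
taken = (idx // 2) % 2
rewards = (taken == patient_type).astype(float)
behavior_prob = np.full(200, 0.5)
recommended = patient_type.copy()  # a perfectly targeted toy policy

targeted_value = greedy_wis(recommended, taken, behavior_prob, rewards)
shuffled_value = shuffled_control(recommended, taken, behavior_prob, rewards)
```

In this toy setup both actions are equally good on average, so the whole gap between `targeted_value` and `shuffled_value` comes from assigning actions to the right patients.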

We also provided the hyperparameter tuning details of DQN and CQL methods in Supplementary Table 3. Additionally, we have provided the Per-Decision Importance Sampling (PDIS) and Per-Decision Weighted Importance Sampling (PDWIS) evaluation results in Supplementary Table 5. In these evaluations, all of the RL policies outperformed the behavior policy, both for the stochastic and greedy variants.

Policy similarities

We visualized the recommendations of each policy using the t-SNE method (Fig. 6).

These plots use t-SNE visualization to illustrate the treatment decisions made by each greedy policy in the test set. Green, blue, and red dots represent Medical Therapy alone, PCI, and CABG, respectively. Each panel shows decisions from the following: (a) Real treatments received, (b) the best behavior policy from physicians’ decisions, (c) the best Traditional QL policy, (d) DQN policy, (e) CQL policy with α = 0.001, (f) CQL policy with α = 0.01, (g) CQL policy with α = 0.1, and (h) CQL policy with α = 0.5.

Furthermore, we provide relevant statistics for choices made by each policy in Supplementary Fig. 2. Generally, policies that recommended more CABG tended to show better expected rewards. The DQN policy, specifically, recommended the highest rate of CABG, despite this not being common in the behavior policy. Traditional QL and CQL policies, however, have different mechanisms to prevent the policy from deviating too much from the behavior policy. In traditional QL, we employed k-means clustering to summarize features into clusters, and if an action is very rare in a cluster, it is less likely to be recommended after QL training. On the other hand, the CQL policy incorporates a regularization term into the loss function that prevents overly optimistic estimation of Q-values for actions not taken in the behavior policy. This mechanism is controllable by the α parameter. As observed in Fig. 6 and Supplementary Fig. 2, as the α increases in CQL, the distribution of recommended actions becomes more similar to the behavior policy.
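The role of α can be illustrated with the commonly used discrete-action form of the CQL regularizer, in which the penalty is the log-sum-exp of the Q-values over all actions minus the Q-value of the logged action. The sketch below assumes this standard form. The exact loss used in this study is not specified in this section, and the function names and toy Q-values are hypothetical.

```python
import numpy as np

def cql_penalty(q_values, actions_taken):
    """Mean over the batch of logsumexp_a Q(s, a) - Q(s, a_logged)."""
    q_values = np.asarray(q_values, dtype=float)
    # Numerically stable log-sum-exp over the action axis.
    row_max = q_values.max(axis=1, keepdims=True)
    logsumexp = row_max[:, 0] + np.log(np.exp(q_values - row_max).sum(axis=1))
    q_logged = q_values[np.arange(len(q_values)), actions_taken]
    return float(np.mean(logsumexp - q_logged))

def cql_loss(td_loss, q_values, actions_taken, alpha):
    """Temporal-difference loss plus the alpha-weighted conservative penalty."""
    return td_loss + alpha * cql_penalty(q_values, actions_taken)

# Toy batch: one state where the network strongly favors action 2 (CABG).
q_batch = np.array([[1.0, 1.0, 5.0]])
penalty_if_logged_mt = cql_penalty(q_batch, np.array([0]))
penalty_if_logged_cabg = cql_penalty(q_batch, np.array([2]))
```

The penalty is large when the network assigns high value to an action the physicians did not take and small when the favored action matches the logged one, so increasing α pulls the learned policy toward the behavior policy.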

Additionally, we calculated the agreement of different policies with the treatments that patients in the test set received. Table 3 shows the ratios of total agreement between the two policies and the agreement for revascularization treatment (PCI or CABG). CQL policy with α = 0.5 (most conservative case) has the highest agreements with the received treatments.
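One plausible reading of the two agreement measures is sketched below: total agreement counts exact matches among MT, PCI, and CABG, while revascularization agreement only asks whether both sides chose revascularization (PCI or CABG) or both chose MT. This interpretation, the function name `agreement_rates`, and the toy labels are assumptions and may differ from the definitions used for Table 3.

```python
def agreement_rates(recommended, received, revasc_actions=("PCI", "CABG")):
    """Return (total agreement, agreement on revascularization vs. no revascularization)."""
    if len(recommended) != len(received):
        raise ValueError("recommended and received must have the same length")
    pairs = list(zip(recommended, received))
    # Exact match on the three-way treatment label.
    total = sum(rec == got for rec, got in pairs) / len(pairs)
    # Match after collapsing PCI and CABG into a single revascularization class.
    revasc = sum((rec in revasc_actions) == (got in revasc_actions)
                 for rec, got in pairs) / len(pairs)
    return total, revasc

# Toy example with four patients.
policy_actions = ["CABG", "PCI", "MT", "CABG"]
received_actions = ["PCI", "PCI", "MT", "MT"]
total_agreement, revasc_agreement = agreement_rates(policy_actions, received_actions)
```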

Subgroup and sensitivity analyses

We provided a subgroup analysis of the expected rewards of each greedy policy stratified based on sex (female or male), age (below or above median), acuteness of CAD (ACS or non-ACS), and the APPROACH jeopardy score (below or above median). As shown in Fig. 7, RL policies, unlike the behavior policy, generally tend to reduce the gap between the stratified groups while achieving superior or nearly equivalent rewards.

Moreover, we conducted a sensitivity analysis by replacing our original imputation method (median imputation) with multiple imputation by chained equations using random forests. This change improved the best greedy policy of the Traditional QL model by approximately 4%; however, it did not result in any significant improvement for DQN and CQL policies. The slight improvement in the Traditional QL method may be attributed to better imputation of missing values, which could lead to more accurate state determinations.

We also provided the results of the sensitivity analyses for different rewards in Supplementary Table 7. Although the absolute expected reward values changed with the application of different functions (due to the removal of certain criteria from the MACE definition), the overall superiority of the RL models compared to the behavior policy remained consistent.

Discussion

In this study we demonstrated the potential for QL and RL to optimize CAD revascularization decision making using offline population data. Policies recommended by our trained RL algorithms demonstrated significant improvements in patient outcomes compared to a physician behavior policy, suggesting strong potential for the delivery of personalized clinical decision making in this patient population.

We observed a majority of the optimal RL policies to prioritize CABG over other treatment strategies for the achievement of freedom from MACE. These RL-based recommendations are in alignment with prior clinical trials and observational studies similarly demonstrating a superior capacity for CABG to improve long-term clinical outcomes in patients with obstructive CAD15,16,17,18,19. However, we recognize that increased adoption of surgical revascularization may not be feasible nor desired due to inherent system constraints or patient preference toward minimally invasive approaches. To address this, we trained CQL policies that are particularly well-suited for mitigating marked shifts from current clinical behavior. Even the most conservative of these policies (α = 0.5) achieved an improvement in clinical outcomes while maintaining similar engagement rates of invasive revascularization strategies. Using such approaches the level of conservatism can be fine-tuned to align final treatment distributions to match each healthcare system’s access to scarce yet optimal resources.

Traditional QL models remained the most interpretable of our RL4CAD models due to their limited number of states. As illustrated in Fig. 5, the impact of each feature on policy recommendations was observed across each unique patient state. For example, treatment location (Calgary versus Edmonton) strongly influenced the definition of patient states. Probabilities for PCI and MT in physicians’ policies were significantly different (two-tailed t-test P < 0.05) between cities, whereas no differences were observed for our RL policy when modeled across both locations. This suggests clinical decision making was strongly influenced by local practice cultures, but this was effectively balanced by the RL policy that was trained across both environments. Therefore, the RL policy offers the potential to harmonize decision making across health systems to promote equality and equity.

The inherent complexity of patients with obstructive CAD and the high dimensionality of data captured for this patient population make therapeutic decision making a suitable problem for the application of ML. ML techniques have now been effectively utilized to diagnose CAD25,26 and predict clinical CAD-related outcomes, including future mortality and MACE, using various types of data, such as angiography, demographics, symptoms and examinations, electrocardiograms, laboratory results, and echocardiography27,28,29,30,31. The ML algorithms trained across all of these studies used supervised learning methods and were not aimed toward directly recommending treatment strategies for individual patients. Further, none evaluated the performance of AI-driven policies using off-policy evaluation. This study, to the best of our knowledge, is the first to utilize RL and QL-based methods with off-policy evaluation to optimize CAD treatments.

Bertsimas et al.31 developed an ML-based algorithm called ML4CAD based on a supervised outcome prediction model to recommend the best CAD treatment strategy among CABG, PCI, and three types of drug strategies (combinations of statin and/or blockers). They simulated performance of their policy (outcome prediction model) and indicated that their policy could provide significant advantages over a conventional physician-guided policy. Importantly, their analysis treated each cardiac catheterization independently and did not consider the sequential and multi-step nature of CAD treatments (i.e., choosing a different treatment after failure of the first one). They observed that their policy agreed with the physicians’ policies only 28.2% of the time, similarly identifying a need for higher adoption of CABG for the optimal reduction in MACE. In contrast, we introduced CQL, which allows this agreement to be adjustable to some degree, achieving more than 50% agreement while still recommending a better policy. Conversely, their physicians’ policy included more CABG treatments, whereas ours predominantly featured PCI and MT. This difference could be attributed to variations in healthcare systems.

Traditionally, RL algorithms are trained in an online setting through extensive interactions with the target environment. This is largely not feasible in live healthcare settings due to safety and ethical concerns surrounding “trial and error” learning. However, training offline RL models that objectively and consistently imitate or out-perform physician behavior may provide a viable option to safely introduce RL models into the decision-making process.

The choice of which RL algorithms should be deployed into clinical settings depends on the preferences of all stakeholders (clinicians, patients, and providers) and their conditions of use. The reward function for optimization used in this study was focussed on major clinical outcomes, which are not the sole consideration for decisions made by patients, healthcare providers, or payers. CAD treatments are frequently influenced by patient preferences (e.g., reluctance to undergo surgery), local resource access (e.g., operating room time), and economic feasibility (e.g., payer capacity), which are not reflected in our reward function. Given the challenge of considering all such factors, models that are strongly driven toward achieving maximal clinical reward (e.g., DQN model) may not always be realistic or feasible for clinical implementation. In this context CQL models present a unique solution to improve alignment with real world practice, being tuned to maximally align with local practice when treatment decisions are predicted to lead to a desired outcome, deviating only when this condition is not met.

On the other hand, traditional QL provides a valuable tool for physicians to interpret and evaluate the recommended optimal policy. The discrete and limited state space of this algorithm allows for greater interpretability within each cluster of patients, helping users to understand the reasons behind the optimality of specific treatments. We demonstrated these interpretations for one of the best models trained by this approach. This type of model may be ideal for settings where clinicians are actively involved in the decision pipeline (i.e., human in the loop) to refine recommendations based on their knowledge and experience. Furthermore, the cluster centers provided by this method can be used as representatives of each cluster’s patients in future studies to explore discrepancies between RL and other policies.

In general, while online RL may not be feasible or appropriate for training clinical care models, models initially trained using offline data can, once deemed clinically acceptable, be refined through future online optimization. This approach is especially promising for CAD treatments, where data shifts can change the optimality of policies.

Despite its strengths, this study had some limitations. First, the major limitation of this study was the retrospective (offline) approach to modeling and evaluation of RL policies. Although WIS is a widely used technique to estimate the expected rewards of new policies from behavior policies, it cannot guarantee the accuracy of estimations in real world settings. Many factors, such as the choice of a calibrated stochastic behavior policy and complexity of the problem in trajectories that are not sufficiently explored through the behavior policy (the physicians’ trajectories), can affect accuracy. Therefore, we believe that policies which deviate significantly from the behavior policy (like the DQN policy in this study) should be analyzed further and engaged cautiously. Future studies, inclusive of randomized clinical trials, will be required to test the capacity of RL policies to improve clinical outcomes versus regular physicians’ policies, and to evaluate the impact on healthcare resource use.

Second, the formulation of the clinical problem as an MDP increased the complexity of the model. This decision was based on the fact that CAD treatment is a clinical care journey with multistep encounters (e.g., multiple catheterizations and interventions). The majority of the trajectories used in this study remained one step, making these similar to a multi-armed contextual bandit problem. However, we did not utilize this formulation as we were interested in estimating cumulative and long-term outcomes across all patients. Future works may explore this option and compare it with the MDP assumption. A multi-armed contextual bandit is a simpler model to implement, particularly in online applications (after deploying the offline-trained policies in practice), as it can be updated and interpreted more easily.

Third, our choice of a three-year horizon to assess future outcomes was a limitation. This choice was based on data availability, as three years of clinical follow-up was the minimum follow-up consistently available across all subjects. We normalized rewards according to the time to event, which provided relative importance to earlier-occurring events. In post-hoc evaluation we formed MDP episodes with a five-year follow-up period, and the average number of transitions per episode did not differ significantly. However, the average rewards decreased to 0.65; this was anticipated, as more adverse outcomes are expected during longer follow-up periods, leading to lower net benefit. The overall distribution of the rewards was similar to the distribution observed for the three-year follow-up. This indicates that the three-year horizon is a good trade-off, in our case providing over 20% more data to train the model. Nevertheless, future work may investigate larger windows to understand the effects of treatments over longer periods.

Fourth, our design of the reward function focussed only on clinical outcomes. In real world practice, CAD treatment, and especially choice of revascularization (i.e., PCI vs. CABG), depends on numerous complex factors such as cost, patient preference, and anticipated impact on health-related quality of life. Incorporating these factors into the reward function with appropriate weights is an excellent candidate for future research.

Fifth, the action space used in this study was simple. CAD treatments are complex and vary depending on each individual patient’s condition contextualized by contemporary clinical practice recommendations. Multiple types of PCI (e.g., drug-eluting versus bare-metal stents or the use of fractional flow reserve) and CABG techniques (e.g., arterial versus venous conduits) are available, and these have been accompanied by dynamic changes in the availability and adoption of medical therapeutics over the past decade. Our simplified modeling of three therapeutic strategies, trained over 10 years of longitudinal clinical data, must be acknowledged to be a partial, albeit pragmatic, representation of clinical acumen. However, future studies may explore therapeutic options in more detail. One potential approach to address this complexity is to prune the action space by first determining the major level of treatment using a general model (such as ours) and then employing additional models to decide on more detailed actions45,46.

Sixth, the generalizability of the RL4CAD models across different sites needs to be further investigated. Although we used a hold-out test set to evaluate the models, factors such as patient differences, healthcare systems, and local clinical practices may affect the performance of policies in various groups. These differences raise questions about whether the same model can be directly applied in different clinical contexts. External validation of RL-based policies across different datasets (e.g., different locations or patient groups) using off-policy evaluation could be a promising area for future research.

In this study, we investigated the potential for offline RL algorithms to optimize treatment decision making in patients with obstructive CAD. Optimal RL models were shown to outperform physician behavior in terms of cumulative rewards, leading to improved clinical outcomes. This benefit was dominantly appreciated through the prioritized recommendation of surgical revascularization in patients at greatest likelihood of benefit. Incrementally, we demonstrated the capacity of CQL to permit the titration of RL-based practice modifications to maximally align with current physician practice. Overall, the results of our study advance our understanding of how CAD treatment may be personalized through the adoption of ML-based clinical decision support.

Methods

In this study, we performed various types of QL, a well-known off-policy algorithm for RL. While RL algorithms beyond the healthcare field are typically employed in online settings where agents can interact with an environment to learn the optimal policy, implementation in healthcare must rely on offline data. This is due to safety concerns and regulatory limitations surrounding the active modification of clinical decisions for algorithm learning purposes. Our offline data consisted of a large volume of clinical decisions previously made by physicians across a broad spectrum of clinical patients and circumstances. We employed traditional QL, DQN, and CQL to optimize treatments for CAD based on reward functions derived from downstream clinical outcomes. We searched different hyperparameter spaces for each method and compared extracted optimal RL policies with the policy established by the behavior of practicing physicians. In this section, we provide an overview of the data resources and techniques utilized in the study.

Data sources

This study used a cohort of 41,328 unique adult (≥18 years old) patients with obstructive CAD undergoing 49,521 unique cardiac catheterizations. Data were sourced from the APPROACH registry43,44 and included index encounters from January 2009 to March 2019. APPROACH is among the world’s most extensive databases for CAD management, linking unique disease phenotypes with detailed clinical data and relevant patient outcomes for individuals in Alberta, Canada who have undergone diagnostic cardiac catheterization and/or revascularization procedures. The data schema of APPROACH included core demographics, point-of-service clinical health variables (inclusive of comorbidities, laboratory tests, medications, referral indications for coronary catheterization, and vital signs), and all current and prior procedural parameters collected during catheterization and/or revascularization procedures. Standardized outputs of the CARAT™ (Cohesic Inc., Calgary, AB) reporting tool were also provided; CARAT™ is specialized CAD reporting software that lets clinicians interactively generate patient-specific coronary anatomic models characterizing the location, extent, and specific features of coronary lesions and the corresponding revascularization procedures. Obstructive CAD was defined as any stenosis ≥50% in the left main coronary artery or ≥70% in any other coronary artery, or both. Index presentations for STEMI were excluded a priori given established guideline recommendations to deliver immediate primary PCI of the culprit lesion.

Alberta provincial health records of the studied cohort were collected through Alberta Health Services (AHS) administrative databases. The dataset consisted of various tables, as outlined in Supplementary Table 1. This study was approved by the Conjoint Health Research Ethics Board at the University of Calgary (REB20-1879). Informed consent was waived due to the retrospective nature of the data and the impracticality of obtaining consent from the large number of patients involved. All data were deidentified, and no compensation was provided to participants as there was no direct interaction with them. Additionally, our study complies with the TRIPOD+AI47 guidelines for reporting. The checklist is included in the Supplementary Information.

Data preprocessing

Decision models were developed using an MDP and were given access to an expert-reviewed selection of candidate patient health features, drawn from all data resources, from which patient states were determined. The majority of selected patient features were from the APPROACH data schema, including core demographic information, comorbidities, and standardized angiography findings provided by the CARAT™ system. Selected additional features from administrative databases were also included: specific ICD-10-CA codes, medication ATC codes, and routinely performed laboratory tests. ICD-10-CA and ATC codes were selected based on our previous study48, while the lab tests were chosen for their relevance to CAD and availability. These features were aggregated over the one-year period prior to each catheterization encounter. In total, 402 features were presented to the decision models, with details provided in Supplementary Table 2.

The cohort dataset was divided into three parts at a patient level: 70% for training, 20% for testing, and 10% for validation purposes (for neural networks). We normalized the continuous features using a Min-Max scaler and one-hot encoded all categorical variables. Missing data were imputed using the median value of each feature.
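The preprocessing steps above can be sketched in a few lines of standard-library Python. This is a minimal illustration rather than the study's pipeline: the function names are hypothetical, and fitting the median, minimum, and maximum on the training partition only is an assumption made here to avoid leakage, not something the text specifies.

```python
import random
from statistics import median

def split_patients(patient_ids, seed=0):
    """Assign each unique patient to train/test/validation (70/20/10)."""
    ids = sorted(set(patient_ids))
    random.Random(seed).shuffle(ids)
    n_train = len(ids) * 7 // 10
    n_test = len(ids) * 2 // 10
    return {
        "train": set(ids[:n_train]),
        "test": set(ids[n_train:n_train + n_test]),
        "val": set(ids[n_train + n_test:]),
    }

def fit_median_minmax(column):
    """Learn the median, min and max from the observed (non-None) values."""
    observed = [v for v in column if v is not None]
    return median(observed), min(observed), max(observed)

def transform(column, med, lo, hi):
    """Impute missing values with the median, then scale to [0, 1]."""
    span = (hi - lo) or 1.0  # guard against constant features
    return [((med if v is None else v) - lo) / span for v in column]
```

Splitting on patient identifiers rather than on encounters keeps all catheterizations of one patient in the same partition, which is what a patient-level split requires.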

MDP modeling

Each coronary catheterization encounter represented a step in our MDP model with patient features available at time of catheterization used to determine each patient’s state. The revascularization treatment decision made at each step (i.e., MT, PCI or CABG) was considered the action for the model. A composite MACE outcome at three years following each action was used to calculate the reward. The transitions in this model included moving from one catheterization to another, the patient’s death, or passing three years from the treatment without further catheterization or death.

We defined patient episodes based on these transitions, ending them either with the patient’s death or at the end of the three-year post-treatment follow-up period. Notably, if a patient underwent catheterization more than three years after the previous episode, a new episode was initiated.
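As a rough sketch of this episode logic, the function below groups one patient's catheterization dates (expressed as day offsets) into episodes. It assumes that the three-year window is measured from the most recent catheterization in the current episode and that three years equals 1095 days; both are interpretations of the text, and `build_episodes` is a hypothetical name. Termination by death is omitted for brevity.

```python
FOLLOW_UP_DAYS = 3 * 365  # assumed length of the three-year follow-up window

def build_episodes(cath_days):
    """Group catheterization days into episodes.

    A catheterization continues the current episode if it falls within the
    follow-up window of the previous one; otherwise it starts a new episode.
    """
    episodes = []
    for day in sorted(cath_days):
        if episodes and day - episodes[-1][-1] <= FOLLOW_UP_DAYS:
            episodes[-1].append(day)
        else:
            episodes.append([day])
    return episodes
```

For example, catheterizations on days 0, 400, and 2000 would yield two episodes under these assumptions, because the third procedure occurs more than three years after the second.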

State determination

Our MDP models relied on defining unique patient states and learning the outcomes from how each patient transitioned between them. This made it crucial to first define representative states based on available data sources. Since one of our focuses was on the traditional Q-learning (QL) method, we needed to determine a finite number of states that were of an appropriate size to be statistically meaningful (e.g., below 1000 states) yet adequately representative of differences observed across patient presentations. Conversely, for deep reinforcement learning methods, such as the Deep Q-network (DQN), the use of neural network-based encoders to estimate the Q-function allowed us to use the input features directly. The terminal states, in all methods, were defined by either death of the patient or by spending three years after a treatment action without death. Figure 8 illustrates the possible scenarios of transitions in our MDP model.

This figure illustrates the schema of formulating CAD treatment problem as an MDP. It shows possible transitions within each episode: a coronary angiography and its associated treatment can lead to (a) another coronary angiography (continuing the episode), (b) death from any cause (episode termination), or (c) neither of the above within the 3-year follow-up period (episode termination). Some components of this figure are AI-generated.

For the traditional QL approach, we employed the K-Means clustering algorithm to categorize patients into N subgroups, defining each cluster as a state. Given the uncertainty regarding the optimal N, we searched the space of \(N\in [2, 1000)\), using each state space in a different model. Since DQN does not require predefined states and can utilize the features directly, we used the processed features as our state space.
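The idea of treating each cluster as a discrete state can be illustrated with a small, dependency-free version of Lloyd's k-means algorithm. This is a sketch only: the study does not state which implementation was used, and the names `kmeans` and `assign_state` and the fixed iteration count are assumptions.

```python
import random

def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def assign_state(x, centroids):
    """Return the index of the nearest centroid; this index is the state."""
    return min(range(len(centroids)), key=lambda k: sq_dist(x, centroids[k]))

def kmeans(points, n_states, n_iter=20, seed=0):
    """Plain Lloyd's algorithm returning n_states centroids."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, n_states)]
    for _ in range(n_iter):
        members = [[] for _ in range(n_states)]
        for p in points:
            members[assign_state(p, centroids)].append(p)
        for k, group in enumerate(members):
            if group:  # keep the old centroid if a cluster is empty
                centroids[k] = [sum(col) / len(group) for col in zip(*group)]
    return centroids
```

Centroids would be fitted on the training partition for each candidate N, after which `assign_state` maps any encounter's feature vector to its state index.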

Reward function

The reward function of our MDP model was dominantly weighted by clinical outcomes. Patients received a reward of +1 at the end of each episode if they completed a 3-year period without experiencing an adverse event. If a patient died within the period, the reward value was adjusted based on the time they lived. We applied a negative reward of −1 for each adverse event occurring following treatment, adjusted for the number of days following the decision (up to three years), using the formula presented in Fig. 9. Under these conditions, the earlier the event occurred, the larger the penalty.

This figure depicts the action space and rewards structure and formulation in each transition of the MDP model. Terminal states refer to states where the episodes end. Patients get a positive reward between 0 and 1 in the terminal state for the portion they lived (i.e., all-cause mortality reduces this positive reward). This ensures that patients receive positive rewards for the time lived following treatments. Negative rewards from MACE apply to all states, including terminal states (e.g., CV death would further reduce the overall reward). Some components of this figure are AI-generated.

The adverse events that were considered by the model were based on a 5-point MACE49, defined as:

- Acute Myocardial Infarction (AMI)

- Stroke

- Repeat Revascularization (PCI or CABG)

- CV mortality within 3 years after treatment

- All-cause mortality within 90 days after treatment

When two or more outcomes were experienced, the earlier event was used for reward calculations.
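To make the reward structure concrete, the sketch below encodes one possible reading of it. The exact formula is given in Fig. 9 and is not reproduced in the text, so the linear time adjustment used in `mace_penalty` is purely an assumption for illustration, as are the function names and the 1095-day window. The terminal reward follows the description of a value between 0 and 1 for the portion of follow-up lived.

```python
FOLLOW_UP_DAYS = 3 * 365  # assumed length of the three-year follow-up window

def terminal_reward(days_survived):
    """Positive reward in [0, 1]: the fraction of the follow-up window lived."""
    return min(days_survived, FOLLOW_UP_DAYS) / FOLLOW_UP_DAYS

def mace_penalty(event_days):
    """Negative reward in [-1, 0] driven by the earliest adverse event.

    ASSUMPTION: the penalty decays linearly with time since the decision.
    The formula actually used in the study is the one shown in Fig. 9.
    """
    if not event_days:
        return 0.0
    first = min(event_days)  # only the earliest event is used
    return -(1.0 - min(first, FOLLOW_UP_DAYS) / FOLLOW_UP_DAYS)
```

Under this assumed form, an event on the day of treatment receives the full penalty of −1, and the penalty shrinks toward 0 as the event approaches the end of follow-up, which matches the stated property that earlier events are penalized more heavily.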

It is important to note that all MDP models trained or evaluated in this study used a discount factor of γ = 0.99. While this technically constitutes some discounting, we chose it to balance consideration of future clinical outcomes against convergence of the learning process. A value of γ = 1 would eliminate discounting entirely but could introduce convergence challenges, which we aimed to avoid given the large number of experiments conducted.
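The effect of γ = 0.99 on an episode's return can be seen in a short helper; `discounted_return` is a hypothetical name used only for this illustration.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of gamma**t * r_t over one episode, accumulated from the last step."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g
```

Because episodes in this MDP contain only a handful of catheterization steps, a reward received two steps after the first decision is still weighted by 0.99² ≈ 0.98, so the discounting is mild.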

Action space

The action space in this study consisted of three treatment options for obstructive CAD: MT only, PCI, or CABG. We assumed MT only was a basic action taken by default when PCI or CABG was not taken. Actions were always taken following coronary catheterization.

Evaluation of the policies

Evaluating policies derived from RL algorithms in de-novo patients is challenging due to safety and ethical concerns. Therefore, we used a well-known40,41,45 off-policy evaluation technique called WIS to compare the expected values gained by our recommended policies to the physicians’ policy using trajectories collected under the physicians’ policy (i.e., the decisions of physicians).

WIS is an improved version of importance sampling. While importance sampling provides an unbiased estimation of the expected reward, it suffers from high variance. WIS helps alleviate this problem through a normalization step that adjusts the weights of each sampled trajectory so that they sum to one, effectively stabilizing the estimator. This normalization not only mitigates the high variance typically associated with traditional importance sampling but also ensures that the weighted samples better represent the distribution of the target policy50,51.

We should also note that for all WIS evaluations in the current study, we used bootstrapping with 1000 samples and 1000 steps to calculate confidence intervals. Additionally, we presented WIS-driven expected rewards for both stochastic and greedy policies. The stochastic policy refers to a set of probabilities for taking each possible action (summing to 1), while the greedy policy involves only choosing the action with the highest probability (probability of 1 for that action and zero for the rest).
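A minimal sketch of WIS with a bootstrap confidence interval is shown below. It assumes each trajectory is stored as a list of `(p_target, p_behavior, reward)` tuples, where the two probabilities are those assigned by the evaluated policy and the behavior policy to the action actually taken. The data layout, function names, and percentile-based interval are assumptions, and the sketch does not attempt to reproduce the study's exact bootstrap settings.

```python
import random

def wis(trajectories, gamma=0.99):
    """Weighted importance sampling estimate of the target policy's value."""
    weights, returns = [], []
    for traj in trajectories:
        rho, g = 1.0, 0.0
        for t, (p_target, p_behavior, reward) in enumerate(traj):
            rho *= p_target / p_behavior   # cumulative importance ratio
            g += (gamma ** t) * reward     # discounted return of the episode
        weights.append(rho)
        returns.append(g)
    total = sum(weights)
    if total == 0.0:
        raise ValueError("all importance weights are zero")
    # Dividing by the sum of weights is the normalization that defines WIS.
    return sum(w * g for w, g in zip(weights, returns)) / total

def bootstrap_ci(trajectories, n_boot=1000, level=0.95, seed=0, gamma=0.99):
    """Percentile bootstrap interval obtained by resampling whole trajectories."""
    rng = random.Random(seed)
    n = len(trajectories)
    estimates = sorted(
        wis([trajectories[rng.randrange(n)] for _ in range(n)], gamma)
        for _ in range(n_boot)
    )
    lo = estimates[int((1 - level) / 2 * n_boot)]
    hi = estimates[int((1 + level) / 2 * n_boot) - 1]
    return lo, hi
```

For a greedy policy, `p_target` is 1 for the recommended action and 0 otherwise, so trajectories that deviate from the recommendation receive zero weight. This is one reason greedy estimates can rest on fewer effective samples than stochastic ones.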

Additionally, we employed PDIS and PDWIS to further evaluate the selected policies. These methods tend to have lower variance than standard importance sampling and provide additional validation of the policies.

One of the questions in RL models is whether they simply choose actions with generally better outcomes (e.g., CABG) or if they also redistribute the actions to the best-suited patients. To answer this question for our models, we shuffled the actions recommended by each model among patients randomly, thereby altering the policy without changing the distribution of actions. We then evaluated this policy using the WIS method over 100 repeats with different random seeds. Finally, we compared the average return of these shuffled policies with their corresponding original policies.
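The shuffling test can be sketched as follows. Here `evaluate` stands for any off-policy estimator applied to a list of recommended actions (for example a WIS wrapper); it is a hypothetical callable, not a function from the study's code.

```python
import random

def shuffle_policy(recommended_actions, seed):
    """Randomly reassign recommended actions among patients.

    The overall mix of actions is preserved; only who receives which
    recommendation changes.
    """
    shuffled = list(recommended_actions)
    random.Random(seed).shuffle(shuffled)
    return shuffled

def shuffled_baseline(recommended_actions, evaluate, n_repeats=100):
    """Average value of the shuffled policy over several random seeds."""
    values = [
        evaluate(shuffle_policy(recommended_actions, seed))
        for seed in range(n_repeats)
    ]
    return sum(values) / len(values)
```

If the original policy scores clearly higher than this baseline, the gain cannot be explained by the action mix alone and must come partly from matching actions to patients.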

Behavior policy

The behavior policy for this study is essentially a learned function that converts patient states into actions (i.e., revascularization decisions). The design of a behavior policy directly influences the results of the WIS, and an uncalibrated function may lead to incorrect estimations of the expected rewards. Raghu et al.52 showed that simple models, such as k-nearest neighbor (KNN), may perform better as behavior policies for off-policy evaluations, as the probabilities of the actions in these models better represent the stochastic nature of the problem.

In this study, we used k-means clustering to determine potential states of patients. These states (i.e., clusters) are similar in nature to models like KNN, with the probabilities of actions in each cluster more likely to be stochastically calibrated. We chose the optimal number of clusters (K) in k-means by evaluating the predictive accuracy of each cluster configuration. Accuracy was determined by how well the most frequent treatment action in each cluster predicted the ground-truth physician decisions. We selected the smallest K that provided the highest predictive accuracy as the optimal number of states for the physician policy. This method balanced model simplicity with predictive performance and yielded a stochastically calibrated behavior policy. We refer to this behavior policy as \(\pi_{B_{best}}\) in the rest of the paper. The number of clusters chosen, 177, was the smallest number that achieved the maximum accuracy of 69.48% in predicting the physicians’ true actions.
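The selection procedure described here can be sketched as below, assuming cluster assignments have already been computed for each candidate K. The function names are hypothetical, and scoring accuracy on the same encounters used to estimate the frequencies is a simplification; the text does not say which partition was used.

```python
from collections import Counter, defaultdict

def behavior_policy(states, actions):
    """Estimate pi_B(a | s) as the empirical action frequency in each state."""
    counts = defaultdict(Counter)
    for s, a in zip(states, actions):
        counts[s][a] += 1
    return {
        s: {a: n / sum(c.values()) for a, n in c.items()}
        for s, c in counts.items()
    }

def majority_accuracy(states, actions):
    """Share of encounters whose action matches the most frequent action
    of their cluster."""
    policy = behavior_policy(states, actions)
    hits = sum(
        max(policy[s], key=policy[s].get) == a for s, a in zip(states, actions)
    )
    return hits / len(actions)

def smallest_best_k(accuracy_by_k):
    """Smallest K that attains the maximum accuracy."""
    best = max(accuracy_by_k.values())
    return min(k for k, acc in accuracy_by_k.items() if acc == best)
```

The per-state frequencies returned by `behavior_policy` are the probabilities that enter the denominator of the importance ratios in off-policy evaluation, which is why their calibration matters.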

Learning scheme

In this study, we used various types of QL to find optimal treatment decisions, including traditional QL based on states derived from k-means clustering, DQN, and CQL, which is a special type of DQN. In this part, we will explain each method briefly:

Traditional Q-learning

We used different sets of states derived from k-means clustering and optimized 998 different Q-functions (one for each candidate number of states) using the training episodes. We drew random samples (with replacement) from patient episodes and calculated the Q-function using the QL algorithm with at least 1,000,000 steps (we increased this number as the number of states increased)32,40.
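A compact sketch of offline tabular Q-learning over logged transitions is given below. It samples individual `(s, a, r, s_next, done)` tuples rather than whole episodes, and the learning rate `alpha` and default step count are illustrative assumptions; neither is taken from the study.

```python
import random

def q_learning(transitions, n_states, n_actions, n_steps=100_000,
               alpha=0.1, gamma=0.99, seed=0):
    """Offline tabular Q-learning over logged (s, a, r, s_next, done) tuples."""
    rng = random.Random(seed)
    q = [[0.0] * n_actions for _ in range(n_states)]
    for _ in range(n_steps):
        s, a, r, s_next, done = rng.choice(transitions)  # sample with replacement
        # Bootstrapped target; terminal transitions have no future value.
        target = r if done else r + gamma * max(q[s_next])
        q[s][a] += alpha * (target - q[s][a])
    return q

def greedy_policy(q):
    """Index of the highest-valued action in each state."""
    return [max(range(len(row)), key=row.__getitem__) for row in q]
```

State-action pairs that never appear in the logged data keep their initial value of zero in this sketch, so how the table is initialized affects which rare actions look attractive.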

Then, we determined the action with the highest Q-value for each state to extract the optimal policy. One of the advantages of QL based on a small number of states is its ease of use and interpretability. With this method, we can analyze the characteristics of each state (a cluster of patients) and identify the important contributing factors that lead to a specific action being chosen as optimal. Inspired by the RL interpretation methods of Zhang et al.53, we fitted an XGBoost model to map the features to their associated clusters and calculated Shapley values to represent the importance of the features.

One issue with QL methods is the potential overestimation of value for rare actions in certain states. To mitigate this and make the policies safer, aligning them closely with physicians’ policies is advisable. A practical approach is to use a weighted average of both the traditional and physicians’ policies to avoid an overly optimistic prediction for rare state-actions. In our study, we executed this by calculating a simple average of the traditional and physicians’ policies for our best model.
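The averaging step can be written as a per-state convex combination of two action distributions. In this sketch, `weight=0.5` corresponds to the simple average described above, and the function name is hypothetical.

```python
def blend_policies(pi_rl, pi_physician, weight=0.5):
    """Per-state convex combination of two action distributions.

    Each policy is a list of rows (one per state), and each row holds the
    probabilities of the available actions in a fixed order.
    """
    return [
        [weight * p + (1 - weight) * b for p, b in zip(rl_row, phys_row)]
        for rl_row, phys_row in zip(pi_rl, pi_physician)
    ]
```

Since both inputs are valid probability distributions, each blended row still sums to one, and an action the physicians rarely chose in a state can receive at most half of the probability mass there.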

Deep Q-network

DQN can be considered an extension of traditional Q-learning that uses neural networks to estimate Q-values instead of a Q-matrix. In our study, we utilized this approach to handle the complexity of high-dimensional state spaces. The neural network receives input features representing states and predicts Q-values, which are compared to the target Q-values calculated from the Q-learning formula through a loss function. Gradient descent is then used to update the network’s parameters (θ). Additionally, DQN maintains a copy of the main network, updated less frequently, to calculate the target Q-values, enhancing the stability of the model33,54. We used a feed-forward neural network structure and tuned the hyperparameters using grid search. The details of the hyperparameter space are provided in Supplementary Table 3. Similar to QL, the optimal policy was calculated by choosing the action that maximized the Q-values for each state.
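The target computation described above can be illustrated without a deep learning framework. In the sketch below, `q` and `target_q` are hypothetical callables that map a state to a list of Q-values (standing in for the main and target networks), and the mean squared error loss is an assumption, since the text does not name the loss function used.

```python
def td_targets(batch, target_q, gamma=0.99):
    """Targets y = r + gamma * max_a' Q_target(s', a'), or y = r at episode end."""
    return [
        r if done else r + gamma * max(target_q(s_next))
        for (_, _, r, s_next, done) in batch
    ]

def td_loss(batch, q, target_q, gamma=0.99):
    """Mean squared TD error between Q(s, a) and the frozen-network targets."""
    targets = td_targets(batch, target_q, gamma)
    errors = [(q(s)[a] - y) ** 2 for (s, a, _, _, _), y in zip(batch, targets)]
    return sum(errors) / len(errors)
```

In a full implementation this loss would be differentiated with respect to the parameters θ of the main network only, while `target_q` is refreshed from the main network at a lower frequency.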

One of the key advantages of DQN over traditional QL is its ability to generalize across similar states due to the function approximation provided by neural networks. This generalization capability allows DQN to scale effectively to larger state spaces and more complex environments, facilitating the discovery of nuanced patterns and strategies within the data.

Conservative Q-learning

CQL is a special type of DQN in which a term is added to the loss function to discourage the model from overestimating Q-values of state-action pairs that were not observed in past experiences. This modification makes CQL well suited to offline RL, where rare state-action pairs cannot be explored directly and the model may overestimate their expected reward when trained on past experiences. The CQL update formula is very similar to that of DQN; the difference is a regularization term that lower-bounds the Q-function. This regularization term induces conservatism (i.e., acting close to the behavior policy) and is controlled by the parameter α. As α increases, the new policy becomes more similar to the behavior policy54,55.
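For discrete actions, the regularizer proposed by Kumar et al.55 is commonly written as the log-sum-exp of the Q-values in a state minus the Q-value of the logged action. The sketch below computes that term for a batch and adds it to a precomputed TD loss. The function names and the additive weighting shown are illustrative, and libraries may scale the two terms differently.

```python
import math

def logsumexp(values):
    """Numerically stable log(sum(exp(v))) for a list of numbers."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def cql_penalty(q_rows, actions):
    """Mean of logsumexp_a Q(s, a) - Q(s, a_logged) over a batch.

    q_rows[i] holds the Q-values of all actions for sample i, and
    actions[i] is the index of the action the physician actually took.
    """
    gaps = [logsumexp(row) - row[a] for row, a in zip(q_rows, actions)]
    return sum(gaps) / len(gaps)

def cql_loss(td_loss_value, q_rows, actions, alpha=1.0):
    """TD loss plus the alpha-weighted conservative penalty."""
    return td_loss_value + alpha * cql_penalty(q_rows, actions)
```

The penalty is small when the logged action already has the highest Q-value in its state and grows when unobserved actions are valued above it, so a larger α pulls the greedy policy toward the physicians' choices.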

Sensitivity analyses

We conducted sensitivity analyses by changing the imputation algorithm and adjusting different reward settings. The original imputation method was replaced with multiple imputation by chained equations using random forests. Additionally, we modified our reward functions with the following changes to the MACE definition:

1. Removing repeat revascularization.

2. Removing repeat revascularization and 90-day all-cause mortality.

3. Retaining only 90-day all-cause mortality.

Figure 10 summarizes the methodology employed in this study to train and evaluate different RL models for optimizing CAD treatments.

This figure summarizes our methodology. Patient data, physician decisions, and subsequent outcomes were collected from various data sources. Using these sources, we structured the data as an MDP and optimized it using traditional Q-Learning, Deep Q-Network, and Conservative Q-Learning algorithms. The optimal policy was then compared to the physician decision model using off-policy evaluation. Some components of this figure are AI-generated.

Data availability

The patient datasets used in this study are not publicly available. However, they can be accessed through appropriate institutional approval. The models and policies are available upon request from the corresponding author.

Code availability

The code used for generating the results of this study and the RL4CAD model weights are available at: https://github.com/data-intelligence-for-health-lab/RL4CAD.

References

Shahjehan, R. D. & Bhutta, B. S. Coronary Artery Disease. In: StatPearls (StatPearls Publishing, Treasure Island (FL), 2022).

Ralapanawa, U. & Sivakanesan, R. Epidemiology and the Magnitude of Coronary Artery Disease and Acute Coronary Syndrome: A Narrative Review. J. Epidemiol. Glob. Health 11, 169–177 (2021).

Ferrari, A. J. et al. Global incidence, prevalence, years lived with disability (YLDs), disability-adjusted life-years (DALYs), and healthy life expectancy (HALE) for 371 diseases and injuries in 204 countries and territories and 811 subnational locations, 1990–2021: a systematic analysis for the Global Burden of Disease Study 2021. Lancet 403, 2133–2161 (2024).

Garrone, P. et al. Quantitative Coronary Angiography in the Current Era: Principles and Applications. J. Interventional Cardiol. 22, 527–536 (2009).

Magro, M., Garg, S. & Serruys, P. W. Revascularization treatment of stable coronary artery disease. Expert Opin. Pharmacother. 12, 195–212 (2011).

Deb, S. et al. Coronary artery bypass graft surgery vs percutaneous interventions in coronary revascularization: a systematic review. JAMA 310, 2086–2095 (2013).

Boden, W. E. et al. Optimal medical therapy with or without PCI for stable coronary disease. N. Engl. J. Med. 356, 1503–1516 (2007).

A Randomized Trial of Therapies for Type 2 Diabetes and Coronary Artery Disease. N. Engl. J. Med. 360, 2503–2515 (2009).

Sculpher, M. Coronary angioplasty versus medical therapy for angina. Health service costs based on the second Randomized Intervention Treatment of Angina (RITA-2) trial. Eur. Heart J. 23, 1291–1300 (2002).

Trial of invasive versus medical therapy in elderly patients with chronic symptomatic coronary-artery disease (TIME): a randomised trial. Lancet 358, 951–957 (2001).

Hueb, W. et al. The medicine, angioplasty, or surgery study (MASS-II): a randomized, controlled clinical trial of three therapeutic strategies for multivessel coronary artery disease: one-year results. J. Am. Coll. Cardiol. 43, 1743–1751 (2004).

Erne, P. et al. Effects of Percutaneous Coronary Interventions in Silent Ischemia After Myocardial Infarction: The SWISSI II Randomized Controlled Trial. JAMA 297, 1985–1991 (2007).

Gaudino, M., Andreotti, F. & Kimura, T. Current concepts in coronary artery revascularisation. Lancet 401, 1611–1628 (2023).

Lim, G. B. Cost-effectiveness of CABG surgery versus PCI in complex CAD. Nat. Rev. Cardiol. 11, 557–557 (2014).

Welt, F. G. P. CABG versus PCI — End of the Debate? N. Engl. J. Med. 386, 185–187 (2022).

Chaitman, B. R. et al. Coronary Artery Surgery Study (CASS): Comparability of 10 year survival in randomized and randomizable patients. J. Am. Coll. Cardiol. 16, 1071–1078 (1990).

Spadaccio, C. & Benedetto, U. Coronary artery bypass grafting (CABG) vs. percutaneous coronary intervention (PCI) in the treatment of multivessel coronary disease: quo vadis? —a review of the evidences on coronary artery disease. Ann. Cardiothorac. Surg. 7, 506–515 (2018).

Serruys, P. W. et al. Percutaneous coronary intervention versus coronary-artery bypass grafting for severe coronary artery disease. N. Engl. J. Med. 360, 961–972 (2009).

Farkouh, M. E. et al. Design of the Future REvascularization Evaluation in patients with Diabetes mellitus: Optimal management of Multivessel disease (FREEDOM) Trial. Am. Heart J. 155, 215–223 (2008).

Sianos, G. et al. The SYNTAX Score: an angiographic tool grading the complexity of coronary artery disease. EuroIntervention 1, 219–227 (2005).

Farooq, V. et al. Anatomical and clinical characteristics to guide decision making between coronary artery bypass surgery and percutaneous coronary intervention for individual patients: development and validation of SYNTAX score II. Lancet 381, 639–650 (2013).

Campos, C. M. et al. Risk stratification in 3-vessel coronary artery disease: Applying the SYNTAX Score II in the Heart Team Discussion of the SYNTAX II trial. Catheter Cardiovasc. Inter. 86, E229–E238 (2015).

Campos, C. M. et al. Validity of SYNTAX score II for risk stratification of percutaneous coronary interventions: A patient-level pooled analysis of 5,433 patients enrolled in contemporary coronary stent trials. Int. J. Cardiol. 187, 111–115 (2015).

Brener, S. J. et al. The SYNTAX II Score Predicts Mortality at 4 Years in Patients Undergoing Percutaneous Coronary Intervention. J. Invasive Cardiol. 30, 290–294 (2018).

Alizadehsani, R. et al. Machine learning-based coronary artery disease diagnosis: A comprehensive review. Comput. Biol. Med. 111, 103346 (2019).

Abdar, M. et al. A new machine learning technique for an accurate diagnosis of coronary artery disease. Comput. Methods Prog. Biomed. 179, 104992 (2019).

Motwani, M. et al. Machine learning for prediction of all-cause mortality in patients with suspected coronary artery disease: a 5-year multicentre prospective registry analysis. Eur. Heart J. 38, 500–507 (2017).

Johnson, K. M., Johnson, H. E., Zhao, Y., Dowe, D. A. & Staib, L. H. Scoring of Coronary Artery Disease Characteristics on Coronary CT Angiograms by Using Machine Learning. Radiology 292, 354–362 (2019).

Wang, J. et al. Risk Prediction of Major Adverse Cardiovascular Events Occurrence Within 6 Months After Coronary Revascularization: Machine Learning Study. JMIR Med. Inform. 10, e33395 (2022).

Ninomiya, K. et al. Can Machine Learning Aid the Selection of Percutaneous vs Surgical Revascularization? J. Am. Coll. Cardiol. 82, 2113–2124 (2023).

Bertsimas, D., Orfanoudaki, A. & Weiner, R. B. Personalized treatment for coronary artery disease patients: a machine learning approach. Health Care Manag. Sci. 23, 482–506 (2020).

Sutton, R. S. Reinforcement Learning (Springer Science & Business Media, 1992).

Mnih, V. et al. Human-level control through deep reinforcement learning. Nature 518, 529–533 (2015).

Yu, C., Liu, J., Nemati, S. & Yin, G. Reinforcement Learning in Healthcare: A Survey. ACM Comput. Surv. 55, 1–36 (2023).

Zhao, Y., Kosorok, M. R. & Zeng, D. Reinforcement learning design for cancer clinical trials. Stat. Med. 28, 3294–3315 (2009).

Eckardt, J.-N., Wendt, K., Bornhäuser, M. & Middeke, J. M. Reinforcement Learning for Precision Oncology. Cancers 13, 4624 (2021).

Moreau, G., François-Lavet, V., Desbordes, P. & Macq, B. Reinforcement Learning for Radiotherapy Dose Fractioning Automation. Biomedicines 9, 214 (2021).

Ngo, P. D., Wei, S., Holubová, A., Muzik, J. & Godtliebsen, F. Control of Blood Glucose for Type-1 Diabetes by Using Reinforcement Learning with Feedforward Algorithm. Computational Math. Methods Med. 2018, e4091497 (2018).

Parbhoo, S., Bogojeska, J., Zazzi, M., Roth, V. & Doshi-Velez, F. Combining Kernel and Model Based Learning for HIV Therapy Selection. AMIA Jt Summits Transl. Sci. Proc. 2017, 239–248 (2017).

Komorowski, M., Celi, L. A., Badawi, O., Gordon, A. C. & Faisal, A. A. The Artificial Intelligence Clinician learns optimal treatment strategies for sepsis in intensive care. Nat. Med. 24, 1716–1720 (2018).

Peine, A. et al. Development and validation of a reinforcement learning algorithm to dynamically optimize mechanical ventilation in critical care. npj Digit. Med. 4, 1–12 (2021).

Prasad, N., Cheng, L.-F., Chivers, C., Draugelis, M. & Engelhardt, B. E. A Reinforcement Learning Approach to Weaning of Mechanical Ventilation in Intensive Care Units. In 33rd Conference on Uncertainty in Artificial Intelligence (2017).

Ghali, W. A. & Knudtson, M. L. Overview of the Alberta Provincial Project for Outcome Assessment in Coronary Heart Disease. On behalf of the APPROACH investigators. Can. J. Cardiol. 16, 1225–1230 (2000).

Southern, D. A. et al. Expanding the impact of a longstanding Canadian cardiac registry through data linkage: challenges and opportunities. Int. J. Popul Data Sci. 3, 441 (2018).

Shirali, A., Schubert, A. & Alaa, A. Pruning the Way to Reliable Policies: A Multi-Objective Deep Q-Learning Approach to Critical Care. IEEE J. Biomed. Health Inform. 28, 6268–6279 (2024).

Stone, G. W. et al. Intravascular imaging-guided coronary drug-eluting stent implantation: an updated network meta-analysis. Lancet 403, 824–837 (2024).

Collins, G. S. et al. TRIPOD+AI statement: updated guidance for reporting clinical prediction models that use regression or machine learning methods. BMJ 385, e078378 (2024).

Ghasemi, P. & Lee, J. Unsupervised Feature Selection to Identify Important ICD-10 and ATC Codes for Machine Learning on a Cohort of Patients With Coronary Heart Disease: Retrospective Study. JMIR Med. Inform. 12, e52896 (2024).

Bosco, E., Hsueh, L., McConeghy, K. W., Gravenstein, S. & Saade, E. Major adverse cardiovascular event definitions used in observational analysis of administrative databases: a systematic review. BMC Med. Res. Methodol. 21, 241 (2021).

Jiang, N. & Li, L. Doubly Robust Off-policy Value Evaluation for Reinforcement Learning. Int. Conf. Mach. Learn. 48, 652–661 (2016).

Gottesman, O. et al. Evaluating Reinforcement Learning Algorithms in Observational Health Settings. Preprint at https://arxiv.org/abs/1805.12298 (2018).

Raghu, A. et al. Behaviour Policy Estimation in Off-Policy Policy Evaluation: Calibration Matters. Preprint at http://arxiv.org/abs/1807.01066 (2018).

Zhang, K. et al. An interpretable RL framework for pre-deployment modeling in ICU hypotension management. npj Digit. Med. 5, 1–10 (2022).

Seno, T. & Imai, M. d3rlpy: An Offline Deep Reinforcement Learning Library. J. Mach. Learn. Res. 23, 1–20 (2022).

Kumar, A., Zhou, A., Tucker, G. & Levine, S. Conservative Q-Learning for Offline Reinforcement Learning. Adv. Neural. Inf. Process. Syst. 33, 1179–1191 (2020).

Acknowledgements

This study was supported by the Libin Cardiovascular Institute PhD Graduate Scholarship, the Alberta Innovates Graduate Scholarship, an AICE-Concepts Grant from Alberta Innovates (212200473), and a Project Grant from the Canadian Institutes of Health Research (PJT 178027). We would like to express our sincere appreciation to Dr. Dina Labib for her invaluable assistance in determining the useful features for this study. We extend our gratitude to the APPROACH team for their efforts in collecting and providing access to the APPROACH Registry data, which made this study possible.

Author information

Contributions

P.G. conducted the study by designing the methodology, generating and analyzing the results, and writing the manuscript. M.G. and J.A.W. contributed to study design and provided clinical, data, and methodological insights. D.A.S. and B.L. acquired the data used in this study. J.L. conceived the study, acquired the data, and oversaw the study. All authors critically revised and approved the manuscript.

Ethics declarations

Competing interests

J.L. is a co-founder and a major shareholder of Symbiotic AI, Inc. J.A.W. is a co-founder and a major shareholder of Cohesic, Inc. All other authors declare no financial or non-financial competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Ghasemi, P., Greenberg, M., Southern, D.A. et al. Personalized decision making for coronary artery disease treatment using offline reinforcement learning. npj Digit. Med. 8, 99 (2025). https://doi.org/10.1038/s41746-025-01498-1

DOI: https://doi.org/10.1038/s41746-025-01498-1