Abstract

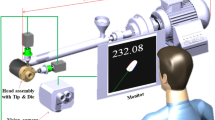

Medical robots have demonstrated the ability to manipulate percutaneous instruments into soft tissue anatomy while working beyond the limits of human perception and dexterity. Robotic technologies further offer the promise of autonomy in carrying out critical tasks with minimal supervision when resources are limited. Here, we present a portable robotic device capable of introducing needles and catheters into deformable tissues such as blood vessels to draw blood or deliver fluids autonomously. Robotic cannulation is driven by predictions from a series of deep convolutional neural networks that encode spatiotemporal information from multimodal image sequences to guide real-time servoing. We demonstrate, through imaging and robotic tracking studies in volunteers, the ability of the device to segment, classify, localize and track peripheral vessels in the presence of anatomical variability and motion. We then evaluate robotic performance in phantom and animal models of difficult vascular access and show that the device can improve success rates and procedure times compared to manual cannulations by trained operators, particularly in challenging physiological conditions. These results suggest the potential for autonomous systems to outperform humans on complex visuomotor tasks, and demonstrate a step in the translation of such capabilities into clinical use.

Data availability

Test datasets for evaluating source code are available at https://github.com/alvchn/nmi-vasc-robot. Public data used in the study are available in the SPLab Ultrasound Image Database (http://splab.cz/wp-content/uploads/2014/05/ARTERY_TRANSVERSAL.zip and http://splab.cz/wp-content/uploads/2013/11/us_images.zip), the PICMUS Database (https://www.creatis.insa-lyon.fr/Challenge/IEEE_IUS_2016/download) and the SPLab Tecnocampus Hand Image Database (http://splab.cz/en/download/databaze/tecnocampus-hand-image-database).

Code availability

Source code is available from the GitHub repository: https://github.com/alvchn/nmi-vasc-robot. Use of the code is subject to a limited right to use for academic, governmental or not-for-profit research. Use of the code for commercial or clinical purposes is prohibited in the absence of a Commercial License Agreement from Rutgers, The State University of New Jersey. References to open-source software used in the study are provided within the paper.

Acknowledgements

We thank J. Leiphemer and N. DeMaio for their assistance and support in designing and implementing the device, E. Pantin and A. Davidovich for support in the human imaging studies and phantom studies, including review of imaging data and overall clinical guidance, E. Yurkow, D. Adler, M. Lo and G. Yarmush for assistance in the animal studies, and B. Lee for code used in our deep learning approach. Research reported in this publication was supported by the National Institute of Biomedical Imaging and Bioengineering of the National Institutes of Health under awards R01-EB020036 and T32-GM008339. This work was also supported by a National Institutes of Health Ruth L. Kirschstein Fellowship F31-EB018191 awarded to A.I.C. and a National Science Foundation Fellowship DGE-0937373 awarded to M.L.B. The authors acknowledge additional support from the Rutgers University School of Engineering, Rutgers University Department of Biomedical Engineering and the Robert Wood Johnson University Hospital.

Author information

Contributions

A.I.C. and M.L.B. designed the system, developed the algorithms and annotation software, and implemented the software and hardware. A.I.C. led execution of the imaging, in vitro and in vivo studies and analysed the primary data. M.L.Y. and T.J.M. provided the general direction for the project and provided valuable comments on the system design and manuscript. Correspondence and requests for materials should be addressed to A.I.C.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Chen, A.I., Balter, M.L., Maguire, T.J. et al. Deep learning robotic guidance for autonomous vascular access. Nat Mach Intell 2, 104–115 (2020). https://doi.org/10.1038/s42256-020-0148-7

DOI: https://doi.org/10.1038/s42256-020-0148-7
