Abstract

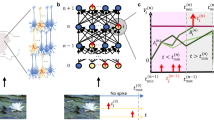

Spike-based neuromorphic hardware promises to reduce the energy consumption of image classification and other deep-learning applications, particularly on mobile phones and other edge devices. However, direct training of deep spiking neural networks is difficult, and previous methods for converting trained artificial neural networks into spiking neural networks were inefficient because the neurons had to emit too many spikes. We show that a substantially more efficient conversion arises when the spiking neuron model is optimized for this purpose, so that information is conveyed not only by how many spikes a neuron emits but also by when it emits them. This raises the accuracy achievable for image classification with spiking neurons, and the resulting networks need on average just two spikes per neuron to classify an image. In addition, our conversion method improves the latency and throughput of the resulting spiking networks.
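The central idea of the abstract is that the time step at which a spike occurs can carry information. A toy simulation can illustrate this. The sketch below assumes a discrete-time neuron with a per-step threshold `T(t)`, a per-step reset amount `h(t)` and a per-step output weight `d(t)`, loosely modelled on the FS-neurons named in the code repository. The parameter names, the update rule (including the `>=` threshold test) and the hand-picked binary parameter values are illustrative assumptions, not the authors' code or trained values.

```python
from typing import List, Sequence, Tuple


def fs_neuron(x: float,
              T: Sequence[float],
              h: Sequence[float],
              d: Sequence[float]) -> Tuple[float, List[int]]:
    """Simulate one few-spikes (FS) neuron for K = len(T) time steps.

    Assumed dynamics (illustrative sketch, not the authors' implementation):
      v(1)   = x                       # membrane potential starts at the input
      z(t)   = 1 if v(t) >= T(t) else 0
      v(t+1) = v(t) - h(t) * z(t)      # reset by subtraction
      y      = sum_t d(t) * z(t)       # a spike at step t is worth d(t)
    """
    if not (len(T) == len(h) == len(d)):
        raise ValueError("T, h and d must all have length K")
    v = x
    y = 0.0
    spikes: List[int] = []
    for T_t, h_t, d_t in zip(T, h, d):
        z = 1 if v >= T_t else 0   # spike if the potential reaches the threshold
        v -= h_t * z               # subtract h(t) only when a spike occurred
        y += d_t * z               # the spike's value depends on its time step
        spikes.append(z)
    return y, spikes


# Hypothetical hand-picked parameters (not trained ones): with
# T = h = d = 2^(K - t), the neuron encodes its input in binary, so the
# timing of each spike determines how much it contributes to the output.
K = 4
powers = [2.0 ** (K - t) for t in range(1, K + 1)]  # [8.0, 4.0, 2.0, 1.0]

for x in (13.0, 5.7, -3.0, 20.0):
    y, spikes = fs_neuron(x, powers, powers, powers)
    print(f"x={x:5.1f} -> y={y:4.1f}, spikes={spikes}")
```

With these binary parameters, the input 13 is transmitted with three spikes (steps 1, 2 and 4), whereas a rate code with unit-weight spikes would need 13. Negative inputs produce no spikes, which gives a ReLU-like response, and inputs above 15 saturate. In the method described above, the per-step parameters are optimized rather than set by hand, so this sketch is only an illustration of why spike timing matters.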

Data availability

Both ImageNet (ref. 37) and CIFAR10 (ref. 13) are publicly available datasets. No additional datasets were generated or analysed during the current study. The data for the spike response depicted in Figure 1 were published by the Allen Institute for Brain Science in 2015 (Allen Cell Types Database; available at https://celltypes.brain-map.org/experiment/electrophysiology/587770251). The implementation and pretrained weights of EfficientNet-B7 and the ResNets are available at https://github.com/tensorflow/tpu/tree/master/models/official/efficientnet.

Code availability

The code on which this work is based is publicly available (https://github.com/christophstoeckl/FS-neurons; https://zenodo.org/record/4326749#.YCUuchP7S3I). It is also available as a Code Ocean compute capsule (https://codeocean.com/capsule/7743810/tree).

References

García-Martín, E., Rodrigues, C. F., Riley, G. & Grahn, H. Estimation of energy consumption in machine learning. J. Parallel Distrib. Comput. 134, 75–88 (2019).

Ling, J. Power of a human brain. The Physics Factbook https://hypertextbook.com/facts/2001/JacquelineLing.shtml (2001).

Bellec, G. et al. A solution to the learning dilemma for recurrent networks of spiking neurons. Nat. Commun. 11, 3625 (2020).

Rueckauer, B., Lungu, I. A., Hu, Y., Pfeiffer, M. & Liu, S. C. Conversion of continuous-valued deep networks to efficient event-driven networks for image classification. Front. Neurosci. 11, 682 (2017).

Tan, M. & Le, Q. EfficientNet: rethinking model scaling for convolutional neural networks. In Proceedings of the 36th International Conference on Machine Learning (eds Chaudhuri, K. & Salakhutdinov, R.) 6105–6114 (2019).

Hendrycks, D. & Gimpel, K. Gaussian error linear units (GELUs). Preprint at http://arxiv.org/abs/1606.08415 (2016).

Maass, W. & Natschläger, T. in Computational Neuroscience 221–226 (Springer, 1998).

Thorpe, S., Delorme, A. & Van Rullen, R. Spike-based strategies for rapid processing. Neural Networks 14, 715–725 (2001).

Kheradpisheh, S. R. & Masquelier, T. S4NN: temporal backpropagation for spiking neural networks with one spike per neuron. Int. J. Neural Syst. 30, 2050027 (2020).

Russakovsky, O. et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vision 115, 211–252 (2015).

Van Horn, G. et al. Building a bird recognition app and large scale dataset with citizen scientists: the fine print in fine-grained dataset collection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 595–604 (2015).

Zoph, B. & Le, Q. V. Searching for activation functions. In 6th International Conference on Learning Representations, ICLR 2018 – Workshop Track Proceedings 1–13 (2018).

Krizhevsky, A. et al. Learning Multiple Layers of Features from Tiny Images (2009).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 770–778 (2016).

Furber, S. B., Galluppi, F., Temple, S. & Plana, L. A. The SpiNNaker project. Proc. IEEE 102, 652–665 (2014).

Davies, M. et al. Loihi: a neuromorphic manycore processor with on-chip learning. IEEE Micro 38, 82–99 (2018).

Woźniak, S., Pantazi, A., Bohnstingl, T. & Eleftheriou, E. Deep learning incorporating biologically inspired neural dynamics and in-memory computing. Nat. Mach. Intell. 2, 325–336 (2020).

Billaudelle, S. et al. Versatile emulation of spiking neural networks on an accelerated neuromorphic substrate. In 2020 IEEE International Symposium on Circuits and Systems (ISCAS) 1–5 (2020).

Sterling, P. & Laughlin, S. Principles of Neural Design (MIT Press, 2015).

Gouwens, N. W. et al. Classification of electrophysiological and morphological neuron types in the mouse visual cortex. Nat. Neurosci. 22, 1182–1195 (2019).

Bakken, T. E. et al. Evolution of cellular diversity in primary motor cortex of human, marmoset monkey, and mouse. Preprint at bioRxiv https://doi.org/10.1101/2020.03.31.016972 (2020).

Gerstner, W., Kistler, W. M., Naud, R. & Paninski, L. Neuronal Dynamics: From Single Neurons to Networks and Models of Cognition (Cambridge Univ. Press, 2014).

Harris, K. D. et al. Spike train dynamics predicts theta-related phase precession in hippocampal pyramidal cells. Nature 417, 738–741 (2002).

Markram, H. et al. Interneurons of the neocortical inhibitory system. Nat. Rev. Neurosci. 5, 793–807 (2004).

Kopanitsa, M. V. et al. A combinatorial postsynaptic molecular mechanism converts patterns of nerve impulses into the behavioral repertoire. Preprint at bioRxiv https://doi.org/10.1101/500447 (2018).

Zhang, Q. et al. Recent advances in convolutional neural network acceleration. Neurocomputing 323, 37–51 (2019).

Hu, J., Shen, L. & Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 7132–7141 (2018).

Stöckl, C. & Maass, W. Recognizing images with at most one spike per neuron. Preprint at http://arxiv.org/abs/2001.01682 (2019).

Parekh, O., Phillips, C. A., James, C. D. & Aimone, J. B. Constant-depth and subcubic-size threshold circuits for matrix multiplication. In Proceedings of the 30th on Symposium on Parallelism in Algorithms and Architectures 67–76 (2018).

Rueckauer, B. & Liu, S.-C. Conversion of analog to spiking neural networks using sparse temporal coding. In 2018 IEEE International Symposium on Circuits and Systems (ISCAS) 1–5 (IEEE, 2018).

Maass, W. Fast sigmoidal networks via spiking neurons. Neural Comput. 9, 279–304 (1997).

Frady, E. P. et al. Neuromorphic nearest neighbor search using Intel’s Pohoiki Springs. In NICE ’20: Neuro-inspired Computational Elements Workshop (eds Okandan, M. & Aimone, J. B.) 23:1–23:10 (ACM, 2020).

Yousefzadeh, A. et al. Conversion of synchronous artificial neural network to asynchronous spiking neural network using sigma-delta quantization. In 2019 IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS) 81–85 (IEEE, 2019).

Sengupta, A., Ye, Y., Wang, R., Liu, C. & Roy, K. Going deeper in spiking neural networks: VGG and residual architectures. Front. Neurosci. 13, 95 (2019).

Lee, C., Sarwar, S. S., Panda, P., Srinivasan, G. & Roy, K. Enabling spike-based backpropagation for training deep neural network architectures. Front. Neurosci. 14, 119 (2020).

Rathi, N., Srinivasan, G., Panda, P. & Roy, K. Enabling deep spiking neural networks with hybrid conversion and spike timing dependent backpropagation. In International Conference on Learning Representations (2020).

Deng, J. et al. ImageNet: a large-scale hierarchical image database. In 2009 IEEE Conference on Computer Vision and Pattern Recognition 248–255 (IEEE, 2009).

Han, B., Srinivasan, G. & Roy, K. RMP-SNN: residual membrane potential neuron for enabling deeper high-accuracy and low-latency spiking neural network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 13558–13567 (2020).

Acknowledgements

We thank F. Scherr for helpful discussions. We thank T. Bohnstingl, E. Eleftheriou, S. Furber, C. Pehle, P. Plank and J. Schemmel for advice on implementing FS-neurons in various types of neuromorphic hardware. This research was partially supported by the Human Brain Project of the European Union (grant agreement number 785907).

Author information

Contributions

C.S. conceived the main idea. C.S. and W.M. designed the model and planned the experiments. C.S. carried out the experiments. C.S. and W.M. wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Peer review information Nature Machine Intelligence thanks James Aimone, Tara Hamilton and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data

Extended Data Fig. 1

Comparison with other conversion methods. We did not find information regarding the number of time steps used for the BinaryConnect model (ref. 4).

About this article

Cite this article

Stöckl, C., Maass, W. Optimized spiking neurons can classify images with high accuracy through temporal coding with two spikes. Nat Mach Intell 3, 230–238 (2021). https://doi.org/10.1038/s42256-021-00311-4
