Abstract

Objective

This case study illustrates the use of natural language processing for identifying administrative task categories, prevalence, and shifts necessitated by a major event (the COVID-19 [coronavirus disease 2019] pandemic) from user-generated data stored as free text in a task management system for a multisite mental health practice with 40 clinicians and 13 administrative staff members.

Materials and Methods

Structural topic modeling was applied to 7079 task sequences from 13 administrative users of a Health Insurance Portability and Accountability Act–compliant task management platform. Context was obtained through interviews with an expert panel.

Results

Ten task definitions spanning 3 major categories were identified, and their prevalence estimated. Significant shifts in task prevalence due to the pandemic were detected for tasks like billing inquiries to insurers, appointment cancellations, patient balances, and new patient follow-up.

Conclusions

Structural topic modeling effectively detects task categories, prevalence, and shifts, providing opportunities for healthcare providers to reconsider staff roles and to optimize workflows and resource allocation.

Keywords: COVID-19, task management, natural language processing, topic modeling, mental health

INTRODUCTION

Administrative tasks in health care continue to increase in number and scope, leading to decreased system effectiveness1,2 and significantly higher costs.3,4 Administrative task studies have been primarily conducted via observation and surveys,5,6 in part because tasks are viewed as a “black box,”2,7 often managed via disparate systems such as notebooks, sticky notes, or Excel files that are difficult to track and utilize to describe the nature of the work.

Although still a novelty in health care, task management systems allow users to enter, track, and assign tasks electronically, opening up new opportunities to study the nature and scope of administrative burden in healthcare settings. Some task management tools are available through existing electronic health record (EHR) systems. Information recorded through the use of such tools, such as audit logs of sequences of system-predefined events (eg, placing medication orders and assigning diagnoses), can assist hospitals in optimizing workflows by categorizing tasks and evaluating task complexity and efficiency.8,9 There are also SaaS (software as a service)-based task management systems that interface with EHR systems to provide additional capabilities or offer stand-alone collaboration platforms with the ability to create more complex workflows and accountability in managing all the administrative work of a hospital or practice. The use of task management tools can enable more efficient task completion10 and outline a pathway for task automation, reducing task burden.11–13

In addition to selection from menus of predefined events, some EHR and task management systems provide users with the ability to enter free-text data describing tasks that need to be completed. Such user-generated (also referred to as “person-generated”)14 text data can be a rich resource for deeper understanding of day-to-day operations and for observing previously “invisible” tasks without a filter imposed by the task management system or the researcher. This, in turn, allows for approaching task management from the point of view of user-centered design in which challenging or error-prone workflows, eg, in registration, scheduling, billing, and collections, can be identified. Systems can then be modified to improve processes and optimize resource allocation. Because the volume of text data is typically very large, natural language processing (NLP) algorithms can be used to aid the learning from existing workflows.

We present the case study of a mid-sized mental health practice to explore the use of a probabilistic NLP method, structural topic modeling (STM),15 on de-identified user-generated data as a tool for understanding (1) categories of administrative tasks that are performed; (2) prevalence of administrative tasks (ie, expected proportion, or relative volume, of tasks in the text corpus of descriptions generated by task management system users); and (3) task shifts in response to a major event (how task prevalence differs pre–COVID-19 [coronavirus disease 2019] and during the first 3 months of the COVID-19 pandemic). We provide context for the findings from the STM analysis through interviews with a panel of experts. The disruption of the COVID-19 pandemic presents a compelling setting for examining how external factors may influence task patterns. The increase in people seeking mental health treatment because of symptoms of anxiety and depression during the first 3 months of the COVID-19 period due to isolation16 and changes from in-person care to telehealth17–19 may have contributed to changes in mental health practice administrative task patterns.

While COVID-19 is a recent example of an event that significantly impacted task patterns, healthcare organizations regularly experience organizational changes (eg, leadership and staff changes) and institutional disruptions (eg, major insurance coverage updates) that influence task patterns. As this case study will illustrate, practices that successfully operationalize NLP to detect task categories, prevalence, and shifts in real time could use it to reassign administrative responsibilities, streamline workload through process improvement, and assess the need for additional resources in response to such shifts.

MATERIALS AND METHODS

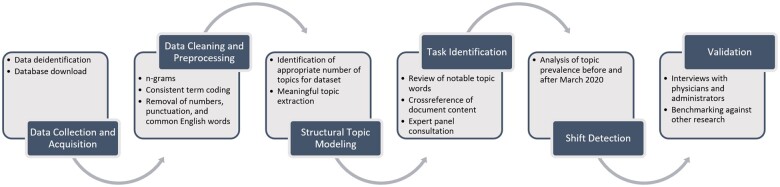

The 6-step methodology for this case study is summarized in Figure 1 and explained in more detail subsequently.

Figure 1.

A structural topic modeling approach for identifying and categorizing tasks performed by administrators in a medical practice.

Data collection and acquisition

Our analysis is based on proprietary mental health practice data stored on a Health Insurance Portability and Accountability Act–compliant task-management platform, Dock Health (https://dock.health/). Although we use data from this platform for this case study, similar administrative task free-text data sources could be analyzed using the methods we describe.

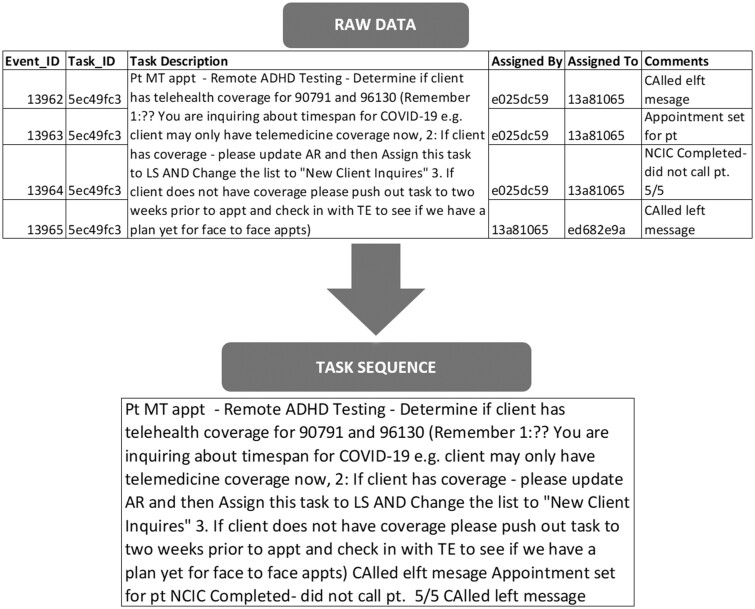

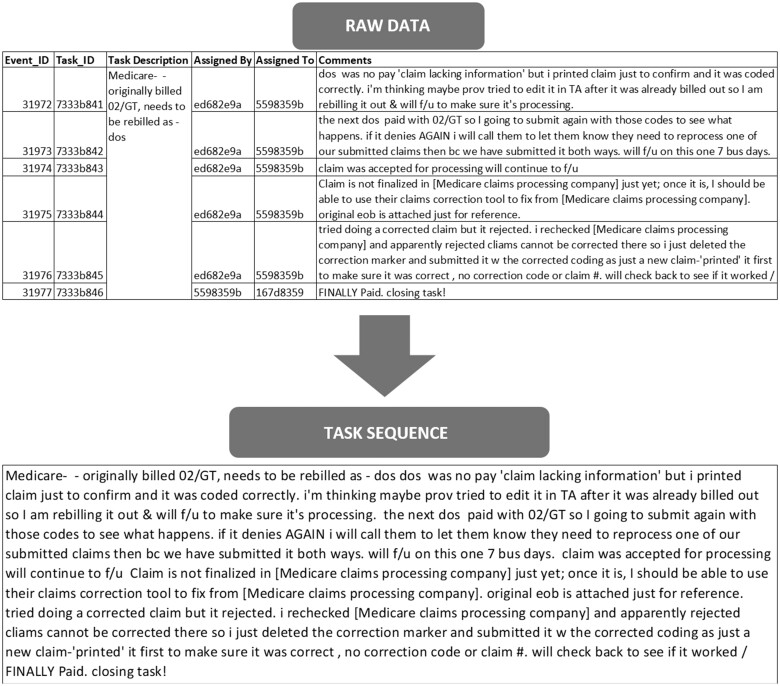

The U.S.-based mental health practice has 40 mental health providers, including psychologists and licensed mental health counselors, and 13 administrative staff. The data comprised 7079 task sequences completed by 13 users between November 25, 2019, and June 25, 2020. A task sequence consisted of task descriptions and free comments entered by users. Each task sequence was associated with a main task, which was assigned and reassigned to different assignees until the initial task was marked as “completed” (see Figure 2 for an example). Any user of the platform could assign tasks to any other user in the practice.

Figure 2.

Converting raw task description data to task sequence. This task (Task_ID 5ec49fc3) has 4 user-created events numbered 13 962 through 13 965. The task description is free text entered by the first user (e025dc59). The task was assigned to user 13a81065. User 13a81065 completed 3 events as part of the task and entered comments for each event, after which the task was assigned to user ed682e9a, who completed the fourth event, entered the last comment, and marked the task COMPLETED. A task sequence is a composite of the task description and all comments associated with the task, as shown in the box at the bottom.
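The conversion described in Figure 2 can be sketched in a few lines of Python. This is a minimal illustration, not the platform's actual data model: the `Task` class, its field names, and the example text are hypothetical, and only the task ID is taken from the figure caption.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """A main task with its free-text description and ordered event comments.

    Hypothetical structure for illustration; not the platform's schema.
    """
    task_id: str
    description: str
    comments: list[str] = field(default_factory=list)


def to_task_sequence(task: Task) -> str:
    """Join the description and all comments, in order, into one text string."""
    parts = [task.description, *task.comments]
    # Skip empty entries so stray whitespace does not enter the sequence.
    return " ".join(p.strip() for p in parts if p.strip())


# Made-up content; only the task ID comes from the Figure 2 caption.
example = Task(
    task_id="5ec49fc3",
    description="Call insurer about claim",
    comments=["Left voicemail", "Rep called back", "Claim reprocessed", "Done"],
)
sequence = to_task_sequence(example)
```

Each resulting string corresponds to one task sequence, which later serves as one document for topic modeling.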

This study’s focus was on tasks performed by administrative staff in the practice: of the 13 users, 12 had administrative roles and 1 was a practicing psychologist and practice owner with administrative responsibilities. We chose to focus on tasks performed by administrative staff because of their growing number and impact on providers, practices, and patients.1 Furthermore, by examining administrative tasks, we include the tasks of schedulers, credentialing staff, billers, and other roles that are traditionally understudied in healthcare settings.20

We restricted our dataset to administrative tasks by selecting all task sequence entries in the task management system assigned by and to administrative users. Protected health information and personally identifiable information were removed from the task sequences in accordance with Health Insurance Portability and Accountability Act privacy rules, using Amazon Comprehend Medical (an Amazon Web Services offering). The project was approved as exempt by our institution’s institutional review board.

Data cleaning and preprocessing

For the purposes of analysis, all comments in a particular task sequence were merged into a “document.” The documents were cleaned and preprocessed as follows. All text was converted to lowercase. Sequences of the most frequently occurring 2 and 3 contiguous words (2-grams and 3-grams) were generated to identify specialized terms. Common misspellings and abbreviations were corrected. Variations of terms were coded as single terms for consistency (eg, “credit card”, “card,” and “cc” were coded as “cc”). Numbers and punctuation were removed. Common English words such as “the” were removed using the SMART framework.21
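The cleaning steps above can be sketched with the Python standard library. This is a simplified illustration under stated assumptions: `TERM_MAP` and `STOP_WORDS` are small hypothetical stand-ins for the study's term codes and the SMART stop word list, and the n-gram count is run on cleaned tokens rather than at the exact point in the pipeline the study used.

```python
import re
from collections import Counter

# Hypothetical stand-ins; the study used its own term codes and the SMART stop list.
TERM_MAP = {"credit card": "cc", "card": "cc"}
STOP_WORDS = {"the", "a", "an", "to", "of", "and", "for", "on"}


def preprocess(document: str) -> list[str]:
    """Lowercase, unify term variants, strip digits/punctuation, drop stop words."""
    text = document.lower()
    # Replace longer variants first so "credit card" is handled before "card".
    for variant in sorted(TERM_MAP, key=len, reverse=True):
        text = re.sub(rf"\b{re.escape(variant)}\b", TERM_MAP[variant], text)
    text = re.sub(r"[^a-z\s]", " ", text)  # remove numbers and punctuation
    return [tok for tok in text.split() if tok not in STOP_WORDS]


def top_ngrams(token_lists: list[list[str]], n: int, k: int) -> list[tuple[tuple[str, ...], int]]:
    """Return the k most frequent runs of n contiguous tokens across documents."""
    counts: Counter = Counter()
    for tokens in token_lists:
        counts.update(zip(*(tokens[i:] for i in range(n))))
    return counts.most_common(k)


tokens = preprocess("Charged the Credit Card on 3/25, card declined.")
bigrams = top_ngrams([["left", "voice", "message"], ["left", "voice", "mail"]], n=2, k=1)
```

Frequent 2-grams and 3-grams found this way can then be reviewed by hand to decide which specialized terms deserve a single code.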

Topic modeling

Topic modeling is a generative model of text, in which documents are represented as a mixture of topics and topics are represented as a mixture of words.22,23 We used STM,24,25 an extension to classical topic modeling, to allow the prevalence of topics in the documents to be influenced, through a standard regression model, by an indicator variable corresponding to whether the documents were in the pre–COVID-19 or the COVID-19 dataset. This means that the same topics were identified by the algorithm in the pre–COVID-19 and the COVID-19 datasets, but the expected proportion of each topic in the corpus (ie, the topic’s prevalence) was allowed to be different in the 2 datasets. The number of topics itself is an input to STM and needs to be estimated carefully; see, for example, Zhao et al26 and the references therein. Measures such as held-out likelihood and semantic coherence27 are typically used to determine an acceptable range.25 We applied widely accepted methods to finalize the number of topics, both quantitative and qualitative. Specifically, we found the number of topics (10) after which there were diminishing marginal returns in terms of the held-out likelihood, and we utilized our expert panel to perform preliminary inspection of consistency and interpretability of the notable words obtained for each topic when varying the number of topics in a range around that number (from 5 to 13).8,28
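For readers who prefer a formula, the prevalence part of the model can be written roughly as follows. This is a sketch in the notation commonly used for STM, not notation taken from this study: $x_d$ is the COVID-19 indicator for document $d$, $\boldsymbol{\theta}_d$ is its vector of topic proportions, $\boldsymbol{\beta}_k$ is the word distribution of topic $k$, and $\gamma_{0,k}$, $\gamma_{1,k}$, and $\boldsymbol{\Sigma}$ are parameters to be estimated (identification constraints are omitted).

```latex
\begin{aligned}
\mu_{d,k} &= \gamma_{0,k} + \gamma_{1,k}\, x_d, \qquad x_d \in \{0, 1\}, \\
\boldsymbol{\theta}_d &\sim \operatorname{LogisticNormal}(\boldsymbol{\mu}_d, \boldsymbol{\Sigma}), \\
z_{d,n} \mid \boldsymbol{\theta}_d &\sim \operatorname{Multinomial}(\boldsymbol{\theta}_d), \\
w_{d,n} \mid z_{d,n} &\sim \operatorname{Multinomial}(\boldsymbol{\beta}_{z_{d,n}}).
\end{aligned}
```

Because $\boldsymbol{\beta}_k$ does not depend on $x_d$, the topics are shared across both periods, while $\gamma_{1,k}$ lets the expected proportion of topic $k$ differ between them.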

The topic (task) definitions produced by the STM algorithm were labeled by following the process in Erzurumlu and Pachamanova.28 An expert panel consisting of 3 domain experts with experience in administrative medical tasks and 2 users of the task management platform from the mental health practice was recruited. The experts were asked in isolation to interpret the meaning of each topic after reviewing the list of notable words found by the STM algorithm and 3 documents in which the corresponding topic had the highest prevalence. After each expert provided their definition of each topic (task), the final topic definitions were selected to be the terms that occurred in all experts’ descriptions, ie, those for which the experts were in unanimous agreement. For example, if some experts used “insurance billing inquiries” to define a topic, while others used “insurance billing inquiries; refunds” or “ncic and insurance billing inquiries” (where “ncic” stands for “new client informed consent” form), only “insurance billing inquiries” was kept as the final task definition. We note that the tasks identified through this process are nonpredefined. In contrast, traditional observation and survey methods predefine the tasks and the information is coded by human researchers.
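The unanimous-agreement rule can be illustrated with a set intersection. The sketch below assumes, purely for illustration, that each expert's free-text description can be split into terms on semicolons and the word "and"; the study does not specify how descriptions were decomposed, and the function names are hypothetical.

```python
import re


def label_terms(description: str) -> set[str]:
    """Split one expert's free-text label into normalized candidate terms.

    Splitting on ';' and the word 'and' is an assumption for this sketch.
    """
    parts = re.split(r";|\band\b", description.lower())
    return {p.strip() for p in parts if p.strip()}


def consensus_label(descriptions: list[str]) -> set[str]:
    """Keep only the terms that appear in every expert's description."""
    term_sets = [label_terms(d) for d in descriptions]
    return set.intersection(*term_sets) if term_sets else set()


# The three example descriptions quoted in the text.
expert_labels = [
    "insurance billing inquiries",
    "insurance billing inquiries; refunds",
    "ncic and insurance billing inquiries",
]
agreed = consensus_label(expert_labels)
```

On the example from the text, only "insurance billing inquiries" survives the intersection, matching the final task definition described above.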

Detecting shifts

After the tasks’ definitions were finalized, we analyzed the prevalence (ie, the relative volume or expected proportion) of the different topics in the pre–COVID-19 and COVID-19 datasets and focused especially on shifts, ie, on the difference in expected topic proportions, which can be obtained as an output of the STM algorithm.25 The pre–COVID-19 dataset spanned tasks during the 3 months before COVID-19 was declared a pandemic (November 25, 2019, to February 24, 2020). The COVID-19 dataset spanned tasks during the 3 months after the practice switched operations to telehealth (March 25, 2020, to June 25, 2020). There were 3668 task sequences in the pre–COVID-19 dataset and 3411 task sequences in the COVID-19 dataset.
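The intuition behind a prevalence shift can be sketched as a difference in mean topic proportions between the two date windows. This is a simplification: STM estimates the effect through a regression that also accounts for uncertainty in the topic proportions, whereas the sketch below uses only point estimates. The function names and the toy proportions are hypothetical; the date windows are the ones stated above.

```python
from datetime import date
from statistics import mean
from typing import Optional

# Date windows as stated in the text.
PRE = (date(2019, 11, 25), date(2020, 2, 24))
COVID = (date(2020, 3, 25), date(2020, 6, 25))


def period_of(day: date) -> Optional[str]:
    """Return 'pre', 'covid', or None for dates outside both windows."""
    if PRE[0] <= day <= PRE[1]:
        return "pre"
    if COVID[0] <= day <= COVID[1]:
        return "covid"
    return None


def prevalence_shift(docs: list[tuple[date, list[float]]], topic: int) -> float:
    """Mean proportion of `topic` in the COVID-19 window minus the pre-COVID-19 window."""
    by_period: dict[str, list[float]] = {"pre": [], "covid": []}
    for day, proportions in docs:
        period = period_of(day)
        if period is not None:  # documents between the windows are ignored
            by_period[period].append(proportions[topic])
    return mean(by_period["covid"]) - mean(by_period["pre"])


# Made-up topic proportions for five documents and two topics.
toy_docs = [
    (date(2019, 12, 1), [0.2, 0.8]),
    (date(2020, 1, 15), [0.4, 0.6]),
    (date(2020, 3, 10), [0.9, 0.1]),  # falls between the two windows
    (date(2020, 4, 1), [0.5, 0.5]),
    (date(2020, 5, 1), [0.7, 0.3]),
]
shift_topic0 = prevalence_shift(toy_docs, topic=0)
shift_topic1 = prevalence_shift(toy_docs, topic=1)
```

A positive value indicates that the topic takes up a larger share of the corpus in the COVID-19 window; because proportions sum to 1 within each document, the shifts across all topics sum to zero.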

Validation

The findings were discussed with a psychologist and an administrative assistant from the medical practice from which the task data were collected to understand the context. The qualitative data provided additional dependability and insights into our quantitative findings.29,30

RESULTS

The STM analysis extracted 10 topics. The interpretations of the topics (tasks) on which our panel of experts converged are listed in the first column of Table 1. The notable words for each topic according to 4 widely accepted metrics (frequency, FREX, lift, and score)25 are summarized in the second column of Table 1. Frequency lists the most commonly occurring words in each topic, FREX is a weighted metric of the frequency and the exclusivity of words within a topic, and lift and score are measures of the frequency of word occurrence in the corresponding topic relative to the frequency of occurrence in the rest of the corpus generated by the text of all task sequences. By sharing all 4 metrics with our expert panel, we were able to obtain a more nuanced understanding of each topic’s meaning. For example, notable words according to the lift metric for topic 2 included various payer codes, which were not discovered through other metrics. This led to a deeper discussion from our panelists of the exchanges that must occur between the practice and multiple payers.
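To see why lift can surface rare terms such as payer codes, consider one common definition of lift: a word's probability within the topic divided by its relative frequency in the whole corpus. The sketch below uses that definition with made-up numbers; the function name, the words, and the counts are all hypothetical and are not taken from the study's output.

```python
def lift(topic_word_probs: dict[str, float], corpus_counts: dict[str, int]) -> dict[str, float]:
    """Topic-word probability divided by the word's relative corpus frequency."""
    total = sum(corpus_counts.values())
    return {
        word: prob / (corpus_counts[word] / total)
        for word, prob in topic_word_probs.items()
    }


# Made-up numbers: "pt" is common everywhere, "payercode_x" is rare overall.
corpus_counts = {"claim": 10, "pt": 80, "payercode_x": 10}
topic_word_probs = {"claim": 0.1, "pt": 0.4, "payercode_x": 0.5}

scores = lift(topic_word_probs, corpus_counts)
ranked = sorted(scores, key=scores.get, reverse=True)
```

In this toy example the rare term ranks first by lift, whereas a term that is frequent across the whole corpus ranks last, which is consistent with payer codes appearing under lift but not under the other metrics.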

Table 1.

Topic (task) interpretation and STM output summaries

| Label | Metric | Top words |
|---|---|---|
| Topic 1: New client inquiries via website | Frequency | form, website, called, left, email, inquiry, completed, visitor, lvm, pmars |
| | FREX | website, inquiry, visitor, web, sidebar, secure, amp, lvm, form, left |
| | Lift | amp, anxietydepression, callled, cbt, consultationappointment, custody, decides, emdr, guaranteed, ikpeama |
| | Score | visitor, website, inquiry, sidebar, form, lvm, web, secure, pmars, completed |
| Topic 2: Insurance billing inquiries (practice calling insurers) | Frequency | pt, ncic, completed, refund, ins, waive, clm, pm, owed, copays |
| | FREX | ncic, reattestation, refunded, proview, bcbsr, enews, solutions, ba, da, bcbsmyy |
| | Lift | aca, approaching, bcbscep, bcbsgom, bcbshuk, bcbslpg, bcbsmcg, bcbspjn, bcbsxik, cca |
| | Score | ncic, refund, completed, waive, owed, copays, pt, reattestation, proview, bcbsr |
| Topic 3: Switching providers; credentialing | Frequency | provider, email, completed, form, note, signature, mobile, ticket, task, device |
| | FREX | signature, mobile, ticket, device, ipad, smartphone, resupport, lcswc, psyd, subtask |
| | Lift | adopted, agencya, appropriatenesstherapytesting, aso, blocked, click, council, credentials, dcphonea, dossier |
| | Score | device, smartphone, ipad, mobile, resupport, signature, lcswc, ticket, updates, unclassified |
| Topic 4: Cancellations via voicemail | Frequency | message, pm, voice, inquiries, cancel, reschedule, caller, wireless, call, md |
| | FREX | message, voice, reschedule, caller, wireless, air, dead, references, app, robocall |
| | Lift | revital, witness, app, booking, calleda, caller, callisonrtkl, catonsville, coup, dead |
| | Score | voice, message, inquiries, pm, reschedule, cancel, caller, wireless, dead, air |
| Topic 5: Medical record requests | Frequency | task, request, created, dock, emailed, ta, update, va, updated, send |
| | FREX | created, dock, fwd, record, support, connects, mln, records, requesting, link |
| | Lift | applicable, applications, assc, attachments, cert, ceus, cleaners, coffee, combine, credited |
| | Score | dock, created, task, fwd, request, va, emailed, connects, mln, record |
| Topic 6: Collection on client balances | Frequency | pt, ccd, appt, gs, called, va, auth, wp, closing, balance |
| | FREX | gs, wp, balance, owes, na, statement, npr, auth, charge, mssg |
| | Lift | asr, bounced, dispute, filea, krum, ld, mssg, packets, pre, reloaded |
| | Score | ccd, gs, wp, owes, balance, na, va, auth, pt, appt |
| Topic 7: New client inquiry follow-up | Frequency | im, number, appt, pmars, calling, phone, call, callback, give, therapy |
| | FREX | bye, daughter, doctor, son, years, evaluation, school, psychologist, pmhi, area |
| | Lift | abusive, acceptable, admitted, adults, african, agoraphobia, alright, amyes, anger, angry |
| | Score | bye, pmars, number, therapy, im, appt, anxiety, calling, daughter, depression |
| Topic 8: Collections from insurance (claims) | Frequency | pt, back, ins, call, called, today, tomorrow, week, monday, told |
| | FREX | active, heard, told, session, monday, hear, mom, gave, base, touch |
| | Lift | ack, arm, auto, cobra, doors, eligible, exchange, joint, kicks, log |
| | Score | back, pt, call, ins, called, plan, monday, told, today, mom |
| Topic 9: Psychological testing appointments and general appointment preparation | Frequency | appt, client, due, date, testing, scheduled, docs, email, office, glip |
| | FREX | testing, referral, determine, ifwhen, ensure, bariatric, listings, inquiring, proviewar, docs |
| | Lift | altogether, bunch, employee, ere, hew, intentionally, kioskforms, mrok, obtain, ordered |
| | Score | client, appt, testing, docs, due, date, scheduled, referral, determine, ifwhen |
| Topic 10: Claim resolution | Frequency | claim, dos, days, back, spoke, check, va, ref, paid, rep |
| | FREX | claim, dos, ref, rep, bus, reprocessed, denied, payments, correctly, reprocessing |
| | Lift | finalized, addon, adjudicated, adjudication, allowa, attn, automatic, autoposted, awhile, backed |
| | Score | claim, dos, ref, bus, paid, rep, reprocessed, va, denied, pmt |

STM: structural topic modeling.

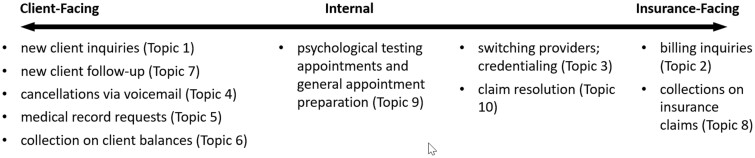

Types of tasks

A review of the task descriptions in Table 1 suggests that administrative tasks completed at the practice fall on a spectrum: from primarily client facing to internal to primarily insurance facing (Figure 3).

Figure 3.

Spectrum of task categories discovered: from primarily client facing (left) to internal (middle) to primarily insurance facing (right). Some tasks (topics 3, 10) are in between the internal and insurance-facing categories.

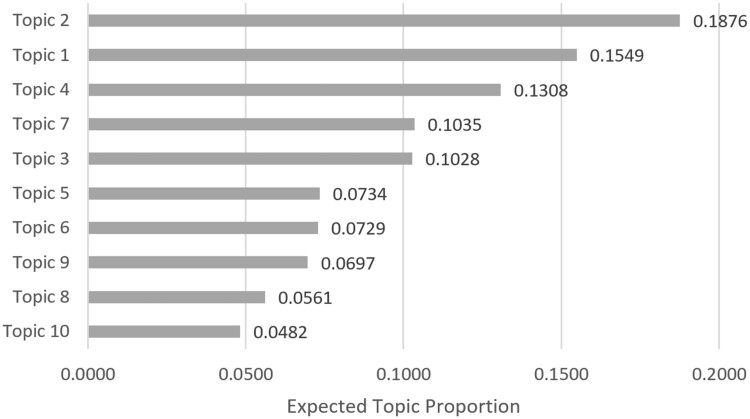

Task prevalence

Figure 4 displays the topic prevalence (expected topic proportions) in the entire corpus. The most prevalent topic is topic 2 (insurance billing inquiries); it is almost 20% of the corpus. The least prevalent topic is topic 10 (claim resolution); it is about 5% of the corpus.

Figure 4.

Estimated topic prevalence (expected topic proportions) in the corpus.

Task shifts

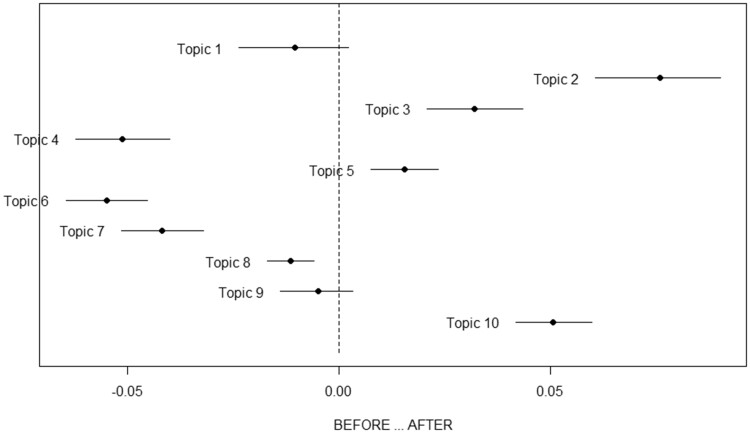

The prevalence of topics (tasks) differed in the pre–COVID-19 and COVID-19 periods, as illustrated in Figure 5. The prevalence of topics 2, 3, 5, and 10 significantly increased in the COVID-19 period compared with the pre–COVID-19 period. In contrast, the prevalence of topics 4, 6, 7, and 8 decreased.

Figure 5.

Analysis of changes in the prevalence of topics in the pre–COVID-19 (coronavirus disease 2019) period and COVID-19 period datasets. The scale on the vertical axis is irrelevant; it simply lists the topics in order. The horizontal axis shows the size of the incremental effect (positive for topics on the right-hand side of the graph and negative on the left-hand side of the graph) of an indicator variable (1 = COVID-19, 0 = pre–COVID-19) on the prevalence of the corresponding topic.

DISCUSSION

Types and prevalence of tasks

Our STM analysis revealed administrative tasks that may have been “invisible” due to their infrequency. For example, claim resolution (topic 10) has low prevalence (∼5% of the corpus); however, its detection is important because the task can be emblematic of a challenging or error-prone workflow. The task tends to involve variability and rework, requiring multiple interactions with the insurance companies, providers, and patients to clarify and eventually resolve the initially denied claim (see Figure 6 for a representative example of a claim resolution task sequence with high prevalence [>90%] of topic 10). After identifying an infrequent but inconsistent task, a practice may explore improved task standardization.31 If task standardization is infeasible due to the complexity of claim resolution (see, eg, Chen et al8), then administrative task time could be specifically allocated to resolve claims in a timely manner, because timely resolution affects practice finances.

Figure 6.

An example of a task sequence with high prevalence of topic 10 (claim resolution).

The STM analysis also reveals high-prevalence tasks that could be targeted for process improvement and workflow design to reduce the work burden on administrative staff.12 For example, new client inquiries via the website (topic 1) was the second most prevalent topic (∼15%). A targeted improvement project may reveal workflow redesign opportunities to, for example, include more information on the website or request additional information on the web form for more effective conversion of inquiries to a psychologist’s patient panel. Finally, we observe that a majority of task topics are client facing (∼54% of the corpus). Because all healthcare workers’ communication skills are indicators of care quality,32 this insight may encourage leadership to emphasize patient-centered communication for its administrative staff. This also indicates that patient-centered design approaches may be advisable when redesigning workflows related to client-facing task topics. For example, relatively new patients could be asked to provide feedback on the new patient inquiry and follow-up processes in order to improve customer service.

Task shifts

First, we discuss the 3 task topics whose prevalence increased the most during the COVID-19 period. Findings from the STM analysis (Figure 5) agree with statements from users of the platform that because of the switch to telehealth on March 25, 2020, there was a significant increase in the number of inquiries the practice needed to make to insurance companies to determine eligibility for reimbursement for certain services (topic 2). Insurance claim resolutions (topic 10) were a more prominent topic in the COVID-19 period because of the need to learn changing parameters of claim reimbursements by different insurance providers. Many clients switched insurance providers due to job loss or furlough; hence, healthcare providers had to be credentialed on multiple insurances during this time, leading to an increase in the prevalence of topic 3 in the COVID-19 period.

The prevalence of topics 4, 6, and 7 decreased the most during the COVID-19 period. Cancellations (topic 4) decreased because telehealth made it easier for patients to keep their appointments, or perhaps because of the more pressing need to keep these appointments given the stress of the new reality. Discussion of collections on client balances (topic 6) decreased due to the practice’s deliberate decision to ease the financial burden of patients, particularly given that as many as 10% of patients lost their health insurance due to unemployment. The practice’s choice to reduce patient costs via a sliding scale and to waive copays to support patients also contributed to a reduction in the prevalence of tasks associated with collections. The decrease in new client follow-up (topic 7) in the COVID-19 period despite a steady prevalence of new client inquiries (topic 1) indicated the need for more administrative and clinical support. It was evident that the resources of the mental health practice were strained and that it was difficult to take on new patients.

Overall, the data suggest a shift away from client-facing activities, ie, cancellations, collections, and new client follow-up, and a shift toward insurance-facing activities, ie, insurance eligibility, insurance claim resolutions, and increased provider credentialing with multiple insurers. Detecting the magnitude of such shifts in real time may lead the practice in the short term to provide additional education and support so that administrative staff can work with payers more effectively. The practice may even choose to hire a temporary biller or collector to manage the influx. However, for the long term, this focus may lead to decreased priority for client-facing activities. Because client-facing activities are still more prevalent overall and critical to a broader commitment to patient-centered care, administrative staff should be trained to balance greater task diversity.8

Research and practical implications

The insights discussed in the previous section are examples of the type of data-driven analysis that can be performed by utilizing NLP and user-generated data to enable the discovery of facts that may be unknown to healthcare administrators but can aid in task management and workflow optimization in health care. We note that this study is complementary to task management studies that utilize predefined activities such as Chen et al8 and Jones et al.9 The data we used in this study were collected with the idea of building workflows from the ground up: through observing how the task management system is used before defining activities and automating workflows. Task management systems that enable users to generate such data can use it to learn more about actual usage patterns and hidden tasks by applying methods similar to the one outlined in this case study. Such studies can be treated as the first stage in a general task management design pipeline.

Some previous observation-based studies have focused on grouping administrative work by staff member. For example, in one study, work done by scanning clerks to scan documents and create digital documents for the patient's EHR or other practice management systems was deemed fully automatable, leading to the conclusion that the occupation could be eliminated.6 In contrast, through the use of user-generated data and NLP, we emphasize the identification of the categories of tasks themselves, independent of role. Grouping by task category, as opposed to by staff role, helps focus on resource shifting, considering new roles that individuals may play in the organization, eg, in process improvement or more frequent tasks, and design of automated flows, instead of reducing the workforce.11 Platform designers and NLP analysts can use such insights to identify tasks that may be considered for automation or to refine current automation platforms given task prevalence (eg, topic 2) or a task’s laborious nature (eg, topic 10).

With task management systems that integrate with EHR systems, like the one used in this study, the approach could generalize beyond tasks performed by administrative staff. A taxonomy of tasks performed by healthcare providers, for example, has been challenging to create,1 but given appropriate data, the methodology outlined in this case study could be used for identifying categories of tasks providers perform. For example, physicians perform both clinical tasks that are directly associated with the practice of medicine and patient care and also administrative tasks. Some administrative tasks can only be completed by a physician and improve the quality, timeliness, and appropriateness of care, eg, writing in reason for referral from specialist in primary to internal medicine subspecialists or other physicians.1 Other administrative tasks can be completed by other staff or considered for automation or removal from the workflow design, eg, physicians must provide payer and laboratory with advance notification of outpatient laboratory tests.1 Evaluating the prevalence of clinical and administrative tasks that must be performed by physicians compared with tasks that could be performed by other staff can be useful for understanding the administrative task burden on medical professionals, which has been a noted problem associated with physician burnout.1,12

Limitations

The task management system that provided data for this case study, Dock Health, allowed for user-generated free text without predefined tasks, which enabled us to use NLP to categorize tasks. The approach is therefore most applicable to task management systems that provide the opportunity for users to enter free text when completing tasks.

The data in this case study were from 1 mid-sized multisite mental health practice, and findings may be different for other practices depending on factors like location, administrative structure, and patient demographics. Because users may have a different way of describing the same task through task management systems, the effectiveness of the proposed approach may need further validation for different task management systems in which users may have different system utilization behaviors. Findings may vary also due to potential differences in data preprocessing and the probabilistic nature of STM. In addition, with topic modeling and STM, there is a certain amount of human effort involved in interpreting and understanding the findings of the NLP algorithm. The approach is theoretically not restricted to tasks performed by administrative staff, but human oversight may need to be more extensive if it is applied to tasks performed by clinicians, in which the semantics may be much more complex. The sensitivity of detecting meaningful task definitions and task shifts should be extensively investigated for the particular hospital and practice.

CONCLUSION

This study provided an illustration of the viability of NLP algorithms for utilizing user-generated data for task management. In particular, STM appears effective at identifying nonpredetermined task categories, estimating task prevalence, and detecting shifts in task prevalence. Our case study of the effect of COVID-19 on a mental health practice’s operations uncovers shifts in tasks related to billing, cancellations, new client follow-up, and collections, and offers lessons for other healthcare organizations looking to operationalize NLP for building workflows and better resource allocation in task management.

Our findings revealed broader health policy insights beyond task management. For example, the shift from client-facing to insurance-facing tasks highlights some of the changes to telehealth coverage for mental health, which was expanded immediately after the outbreak of COVID-19 but has not been legislated to date.33 Legislating universal tele-mental health coverage may improve claim resolution efficiency, thus allowing more time for patient-focused tasks. Using our NLP approach on free text from other task management systems in other specialties would likely yield further insights about how COVID-19 (or other major organizational and institutional changes) changed care and what may need to be done in response at the practice, platform, and policy levels.

FUNDING

This research received no specific grant from any funding agency in the public, commercial or not-for-profit sectors.

AUTHOR CONTRIBUTIONS

WG, DP, and ZL conceived the study concept. DP led the methodology aspects and structural topic modeling analysis. WG led the expert panel interviews, task management concept development, and coordination of the project. ZL led the data curation, management, and preprocessing. MD provided feedback on the study concept and helped with expert panel recruitment. NG managed the data deidentification and organization process. DP and WG wrote the manuscript. All authors reviewed and edited the manuscript. All authors approved the final manuscript.

ACKNOWLEDGMENTS

The authors thank the dedicated staff at the mental health practice with which we collaborated. The authors are also very grateful to the Journal of the American Medical Informatics Association editors and 3 anonymous referees for their thoughtful comments and helpful suggestions.

CONFLICT OF INTEREST STATEMENT

MD and NG are co-founders and stock owners in Dock Health, Inc. De-identified data from Dock Health were provided to WG, ZL, and DP without sponsorship or remuneration.

DATA AVAILABILITY STATEMENT

The data underlying this article were provided by Dock Health with permission from the mental health practice in the case study. The text datasets generated and analyzed during the current study are not publicly available due to patient and provider private information.

REFERENCES

- 1. Erickson SM, Rockwern B, Koltov M, et al.; Medical Practice and Quality Committee of the American College of Physicians. Putting patients first by reducing administrative tasks in health care: a position paper of the American College of Physicians. Ann Intern Med 2017; 166 (9): 659–61.

- 2. Michel L. Into the black box of a nursing burden, a comparative analysis of administrative tasks in French and American hospitals. 2017. https://tel.archives-ouvertes.fr/tel-01762491. Accessed April 30, 2021.

- 3. Casalino LP, Nicholson S, Gans DN, et al. What does it cost physician practices to interact with health insurance plans? Health Aff (Millwood) 2009; 28 (4): w533–43.

- 4. Tollen L, Keating E, Weil A. How administrative spending contributes to excess US health spending. Health Affairs Blog. https://www.healthaffairs.org/do/10.1377/hblog20200218.375060/full/. Accessed April 30, 2021.

- 5. Westbrook JI, Duffield C, Li L, et al. How much time do nurses have for patients? a longitudinal study quantifying hospital nurses’ patterns of task time distribution and interactions with health professionals. BMC Health Serv Res 2011; 11 (1): 319.

- 6. Willis M, Duckworth P, Coulter A, et al. Qualitative and quantitative approach to assess the potential for automating administrative tasks in general practice. BMJ Open 2020; 10 (6): e032412.

- 7. Sakowski JA, Kahn JG, Kronick RG, et al. Peering into the black box: billing and insurance activities in a medical group. Health Aff (Millwood) 2009; 28 (4): w544–54.

- 8. Chen B, Alrifai W, Gao C, et al. Mining tasks and task characteristics from electronic health record audit logs with unsupervised machine learning. J Am Med Inform Assoc 2021; 28 (6): 1168–77.

- 9. Jones B, Zhang X, Malin BA, et al. Learning tasks of pediatric providers from electronic health record audit logs. AMIA Annu Symp Proc 2021; 2020: 612–8.

- 10. O'Malley AS, Draper K, Gourevitch R, et al. Electronic health records and support for primary care teamwork. J Am Med Inform Assoc 2015; 22 (2): 426–34.

- 11. Davenport TH, Glover WJ. Artificial intelligence and the augmentation of health care decision-making. NEJM Catalyst, 19 Jun 2018. https://catalyst.nejm.org/doi/full/10.1056/CAT.18.0151. Accessed April 30, 2021.

- 12. Thomas Craig KJ, Willis VC, Gruen D, et al. The burden of the digital environment: a systematic review on organization-directed workplace interventions to mitigate physician burnout. J Am Med Inform Assoc 2021; 28 (5): 985–97.

- 13. Dymek C, Kim B, Melton GB, et al. Building the evidence-base to reduce electronic health record–related clinician burden. J Am Med Inform Assoc 2021; 28 (5): 1057–61.

- 14. Hartzler AL, Taylor MN, Park A, et al. Leveraging cues from person-generated health data for peer matching in online communities. J Am Med Inform Assoc 2016; 23 (3): 496–507.

- 15. Roberts ME, Stewart BM, Airoldi EM. A model of text for experimentation in the social sciences. J Am Stat Assoc 2016; 111 (515): 988–1003.

- 16. Panchal N, Kamal R. The implications of COVID-19 for mental health and substance use. KFF. 2021. https://www.kff.org/coronavirus-covid-19/issue-brief/the-implications-of-covid-19-for-mental-health-and-substance-use/. Accessed April 30, 2021.

- 17. Patel SY, Mehrotra A, Huskamp HA, et al. Variation in telemedicine use and outpatient care during the COVID-19 pandemic in the United States. Health Aff (Millwood) 2021; 40 (2): 349–58.

- 18. Alexander GL, Powell KR, Deroche CB. An evaluation of telehealth expansion in U.S. nursing homes. J Am Med Inform Assoc 2021; 28 (2): 342–8.

- 19. Hron JD, Parsons CR, Williams LA, et al. Rapid implementation of an inpatient telehealth program during the COVID-19 pandemic. Appl Clin Inform 2020; 11 (3): 452–9.

- 20. Mayo AT, Myers CG, Sutcliffe KM. Organizational science and health care. Acad Manag Ann 2021; 15 (2): 537–76. doi:10.5465/annals.2019.0115.

- 21. Salton G, Wong A, Yang CS. A vector space model for automatic indexing. Commun ACM 1975; 18 (11): 613–20.

- 22. Blei DM, Ng AY, Jordan MI. Latent Dirichlet allocation. J Mach Learn Res 2003; 3: 993–1022.

- 23. Chen JH, Goldstein MK, Asch SM, et al. Predicting inpatient clinical order patterns with probabilistic topic models vs conventional order sets. J Am Med Inform Assoc 2017; 24 (3): 472–80.

- 24. Roberts ME, Stewart BM, Tingley D, et al. The structural topic model and applied social science. In: Advances in Neural Information Processing Systems Workshop on Topic Models: Computation, Application, and Evaluation; 2013: 1–20. https://mimno.infosci.cornell.edu/nips2013ws/slides/stm.pdf.

- 25. Roberts ME, Stewart BM, Tingley D. stm: an R Package for structural topic models. J Stat Softw 2019; 91 (2): 1–40.

- 26. Zhao W, Chen JJ, Perkins R, et al. A heuristic approach to determine an appropriate number of topics in topic modeling. BMC Bioinformatics 2015; 16 (Suppl 13): S8.

- 27. Mimno D, Wallach HM, Talley E, et al. Optimizing semantic coherence in topic models. In: Proceedings of the Conference on Empirical Methods in Natural Language Processing; 2011: 262–72.

- 28. Erzurumlu SS, Pachamanova D. Topic modeling and technology forecasting for assessing the commercial viability of healthcare innovations. Technol Forecast Soc Change 2020; 156: 120041.

- 29. Nadarzynski T, Miles O, Cowie A, et al. Acceptability of artificial intelligence (AI)-led chatbot services in healthcare: A mixed-methods study. Digit Health 2019; 5: 2055207619871808.

- 30. Lincoln YS, Guba EG. Establishing trustworthiness. In: Naturalistic Inquiry. Beverly Hills, CA: Sage; 1985: 289–327.

- 31. Nolan TW. Understanding medical systems. Ann Intern Med 1998; 128 (4): 293–8.

- 32. Gremigni P, Sommaruga M, Peltenburg M. Validation of the Health Care Communication Questionnaire (HCCQ) to measure outpatients’ experience of communication with hospital staff. Patient Educ Couns 2008; 71 (1): 57–64.

- 33. Wicklund E. Congress targets telehealth coverage for mental health, substance abuse treatment. 2021. https://mhealthintelligence.com/news/congress-targets-telehealth-coverage-for-mental-health-substance-abuse-treatment. Accessed April 30, 2021.
