Abstract

Objective

Despite the widespread and increasing use of electronic health records (EHRs), the quality of EHR documentation remains problematic. Efforts have been made to address the causes of poor EHR documentation quality. Previous systematic reviews have assessed the effectiveness of interventions in outpatient settings or for paper documentation. The purpose of this systematic review was to assess the effectiveness of interventions seeking to improve EHR documentation in the inpatient setting.

Materials and Methods

A search strategy was developed based on detailed inclusion and exclusion criteria. Four databases, the gray literature, and reference lists were searched. A REDCap data capture form was used for data extraction, and study quality was assessed using a customized tool. Data were analyzed and synthesized in a narrative, semiquantitative manner.

Results

Twenty-four studies were included in this systematic review. Owing to high heterogeneity, quantitative comparison was not possible; instead, statistically significant results were analyzed and discussed by intervention and outcome. Education and the implementation of a new EHR documentation system were the most successful interventions, as evidenced by significantly improved EHR documentation.

Discussion

Heterogeneity in interventions, outcomes, document types, EHR users, and other variables made it difficult to measure EHR documentation quality and the effectiveness of interventions. However, the use of education as a primary intervention aligned closely with existing literature in similar fields.

Conclusions

Interventions implemented to enhance EHR documentation are highly variable and require standardization. Emphasis should be placed on this novel area of research to improve communication between healthcare providers and facilitate data sharing between centers and countries.

PROSPERO Registration Number: CRD42017083494.

Keywords: electronic health records, documentation, quality improvement, inpatient, intervention

INTRODUCTION

Healthcare professionals worldwide have transitioned from handwritten documentation to electronic reporting processes. In North America, over half of office-based practices and hospitals use some form of electronic health record (EHR) documentation.1 In this review, clinical electronic documentation refers to “the creation of a digital record detailing a medical treatment, medical trial or clinical test.”2 Compared with conventional paper documentation, EHRs produce clear, legible data that lend themselves well to supporting patient care, communication among health professionals, and quality assurance, and to providing source information for coding administrative databases used in research. Although EHR documentation has existed since the 1960s, a review of the medical literature reveals that the quality and usability of EHR documentation are generally poor.3 Several problems with EHR documentation have been identified. These include structural problems: documentation quality suffers if the EHR system lacks built-in logic that prevents the user from proceeding to the next section of documentation before the previous section is complete. Similarly, free-text fields have been associated with more errors than point-and-click (radio button) fields.4 Resistance to EHR adoption further inhibits the standardization of documentation and can also affect data quality and usability.5

Poor EHR documentation can negatively affect many outcomes, including patient health. For example, misuse of the copy-and-paste function to carry text forward from a previous hospital stay can misrepresent the patient’s health concerns during the current hospital visit.6 Poor EHR documentation can also compromise patient safety; for example, prepopulated fields have led to medication errors.7 It can also reduce the quality of coding for administrative databases used in research.8 Inpatient EHR documentation is a source of coded data in several countries. In Canada, a national organization, the Canadian Institute for Health Information, uses administrative databases to provide robust information that informs health policy and improves the delivery of health services.9 The inpatient administrative database used by the Canadian Institute for Health Information is the Discharge Abstract Database, which relies heavily on the electronic documentation produced during a hospital visit.

Given these consequences of poor EHR documentation, it is essential to identify interventions that effectively improve its quality. Prior systematic reviews have explored ways to improve medical documentation; however, these have focused on the outpatient setting5,10,11 or on the documentation of a specific type of EHR user.11,12 Other reviews have not focused solely on electronic documentation (ie, they included interventions to improve the quality of paper documents)12 or have focused on a single type of intervention, such as computer-generated reminders or structured forms.13,14 These reviews highlighted (1) a dearth of literature addressing the improvement of EHR documentation; (2) successful interventions to improve EHR documentation (eg, system add-ons, educational materials, financial incentives); and (3) a variety of indicators used to measure documentation quality, such as completeness and accuracy of patient information.5 Consequently, a systematic review of the literature was performed, following the Preferred Reporting Items for Systematic Reviews and Meta-Analyses guidelines,15 to evaluate the effectiveness of interventions, programs, or institutional changes (broadly referred to in this article as interventions) that have sought to improve the quality of EHR documentation in the inpatient setting.

The aim of this review was to address 2 research questions: (1) What is the effectiveness of interventions seeking to improve inpatient hospital documentation in EHRs? (2) What tools and metrics were used to measure improvement in EHR documentation? The word “seeking” is crucial to the first question: studies were included if the intent of the intervention was to improve documentation quality, regardless of the study outcome.

MATERIALS AND METHODS

Search strategy

The following databases were searched from inception to November 8, 2017, with no language restrictions: MEDLINE, EMBASE, Cochrane Central Register of Controlled Trials, and CINAHL. Additionally, both investigators (L.O.V. and N.W.) completed a gray literature search, including conference proceedings identified through EMBASE. Experts in the field, identified from the review process, and other researchers who have previously worked on the topic were contacted for further information about ongoing or unpublished studies. Reference lists of included studies were also searched.

After consultation with 2 librarians, the search strategy was organized into 4 comprehensive themes. Within each theme, subject headings were exploded and searched by keyword, and all terms were combined with the Boolean operator OR: (1) derivatives of “electronic health records” to specify the main outcome; (2) derivatives of “documentation” to refine the main outcome; (3) both general terms and specific examples of interventions, including synonyms and derivatives, to capture the wide array of interventions; and (4) the Cochrane filter for randomized controlled trials (RCTs), the University of Alberta filter for observational studies, and the PubMed Health filter for quasi-experimental studies, to identify study designs.16–18 Finally, the 4 themes were combined with the Boolean operator AND. A detailed search strategy with all included terms is available in our study protocol.19
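The Boolean structure described above (OR within each theme, AND across themes) can be sketched in Python. This is a minimal illustration, not the review’s actual search: the terms below are hypothetical placeholders, and the real strategy in the study protocol also relies on database-specific subject-heading explosion and validated study-design filters, which are not modeled here.

```python
# Sketch of the search structure: OR within a theme, AND across themes.
# The example terms are hypothetical placeholders, not the review's search terms.


def or_block(terms):
    """Join one theme's synonyms with OR, quoting multiword phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(themes):
    """Combine the per-theme OR blocks with AND."""
    return " AND ".join(or_block(terms) for terms in themes)


themes = [
    ["electronic health records", "electronic medical records", "EHR"],  # theme 1
    ["documentation", "medical records"],                                 # theme 2
    ["education", "audit", "feedback", "template"],                       # theme 3
    ["randomized controlled trial", "cohort study"],                      # theme 4
]

print(build_query(themes))
```

Because a record must match at least one term from every theme, adding synonyms within a theme broadens the search, whereas adding a theme narrows it.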

Improvement in documentation and its possible measures were not specified as search themes to avoid excluding studies that may have used an improvement measure not listed in the data extraction form. Further, since an intervention could be applied to the computer program or EHR “vendor,” rather than a human group of participants, EHR users were not specified in this search.

Eligibility criteria and study selection

Detailed inclusion and exclusion criteria are outlined in our study protocol.19 To provide a comprehensive review of the topic, inclusion was not restricted to RCTs but extended to all original literature reporting on the quality of EHR documentation; consequently, experimental, quasi-experimental, and observational studies were captured. Studies from all countries were accepted to allow for generalizability of results. The study population comprised primary users of inpatient EHRs, including physicians, nurses, diagnostic imaging staff, pharmacists, and clinical trainees (eg, residents, interns). Interventions included, but were not limited to, activities, programs, or institutional changes applied to improve EHR documentation, such as the use of new software, dictation templates, audits, educational sessions, structured reporting, healthcare provider training, incentives, rewards, or penalties. Studies comparing interventions with controls (ie, standard EHR documentation or a comparator intervention) were preferred. Studies reporting on outpatient procedures and those in ambulatory settings were excluded. When the setting was not explicitly stated, expert opinion was sought to determine whether a study took place in the inpatient setting. The outcome of interest was improved inpatient EHR documentation. Outcome measures were selected a priori from the relevant literature4,20 and expert opinion. The “length” outcome refers to the number of lines or words in the text. As supported by the literature,21,22 a shorter text was considered an improvement, reflecting the elimination of redundancy in a note. Definitions of interventions and outcome measures are provided in Table 1.
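As a rough illustration of the “length” outcome (number of lines or word count, with a decrease scored as an improvement), the comparison could be computed as below. The function names and the plain-text note format are assumptions made for illustration; the included studies applied their own counting rules.

```python
# Hypothetical helpers illustrating the "length" outcome on plain-text notes.


def note_length(text):
    """Return (non-empty line count, word count) for a plain-text note."""
    lines = [line for line in text.splitlines() if line.strip()]
    words = text.split()
    return len(lines), len(words)


def length_improved(before, after, metric="words"):
    """Score a decrease in lines or words after the intervention as an improvement."""
    index = {"lines": 0, "words": 1}[metric]
    return note_length(after)[index] < note_length(before)[index]


before = "Pt admitted.\nPt admitted.\nCough, fever.\n"
after = "Admitted with cough and fever.\n"
print(note_length(before), note_length(after))  # (3, 6) (1, 5)
print(length_improved(before, after))  # True
```

This captures only the direction the review treated as an improvement; it says nothing about whether the shorter note retains the relevant content.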

Table 1.

Definitions of interventions and outcome measures for improved inpatient EHR documentation

| Intervention | Definition |
|---|---|
| New EHR Documentation System | Integration of a new documentation method into existing documentation software, involving the use of document templates or structured/synoptic reports. |
| Speech Recognition Software | Implementation of new software specifically designed to improve speech recognition for medical transcription. |
| New EHR Software System | Installation of new software with documentation support tools that were unavailable in the existing software. New software is often required for a facility to implement a new EHR documentation system. |
| Education | Educating HCPs on a new documentation system or on documentation quality. This can take the form of in-services, short- or long-term training sessions, orientations, etc. |
| Electronic Alerts | An alert system integrated into the EHR documentation system that prompts the user to enter required documentation. |
| Guidelines | A set of guidelines for creating high-quality documentation provided to the EHR user, usually as a paper document or pocket card. |
| Reminders | Reminders sent to the EHR user, typically via e-mail, regarding the importance of high-quality documentation. |
| Audit | Inspection of a group’s documentation quality, typically performed by the study authors and often accompanied by feedback to EHR users. |
| Feedback | Information given to EHR users regarding their documentation quality after implementation of an intervention. |
| Incentive | Reward system designed to motivate EHR users to adopt the study’s intervention. |

| Outcome measure | Definition |
|---|---|
| Medication accuracy | The absence of, or a decline in, errors and discrepancies in the medication record. |
| Document accuracy | The absence of, or a decline in, errors and discrepancies in the EHR document. |
| Completeness | The absence or reduction of missing information, as well as the addition of documented items within a medical record. |
| Timeliness | A decrease in the time required to complete the document, as well as a shorter turnaround time before the document is available. |
| Overall quality | Variously defined by each study and assessed through mean scores on customized checklists or quality indicators. |
| Clarity | A well-organized, readable, and easily understandable document. |
| Length | A decrease in the number of lines or words. |
| Document capture | An increase in the number of documents created (not included in this review because of lack of data). |
| User satisfaction | Determined by the primary EHR users in surveys evaluating their opinion of the intervention’s implementation. |

EHR: electronic health record; HCP: healthcare provider.

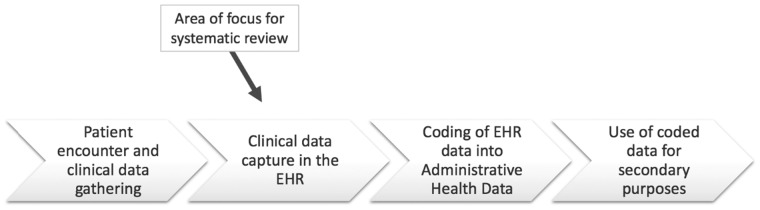

During the full-text screening stage, studies were excluded if the aim of the intervention was to improve other healthcare service areas (eg, patient care, healthcare delivery) or if improved clinical outcomes were the primary or secondary goal. Although ensuring EHR documentation quality is essential for clinical purposes, the measures used to evaluate improvement in these areas (eg, shorter length of stay, improved patient health) are not applicable to other uses of the document, such as improving healthcare provider communication or improving usability by coders. As outlined in Figure 1, this systematic review focuses on the quality of the EHR documentation created during a clinical encounter, prior to its coding, and its impact on administrative data and the data management chain.

Figure 1.

Data management chain and point of interest for electronic health record (EHR) documentation quality improvement interventions.

Both the title/abstract and full-text screening phases were performed independently by 2 reviewers (L.O.V. and N.W.) with the support of an eligibility criteria screening tool. Titles and abstracts were scanned to select articles for in-depth analysis. Articles were selected for full-text review if both reviewers agreed that they met the eligibility criteria, or if the abstract did not provide sufficient information to make a decision. Discrepancies between reviewers were discussed until agreement was reached. When eligibility remained unclear, the articles’ authors were contacted and the articles were examined with another investigator (D.J.N.). Interrater agreement at both screening stages was assessed using the kappa statistic.
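The kappa statistic compares the observed agreement between the 2 reviewers with the agreement expected by chance from each reviewer’s include/exclude rates. The sketch below computes Cohen’s kappa with an approximate 95% confidence interval. It assumes Cohen’s kappa with a simple large-sample standard error; the article does not state which variance estimator was used, and statistical packages may apply a more exact formula. The example decisions are hypothetical.

```python
import math
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa with an approximate 95% CI for two raters' categorical decisions.

    Uses the simple large-sample standard error
    SE = sqrt(po * (1 - po) / (n * (1 - pe) ** 2)), an assumption for illustration.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items both raters labeled identically.
    po = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal proportions, summed over categories.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    pe = sum(count_a[c] * count_b[c] for c in set(count_a) | set(count_b)) / n**2
    if pe == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    kappa = (po - pe) / (1 - pe)
    se = math.sqrt(po * (1 - po) / (n * (1 - pe) ** 2))
    return kappa, (kappa - 1.96 * se, kappa + 1.96 * se)


# Hypothetical screening decisions for 10 citations.
reviewer_1 = ["in", "in", "out", "out", "out", "out", "in", "out", "out", "out"]
reviewer_2 = ["in", "out", "out", "out", "out", "out", "in", "out", "in", "out"]
kappa, ci = cohens_kappa(reviewer_1, reviewer_2)
print(round(kappa, 2))  # 0.52
```

Here the reviewers agree on 8 of 10 citations (80%), but because both exclude most citations, 58% agreement is expected by chance, so kappa is only moderate. With very few items the approximate interval can extend beyond 1.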

Data extraction and study quality assessment tool

To collect pertinent information from all included studies, a data extraction form with built-in logic was created using REDCap.23 This logic included hidden questions that appeared only when a certain answer was chosen; because this is a real-time feature, it cannot be depicted in the printed form provided as Supplementary Appendix S1. The form focused on detailed study characteristics (eg, EHR users, type of setting, outcome measures). For the results of interventions, the reviewers extracted differences between intervention groups, as well as differences from before-and-after and cross-sectional designs. The data extraction tool also allowed reviewers to abstract the outcome measures used to identify high- or low-quality EHR documentation. Study quality and systematic error (bias) were assessed using a hybrid of the Downs and Black scale and the Newcastle-Ottawa Scale, comprising 11 items to capture experimental, quasi-experimental, and observational study designs (Supplementary Appendix S2).24,25

Data synthesis and analysis

Given the heterogeneity of the included studies, as well as the lack of standardized or consistent reporting of outcome measures, meta-analysis was not possible. Factors associated with heterogeneity were explored, as was the effect of several variables on the results of the interventions. These variables included, but were not limited to, type of EHR user (eg, physician, nurse, pharmacist, therapist), type of setting (urban or rural), size of setting (single center or multicenter), area of clinical practice, demographic characteristics of users, and experience with EHRs (years of use). The final number and characteristics of studies included in and excluded from the systematic review were reported in a Preferred Reporting Items for Systematic Reviews and Meta-Analyses flow diagram. All extracted data were tabulated, including participant characteristics, study designs, interventions, instruments, and study results. For the primary question (overall effectiveness of interventions), results across all studies were grouped by intervention. Differences between study results were presented in narrative form with semiquantitative analysis, and statistical significance was used as the measure of each intervention’s effectiveness. To address the secondary question, the metrics used to identify interventions with high or low effectiveness were described.
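Grouping results by intervention and using statistical significance as the effectiveness criterion amounts to a vote count. The sketch below shows the tallying step on hypothetical records; the study names, intervention lists, and results are placeholders, not data from the included studies.

```python
from collections import defaultdict

# Hypothetical records: (study, interventions used at any level,
# whether at least one outcome improved with statistical significance).
studies = [
    ("Study A", ["education", "feedback"], True),
    ("Study B", ["new EHR documentation system"], True),
    ("Study C", ["education"], False),
    ("Study D", ["new EHR documentation system", "education"], True),
]


def tally_by_intervention(records):
    """Map each intervention to (studies using it, studies with a significant improvement)."""
    tally = defaultdict(lambda: [0, 0])  # intervention -> [used, improved]
    for _study, interventions, significant in records:
        for name in set(interventions):  # count each intervention once per study
            tally[name][0] += 1
            if significant:
                tally[name][1] += 1
    return {name: tuple(counts) for name, counts in tally.items()}


for name, (used, improved) in sorted(tally_by_intervention(studies).items()):
    print(f"{name}: improved in {improved} of {used} studies ({improved / used:.0%})")
```

Counting significant results rather than pooling effect sizes mirrors the approach described above, which was chosen because heterogeneity precluded meta-analysis; the trade-off is that a vote count ignores the magnitude of each effect.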

RESULTS

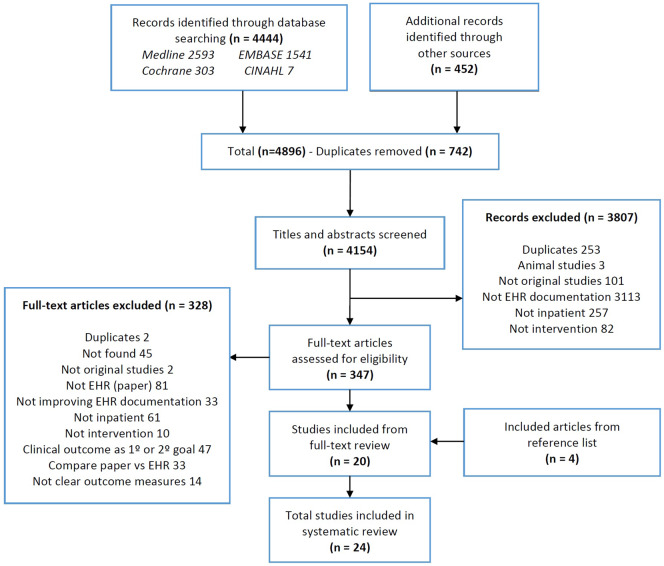

Study screening and selection are summarized in Figure 2. Of 4896 citations, 347 were selected for full-text review. The kappa score was 0.45 (95% confidence interval, 0.41-0.50) for title and abstract screening and 0.77 (95% confidence interval, 0.65-0.89) for full-text screening. In total, 24 studies satisfied the eligibility criteria and were included in the final review.26–49 Publication dates ranged from 2004 to 2016. Seventeen (71%) studies were conducted in North America (13 in the United States, 4 in Canada), and 22 (92%) were conducted in single centers. Participant populations were combinations of physicians, nurses, or trainees. Eight studies did not report the number of study participants; in the 16 that did, the number ranged from 5 to 3232. The number of records analyzed in each study ranged from 25 to 21 595, with a total of 43 611 records among all 24 studies. Interventions targeted a variety of EHR documents, mostly operative reports (n = 7) and discharge summaries (n = 6), with fewer studies addressing problem lists (n = 2), all documents in the EHR (n = 3), and other document types (Table 2).

Figure 2.

Preferred Reporting Items for Systematic Reviews and Meta-Analyses flow diagram. EHR: electronic health record.

Table 2.

Study characteristics

| Author (year) | Country (continent) | Study setting (specialty), population | Study design | Length of study (follow-up) | Type of record (n) | EHR documentation improvement interventions | Description |
|---|---|---|---|---|---|---|---|
| Eichholz (2004)39 | United States (NA) | SC (Obstetrics/Gynecology); residents | QE | 1 mo and 1 wk (1 mo) | Operative reports (1) | 1. Education | A teaching program on operative record dictation, including a 30-min teaching session, was implemented. Key elements of operative notes were discussed and examples were given. |
| Laflamme (2005)26 | United States (NA) | SC (Obstetrics/Gynecology) | OBS | 16 wk | Operative reports (336) | | A 20-min orientation to electronic templates was provided, and residents were randomized to either standard or template dictation. |
| Koivikko (2008)35 | Finland (EU) | SC (Radiology); radiologists | OBS | 2 y (1 y) | Radiology reports (21 595) | | A new speech recognition program was implemented for the radiological dictation process. |
| Bergkvist (2009)49 | Sweden (EU) | SC (Internal medicine); physicians | OBS | 9 mo | Medication report (172); medication list (105) | 1. Feedback | Clinical pharmacists reviewed the discharge summary before patient discharge and gave feedback to the physician using a structured checklist, focusing on the correctness of the medication report and the medication list. |
| Haberman (2009)44 | United States (NA) | MC-2 (Obstetrics); nurses, physicians, residents | RCT, nonblinded | 6 mo | All documents (10 996) | 1. Electronic alerts | An advanced clinical decision support tool (IPROB) specific to obstetrics was used, in which a computer prompt requested documentation of an indication for labor induction. |
| Johnson (2009)27 | United States (NA) | SC (Radiology/Neurology); radiology residents | OBS | 6 mo (4 mo) | Radiology reports (25) | | A new EHR software system using structured reporting (SRS) was implemented. Group and individual training sessions with the SRS vendor were held over a 2-mo period. |
| Maslove (2009)28 | Canada (NA) | SC (Internal medicine); physicians, residents, interns, medical students | RCT, nonblinded | 2 mo | Discharge summaries (94) | 1. New EHR documentation system (electronic discharge summaries) | A novel web-based computer program was implemented to generate discharge summaries. |
| Galanter (2010)37 | United States (NA) | SC (All); physicians, house staff | OBS | 5 mo (2 mo) | Problem lists (NR) | | Automated CDS software was created, with an alert system that prompted clinicians to add a diagnosis to the problem list based on medications chosen by house staff. |
| Park (2010)29 | United States (NA) | SC (Internal medicine); physicians (surgeons) | OBS | 4 mo | Operative reports (214) | 1. New EHR documentation system (synoptic reports) | Electronic synoptic operative reports used a smart navigation system that incorporated relevant items in checkboxes, drop-down menus, and prepopulated fields. |
| Dinescu (2011)48 | United States (NA) | SC (Geriatric); fellows | QE | 1 y | Discharge summaries (158) | | Dictated discharge summaries were audited with a 21-item completion checklist. Feedback on areas needing improvement was given in one-on-one 30-min sessions. |
| Hoffer (2012)30 | Canada (NA) | SC (Nephrology); physicians (attending surgeon), residents | OBS | 2 y and 3 mo | Operative reports (200) | | A clinical documentation tool, eKidney, was used for kidney cancer patient care; it generated electronic operative notes with structured templates. |
| Bischoff (2013)40 | United States (NA) | SC (All); residents | QE | 1 y and 2 mo (6 mo) | Discharge summaries (240) | | Discharge summary improvement bundles were implemented, comprising an educational curriculum, an electronic discharge summary template, regular data feedback, and a financial incentive. |
| Falck (2013)38 | United States (NA) | SC (All); physicians (attending), residents | OBS | 3 y | Problem lists (NR) | | A clinical decision support (CDS) tool was used, in which an alert system prompted ordering physicians to select from problem lists based on medications entered into the system. |
| Russell (2013)41 | Australia (OC) | SC (General medicine); interns | QE | 1 y and 10 wk | Discharge summaries (140) | 1. Education | A 30-min training session was given to 5 interns during their first week of general medicine service. |
| Axon (2014)42 | United States (NA) | SC (Internal Medicine); residents, house staff | QE | 6 mo | Discharge summaries (NR) | | A 60-min lecture was given to all residents, accompanied by team-based feedback and discharge summary template cards. |
| Jakob (2014)47 | Germany (EU) | SC (All); physicians | QE | 4 mo | Operative reports (188) | 1. Reminders | Monthly reminder emails were sent to surgeons and the chief of staff. |
| Maniar (2014)31 | Canada (NA) | SC (All); physicians | OBS | 2 y | Operative reports (160) | | WebSMR used a combination of menu selection and free-text fields to generate an SR that was available in the patient's electronic medical record or could be printed as a hard copy. |
| Dean (2015)33 | United States (NA) | SC (Pediatric); interns | QE | 1 y | Progress notes (50) | | This intervention bundle consisted of establishing note-writing guidelines, developing an aligned note template, and educating interns about the guidelines and the use of the template. |
| Maniar (2015)32 | Canada (NA) | SC (All); physicians | OBS | 4 y | Operative reports (194) | 1. New EHR documentation system (synoptic reports) | The template-based reporting system included fields for patient demographics, preoperative investigations and treatments, and important procedure-related steps and findings. |
| Sandau (2015)45 | United States (NA) | MC-10 (Cardiology); nurses | QE | 1 y and 6 mo (3-6 mo) | Nursing notes (4011) | | A reminder alert was sent if the patient was taking a QT-prolonging medication. Staff received an online education module. |
| Tan (2015)43 | Australia (OC) | SC (General medicine); physicians, residents, interns | QE | 14 wk | Discharge summaries (772) | | Before auditing began, users were taught about the importance of documentation. Charts were then audited, feedback was provided to EHR users regularly, and coffee vouchers were offered as an incentive. |
| Vogel (2015)36 | Germany (EU) | SC (Trauma/Pediatric); physicians, interns | RCT, nonblinded | 4 mo | All documents (1455) | | A new web-based medical automatic speech recognition system for clinical documentation in German was implemented, along with a short training session. |
| Mehta (2016)34 | United States (NA) | SC (All); physicians | OBS | 4 mo (3 mo) | All documents (200) | | A new group of focused structured templates organized as clinical decision aids, called problem-oriented templates, was created. A voluntary 2-wk training period was followed by a 2-wk intervention period. |
| Vercheval (2016)46 | Belgium (EU) | SC (All); nurses, physicians | QE | 1 y and 3 mo (1 y) | Operative reports (2306) | | Guidelines were implemented, along with the distribution of educational materials and interactive group educational sessions for physicians and nurses on all wards. |

CDS: clinical decision support; EU: Europe; MC: multicenter; NA: North America; NR: not reported; OBS: observational; OC: Oceania; QE: quasi-experimental; QT: QT-interval in cardiac rhythm monitoring; RCT: randomized controlled trial; SC: single center; SR: synoptic report; SRS: structured reporting system.

Interventions and comparators

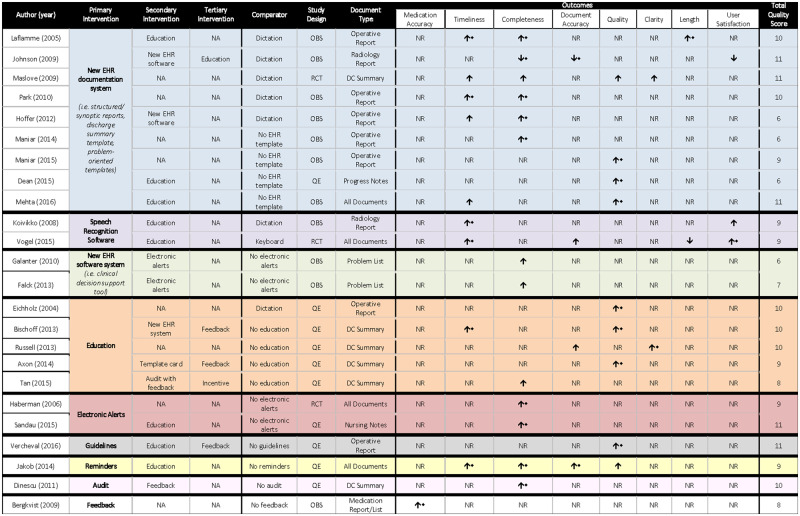

A summary of studies, interventions (primary, secondary, and tertiary), and effect on outcomes is displayed in Figure 3.

Figure 3.

Semiquantitative synthesis of included studies. DC: discharge; EHR: electronic health record; NA: not applicable; NR: not reported; OBS: observational; QE: quasi-experimental; RCT: randomized controlled trial.

For the purpose of analysis, interventions were stratified into 3 levels: primary, secondary, and tertiary. Primary interventions were the main interventions used in each study, whereas secondary and tertiary interventions played a supporting role (the weight and importance of each intervention were as assigned by the study authors). The most commonly used primary intervention was the creation of a new EHR documentation system (n = 9). The most commonly used intervention across all levels was education (n = 14). A detailed description of the interventions used by each study is provided in Figure 3. Comparators varied; the most commonly used comparator was dictation (n = 7).

Outcomes

To measure documentation improvement, 9 outcomes were selected and defined from the relevant literature (medication accuracy, timeliness, completeness, document accuracy, overall quality, clarity, length, user satisfaction, and document capture). Only 1 study defined an outcome in a way that contradicted this review’s definition; this concerned length.36 No studies reported on document capture; therefore, it was excluded from the analysis.

All studies provided quantifiable outcomes, expressed as means and standard deviations or as proportions. The degree of improvement for a given outcome could not be compared across studies because of extreme heterogeneity in outcome measurement (eg, scoring system, checklist, study reviewer) and the large number of variables contributing to any given outcome (eg, type of intervention, study participants, type of document, or comparator). Therefore, the reported statistical significance was used to determine whether an intervention was effective. Five studies reported no statistically significant outcomes; these studies varied widely in quality, setting, and design (Figure 3).

Medication accuracy and clarity were each reported by 1 study. The most frequently reported outcome was completeness, assessed in 13 studies, of which 9 (69%) showed a statistically significant change. Timeliness and quality were each reported by 9 studies, and statistically significant improvements in quality were seen in 8 of these 9 studies (89%). Quality of the EHR document improved most with the implementation of a new EHR documentation system (n = 3) or an educational intervention (n = 3). However, these results should be interpreted with caution, because these 2 interventions were the most frequent among all 24 studies. Other interventions varied in outcome reporting (Figure 3). There was no association between the number of interventions used and the number of outcomes affected. In terms of effectiveness, education was the most effective intervention, improving EHR documentation in 12 of 14 studies (86%), followed by the implementation of a new EHR documentation system, which improved EHR documentation in 7 of 9 studies (78%). Statistically significant outcomes and metrics are further examined in Supplementary Appendix S3.
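The effectiveness proportions above follow directly from the study counts. A short check, using only counts stated in this section and assuming rounding to the nearest whole percentage:

```python
# Counts stated in the Results: (studies with a significant improvement, studies assessed).
reported = {
    "Education": (12, 14),
    "New EHR documentation system": (7, 9),
    "Overall quality outcome": (8, 9),
}


def percent(improved, total):
    """Whole-number percentage, rounded to the nearest integer."""
    return round(100 * improved / total)


for label, (improved, total) in reported.items():
    print(f"{label}: {improved} of {total} = {percent(improved, total)}%")
```

With denominators this small, a single study moves each proportion by roughly 7 to 11 percentage points.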

Owing to heterogeneity in outcome measurement, the secondary study question (What tools and metrics were used to measure improvement in EHR documentation?) could not be fully addressed. However, because a validated quality reporting tool exists for overall quality, the metrics used for that outcome were explored. Of the 9 studies reporting on overall quality, 8 used an ad hoc tool or questionnaire, whereas only 1 used the validated Physician Documentation Quality Instrument (PDQI-9).20

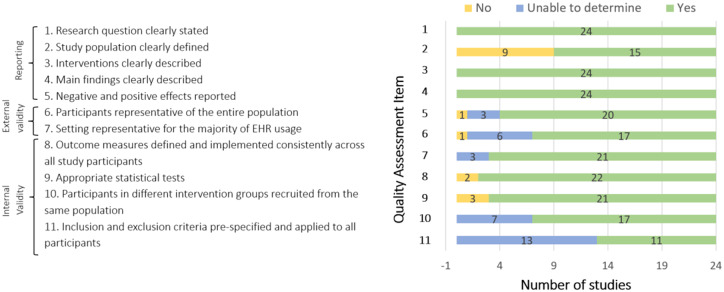

Study quality

Study quality was generally good: 17 of 24 studies scored ≥9 out of a maximum of 11, and the lowest score was 6. No differences in study quality were seen across study designs or by number of interventions used. Notably, high ratings on the quality assessment tool were given to studies reporting both positive and negative outcomes. All 3 nonblinded RCTs scored ≥9, and several observational and quasi-experimental studies also achieved high quality scores. The overall mean quality score was 9, and mean scores by type of primary intervention were as follows: new EHR documentation system = 8.9, speech recognition software = 9, new EHR software system = 6.5, education = 9.4, electronic alerts = 10, guidelines = 11, feedback = 8, audit = 10, reminders = 9 (Figure 3). Figure 4 summarizes the study quality scores, showing the number of studies for each item of the quality assessment tool.

Figure 4.

Quality assessment results.

DISCUSSION

Principal findings

This systematic review identified 11 different interventions that aimed to improve inpatient EHR documentation. There was marked heterogeneity in study design, study participants, type of interventions, comparators, outcomes, and the metrics used for each outcome. The most widely used interventions were education and the introduction of a new EHR documentation system, which improved EHR documentation on a number of different outcomes (primarily completeness, timeliness, and overall quality). These findings suggest that, regardless of the type of intervention used, EHR documentation is likely to improve on at least 1 outcome.

The studies reporting the largest number of outcomes with statistically significant improvement were Laflamme et al26 and Jakob et al.47 The former used the most commonly reported document type (operative report) and comparator (dictation) and improved timeliness, completeness, and length, whereas the latter improved timeliness, completeness, and document accuracy.26,47 In contrast, Johnson et al27 consistently reported negative findings. Examination of this study's methods showed that users perceived the EHR documentation system as "time consuming" and felt it constrained their ability to document. The newly implemented system (eDictation) worsened completeness, document accuracy, and user satisfaction.

With regard to study quality, because the quality scoring tool placed heavy weight on the study population, up to 4 items could be negatively affected if the study population was not adequately described. In Figure 4, items 2, 6, 10, and 11 contain visibly more "no" and "unable to determine" responses, as these were the 4 items pertaining to the study population. Unfortunately, many of the included studies failed to describe the study population adequately, either by not specifying the EHR user or by not describing the patient care setting in which the EHR documentation was completed. As a result, differences in users' demographic characteristics and EHR experience (years of use) could not be assessed.

Last, the 2 studies that reported on length defined this outcome inconsistently.26,36 EHRs have made it easier for users to "note-bloat," a term referring to the unnecessary copying and pasting of previous consultation notes into the current visit note, which adds extraneous information that does not benefit the reader. Note-bloat not only increases the time the receiving physician spends reading the note but can also obscure relevant information and thereby impede patient care delivery.50 For coders, longer documents take more time to code, and unnecessary information can make it harder to find the relevant diagnoses for that visit.51 In this review, improved length was defined as a shorter document, a definition supported by the literature, particularly regarding the preferences of primary care physicians (PCPs) receiving an EHR document. As per Coit et al,21 "PCPs value summaries that are brief and focused." Rao et al22 found that physicians perceive shorter length as an important element of documentation quality. Nevertheless, one of the included studies, Vogel et al,36 defined improved length as a longer, more complete document; consequently, its interventions were considered to worsen EHR documentation. The authors recognize the ambiguity surrounding the benefit of a shorter document, because a shorter document is not necessarily of higher quality, nor is it necessarily more concise or less redundant. However, neither conciseness nor redundancy was reported as a measure of documentation quality in any of the included studies. Therefore, to ensure consistency with existing literature and to capture all outcomes reported by the included studies, length was retained as an outcome measure.

Regarding the secondary research question, evaluating EHR documentation improvement proved difficult owing to heterogeneity in the measures used to assess the different outcomes (eg, percentages, frequencies, customized checklists, personalized scoring tools). Little literature addresses the availability of, or need for, a gold-standard measurement tool for any outcome other than overall quality. To the reviewers' knowledge, only 1 validated tool exists to measure documentation quality in the outpatient setting (QNOTE)52 and 1 in the inpatient setting (PDQI-9); however, the PDQI-9 relies on physicians' subjective impressions.20 Moreover, only 1 included study used the PDQI-9, whereas the other 8 used ad hoc tools. The absence of a gold standard for this subjective outcome limits the generalizability of this review's findings and highlights the need for a gold-standard quality measurement tool.

Relevance to existing research

This review provides a new synthesis of data on interventions to improve inpatient EHR documentation. The results are consistent with those of Hyppönen et al,14 who also found heterogeneity of outcomes in their systematic review of structured EHR documentation in both the outpatient and inpatient settings. Furthermore, structured reporting (ie, new EHR documentation systems) has been shown to improve document quality, consistent with the findings of this review, but not to improve patient outcomes, suggesting that EHR documentation and patient outcomes may be poorly correlated.13

Additionally, Hamade5 found that interventions that successfully improved EHR documentation focused on feature add-ons, educational sessions, and incentives. Similarly, the current review found education to be the most effective intervention. However, Hamade's5 findings were obtained in the outpatient (primary care) setting and may not transfer to the inpatient setting. For instance, in this review incentives were not effective in improving EHR documentation and were among the least commonly reported interventions. This discrepancy suggests that different interventions may suit inpatient and outpatient documentation, and further research is needed to assess the effectiveness of incentives in the inpatient setting.

Strengths and limitations

EHR documentation improvement proved to be a novel yet robust area of research, as demonstrated by the recent publication dates of the included studies (2004 to present) and the large number of search results. This may be attributed to the widespread uptake of EHRs worldwide, which underscores the relevance of this topic. Additionally, this review provides a methodologically rigorous template for future systematic or scoping reviews exploring the effectiveness of interventions to improve EHR documentation. Its findings are relevant to clinicians, policymakers, health information managers, quality improvement specialists, and coding organizations, and can guide future researchers seeking to improve the quality of administrative discharge databases.

This review has several limitations. First, because of the large volume of literature identified, the inclusion and exclusion criteria had to be narrowed, which excluded many articles whose focus differed from the main question. In particular, studies whose primary or secondary goal was to improve clinical outcomes, and which only indirectly improved EHR documentation, were excluded. Important articles may therefore have been missed. However, the direct impact of improved EHR documentation on patient outcomes is still largely unknown and merits a separate analysis. Second, studies often lacked information on the type of patient record used (ie, electronic or paper), the study setting, and the study population. Study authors were contacted to clarify these ambiguities, but for studies whose authors did not respond, this information remains unclear. Finally, only 8 studies reported a follow-up period, so the long-term success of the interventions was difficult to determine.

Future directions

This systematic review demonstrates that many interventions are being implemented to improve inpatient EHR documentation. However, it also highlights important weaknesses in the evidence. Standardized EHR reporting is lacking, as demonstrated by the heterogeneity in outcomes, interventions, and metrics. Thus, standardized document formatting needs to be implemented, and standardized measurement tools and evidence-based interventions to improve EHR documentation need to be developed. First, standardization would facilitate communication among healthcare providers and enhance continuity of care between healthcare settings.21 Second, as illustrated in Figure 1, electronic records are coded and used for administrative purposes as part of the data management chain; standardized documentation would reduce the burden on health information management caused by coding challenges related to poor-quality EHR documents.53 Third, standardized EHR documentation practice would facilitate data sharing between centers, regions, and countries. A globally used EHR documentation system or software would be ideal.54 However, the results show that EHR adoption depends on the individual needs of each site or department, so a single generalizable system may not be feasible. Nonetheless, future researchers should aim to use the most effective interventions and the most commonly used EHR documents to initiate a standardized reporting process.

CONCLUSION

This systematic review provides an overview of current interventions to improve EHR documentation within an inpatient setting and identifies means by which the quality of EHR documentation could be improved. An analysis of the 24 included studies demonstrated that new EHR documentation systems and education are the most widely used interventions. The large heterogeneity between studies (document type, comparator, participants, interventions, and outcome measures) and the multifactorial study outcomes demonstrate the need for a standardized reporting process applicable to all specialty areas, EHR users, and geographical locations. Such a process could also benefit coded data and, by extension, administrative databases used for research purposes. Furthermore, although this systematic review did not measure patient outcomes, the literature shows that poor documentation can negatively affect continuity of care. Future researchers should aim to implement the most successful interventions presented in this systematic review to improve EHR documentation, which could be the first step toward the development of standardized documentation procedures.

AUTHOR CONTRIBUTIONS

This study was conceptualized by HQ. HLR, PER, LOV, and NW developed the search strategy. LOV and NW completed the search and drafted the protocol. DJN, PER, NI, and HQ critically appraised the protocol and also contributed to its development by revising subsequent versions. LOV and NW contributed equally to the data collection and analysis, as well as the interpretation of the review. All authors critically revised the review, and read and approved the final manuscript.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

Conflict of interest statement

None declared.


REFERENCES

- 1. Maddox T, Matheny M. Natural language processing and the promise of big data. Circ Cardiovasc Qual Outcomes 2015; 8: 463–5.

- 2. Clinical Documentation (Healthcare) - Definition from SearchHealthIT. 2014. https://searchhealthit.techtarget.com/definition/clinical-documentation-healthcare. Accessed March 2019.

- 3. Dhavle AA, Corley ST, Rupp MT. Evaluation of a user guidance reminder to improve the quality of electronic prescription messages. Appl Clin Inform 2014; 5(3): 699–707.

- 4. O'Leary KJ, Liebovitz DM, Feinglass J, et al. Creating a better discharge summary: improvement in quality and timeliness using an electronic discharge summary. J Hosp Med 2009; 4(4): 219–25.

- 5. Hamade N. Improving the use of electronic medical records in primary health care: a systematic review and meta-analysis. Western Graduate and Postdoctoral Studies: Electronic Thesis and Dissertation Repository 2017: 4420. https://ir.lib.uwo.ca/etd/4420. Accessed December 2017.

- 6. Dimick C. Documentation bad habits: shortcuts in electronic records pose risk. J AHIMA 2008; 79(6): 40–3.

- 7. Virginio LA, Ricarte IL. Identification of patient safety risks associated with electronic health records: a software quality perspective. Stud Health Technol Inform 2015; 216: 55–9.

- 8. So L, Beck CA, Brien S, et al. Chart documentation quality and its relationship to the validity of administrative data discharge records. Health Informatics J 2010; 16(2): 101–13.

- 9. Canadian Institute for Health Information. Clinical Administrative Databases Privacy Impact Assessment. 2013. https://www.cihi.ca/en/cad_pia_jan_2013_en.pdf. Accessed April 2, 2019.

- 10. Neri PM, Volk LA, Samaha S, et al. Relationship between documentation method and quality of chronic disease visit notes. Appl Clin Inform 2014; 5(2): 480–90.

- 11. Lorenzetti D, Quan H, Lucyk K, et al. Strategies for improving physician documentation in the emergency department: a systematic review. BMC Emerg Med 2018; 18(1): 36.

- 12. Wang N, Hailey D, Yu P. Quality of nursing documentation and approaches to its evaluation: a mixed-method systematic review. J Adv Nurs 2011; 67(9): 1858–75.

- 13. Arditi C, Rège-Walther M, Durieux P, et al. Computer-generated reminders delivered on paper to healthcare professionals, effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev 2017; 7: CD001175.

- 14. Hyppönen H, Saranto K, Vuokko R, et al. Impacts of structuring the electronic health record: a systematic review protocol and results of previous reviews. Int J Med Inform 2014; 83(3): 159–69.

- 15. Moher D, Shamseer L, Clarke M, et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst Rev 2015; 4(1): 1.

- 16. Cochrane Handbook. Search Strategies for Identifying Randomized Trials in MEDLINE. http://handbook-5-1.cochrane.org/. Accessed October 10, 2017.

- 17. BMJ Clinical Evidence. Study Design Search Filters. http://clinicalevidence.bmj.com/x/set/static/ebm/learn/665076.html. Accessed October 10, 2017.

- 18. PubMed Health. Search Strategies for the Identification of Clinical Studies. https://www.ncbi.nlm.nih.gov/pubmedhealth/PMH0042152/. Accessed October 10, 2017.

- 19. Otero Varela L, Wiebe N, Niven DJ, et al. Evaluation of interventions to improve electronic health record documentation within the inpatient setting: a protocol for a systematic review. Syst Rev 2019; 138: 54.

- 20. Stetson PD, Bakken S, Wrenn JO, et al. Assessing electronic note quality using the Physician Documentation Quality Instrument (PDQI-9). Appl Clin Inform 2012; 3(2): 164–74.

- 21. Coit MH, Katz JT, McMahon GT. The effect of workload reduction on the quality of residents' discharge summaries. J Gen Intern Med 2011; 26(1): 28–32.

- 22. Rao P, Andrei A, Fried A, et al. Assessing quality and efficiency of discharge summaries. Am J Med Qual 2005; 20(6): 337–43.

- 23. Harris P, Taylor R, Thielke R, et al. Research electronic data capture (REDCap): a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 2009; 42(2): 377–81.

- 24. Downs S, Black N. The feasibility of creating a checklist for the assessment of the methodological quality both of randomised and non-randomised studies of health care interventions. J Epidemiol Community Health 1998; 52(6): 377–84.

- 25. Wells GA, Shea B, O'Connell D, et al. The Newcastle-Ottawa Scale (NOS) for Assessing the Quality of Non-Randomised Studies in Meta-Analyses. 2011. http://www.ohri.ca/programs/clinical_epidemiology/oxford.asp. Accessed October 2, 2017.

- 26. Laflamme MR, Dexter PR, Graham MF, et al. Efficiency, comprehensiveness and cost-effectiveness when comparing dictation and electronic templates for operative reports. AMIA Annu Symp Proc 2015; 2015: 425–9.

- 27. Johnson AJ, Chen MY, Swan JS, et al. Cohort study of structured reporting compared with conventional dictation. Radiology 2009; 253(1): 74–80.

- 28. Maslove DM, Leiter RE, Griesman J, et al. Electronic versus dictated hospital discharge summaries: a randomized controlled trial. J Gen Intern Med 2009; 24(9): 995–1001.

- 29. Park J, Pillarisetty VG, Brennan MF, et al. Electronic synoptic operative reporting: assessing the reliability and completeness of synoptic reports for pancreatic resection. J Am Coll Surg 2010; 211(3): 308–15.

- 30. Hoffer DN, Finelli A, Chow R, et al. Structured electronic operative reporting: comparison with dictation in kidney cancer surgery. Int J Med Inform 2012; 81(3): 182–91.

- 31. Maniar RL, Hochman DJ, Wirtzfeld DA, et al. Documentation of quality of care data for colon cancer surgery: comparison of synoptic and dictated operative reports. Ann Surg Oncol 2014; 21(11): 3592–7.

- 32. Maniar RL, Sytnik P, Wirtzfeld DA, et al. Synoptic operative reports enhance documentation of best practices for rectal cancer. J Surg Oncol 2015; 112(5): 555–60.

- 33. Dean SM, Eickhoff JC, Bakel LA. The effectiveness of a bundled intervention to improve resident progress notes in an electronic health record. J Hosp Med 2015; 10(2): 104–7.

- 34. Mehta R, Radhakrishnan NS, Warring CD, et al. The use of evidence-based, problem-oriented templates as a clinical decision support in an inpatient electronic health record system. Appl Clin Inform 2016; 7(3): 790–802.

- 35. Koivikko MP, Kauppinen T, Ahovuo J. Improvement of report workflow and productivity using speech recognition: a follow-up study. J Digit Imaging 2008; 21(4): 378–82.

- 36. Vogel M, Kaisers W, Wassmuth R, et al. Analysis of documentation speed using web-based medical speech recognition technology: randomized controlled trial. J Med Internet Res 2015; 17(11): e247.

- 37. Galanter WL, Hier DB, Jao C, et al. Computerized physician order entry of medications and clinical decision support can improve problem list documentation compliance. Int J Med Inform 2010; 79(5): 332–8.

- 38. Falck S, Adimadhyam S, Meltzer DO, et al. A trial of indication based prescribing of antihypertensive medications during computerized order entry to improve problem list documentation. Int J Med Inform 2013; 82(10): 996–1003.

- 39. Eichholz AC, Van Voorhis BJ, Sorosky JI, et al. Operative note dictation: should it be taught routinely in residency programs? Obstet Gynecol 2004; 103(2): 342–6.

- 40. Bischoff K, Goel A, Hollander H, et al. The Housestaff Incentive Program: improving the timeliness and quality of discharge summaries by engaging residents in quality improvement. BMJ Qual Saf 2013; 22(9): 768–74.

- 41. Russell P, Hewage U, Thompson C. Method for improving the quality of discharge summaries written by a general medical team. Intern Med J 2014; 44(3): 298–301.

- 42. Axon RN, Penney FT, Kyle TR, et al. A hospital discharge summary quality improvement program featuring individual and team-based feedback and academic detailing. Am J Med Sci 2014; 347(6): 472–7.

- 43. Tan B, Mulo B, Skinner M. Discharge documentation improvement project: a pilot study. Intern Med J 2015; 45(12): 1280–5.

- 44. Haberman S, Feldman J, Merhi ZO, et al. Effect of clinical-decision support on documentation compliance in an electronic medical record. Obstet Gynecol 2009; 114(2 Pt 1): 311–7.

- 45. Sandau KE, Sendelbach S, Fletcher L, et al. Computer-assisted interventions to improve QTc documentation in patients receiving QT-prolonging drugs. Am J Crit Care 2015; 24(2): e6–15.

- 46. Vercheval C, Gillet M, Maes N, et al. Quality of documentation on antibiotic therapy in medical records: evaluation of combined interventions in a teaching hospital by repeated point prevalence survey. Eur J Clin Microbiol Infect Dis 2016; 35(9): 1495–500.

- 47. Jakob J, Marenda D, Sold M, et al. Dokumentationsqualität intra- und postoperativer Komplikationen [Documentation quality of intra- and postoperative complications]. Chirurg 2014; 85(8): 705–10.

- 48. Dinescu A, Fernandez H, Ross JS, et al. Audit and feedback: an intervention to improve discharge summary completion. J Hosp Med 2011; 6(1): 28–32.

- 49. Bergkvist A, Midlöv P, Höglund P, et al. Improved quality in the hospital discharge summary reduces medication errors - LIMM: Landskrona Integrated Medicines Management. Eur J Clin Pharmacol 2009; 65: 1037–46.

- 50. Kuhn T, Basch P, Barr M, Yackel T. Clinical documentation in the 21st century: executive summary of a policy position paper from the American College of Physicians. Ann Intern Med 2015; 162(4): 301–3.

- 51. Sulmasy LS, López AM, Horwitch CA. Ethical implications of the electronic health record: in the service of the patient. J Gen Intern Med 2017; 32(8): 935–9.

- 52. Burke HB, Hoang A, Becher D, et al. QNOTE: an instrument for measuring the quality of EHR clinical notes. J Am Med Inform Assoc 2014; 21(5): 910–6.

- 53. Tang KL, Lucyk K, Quan H. Coder perspectives on physician-related barriers to producing high-quality administrative data: a qualitative study. CMAJ Open 2017; 5(3): E617.

- 54. Rijnbeek PR. Converting to a common data model: what is lost in translation?: Commentary on "fidelity assessment of a clinical practice research datalink conversion to the OMOP common data model". Drug Saf 2014; 37(11): 893–6.
