Abstract

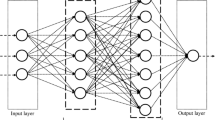

The article discusses the search for a global extremum in the training of artificial neural networks using a correlation indicator. A method is proposed that is based on a mathematical model of an artificial neural network represented as an information transmission system. Because information transmission systems widely use methods that effectively analyze and recover a useful signal against a background of various kinds of interference (Gaussian, concentrated, pulsed, etc.), it is reasonable to assume that a mathematical model of an artificial neural network represented as an information transmission system will also be effective. The article analyzes the convergence of the training and experimentally obtained sequences based on a correlation indicator for a fully connected neural network. The possibility of estimating the convergence of the training and experimentally obtained sequences from the joint correlation function, as a measure of their energy similarity (difference), is confirmed. To evaluate the proposed method, it is compared with currently used indicators. Potential sources of error in the least-squares method, and the ability of the proposed indicator to overcome them, are investigated. Simulation of the learning process of an artificial neural network shows that using the joint correlation function together with the Adadelta optimizer yields a gain in learning speed of 2-3 times compared with CrossEntropyLoss.
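The idea of scoring a network output by its correlation with the training target, rather than by a squared difference, can be illustrated with a minimal sketch. The abstract does not give the article's exact definition of the joint correlation function, so the normalized zero-lag cross-correlation used here is an assumption, and the function names `correlation_indicator`, `correlation_loss`, and `mean_squared_error` are hypothetical. The sample vectors are made up for illustration and are not results from the article.

```python
import math


def correlation_indicator(target, output):
    """Normalized zero-lag cross-correlation of two equal-length sequences.

    Assumed stand-in for the article's joint correlation function:
    the inner product divided by the square root of the product of energies.
    """
    if len(target) != len(output):
        raise ValueError("sequences must have equal length")
    cross = sum(t * o for t, o in zip(target, output))
    energy_t = sum(t * t for t in target)
    energy_o = sum(o * o for o in output)
    if energy_t == 0.0 or energy_o == 0.0:
        return 0.0  # correlation is undefined for a zero-energy sequence
    return cross / math.sqrt(energy_t * energy_o)


def correlation_loss(target, output):
    """Loss that is smallest when the indicator reaches its maximum of 1."""
    return 1.0 - correlation_indicator(target, output)


def mean_squared_error(target, output):
    """Least-squares indicator, shown for comparison."""
    return sum((t - o) ** 2 for t, o in zip(target, output)) / len(target)


# Illustrative one-hot target and two outputs that differ only in scale.
target = [0.0, 0.0, 1.0, 0.0]
output = [0.1, 0.1, 0.7, 0.1]
scaled = [2.0 * o for o in output]

print(correlation_loss(target, output), correlation_loss(target, scaled))
print(mean_squared_error(target, output), mean_squared_error(target, scaled))
```

In this sketch the normalized correlation depends only on the shape of the output, so rescaling the output leaves it unchanged, whereas the squared error changes with the scale. Whether this property accounts for the speed-up reported in the article is not something the abstract states.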

ACKNOWLEDGMENTS

The reported study was funded by RFBR, project number 20-37-70023, and by Russian Federation President Grants MK-341.2019.9 and SP-2236.2018.5.

Cite this article

Vershkov, N., Babenko, M., Kuchukov, V. et al. Search for the Global Extremum Using the Correlation Indicator for Neural Networks Supervised Learning. Program Comput Soft 46, 609–618 (2020). https://doi.org/10.1134/S0361768820080265

DOI: https://doi.org/10.1134/S0361768820080265