Abstract

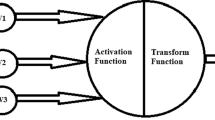

This paper addresses the optimization of training Artificial Neural Networks (ANNs) using a nonlinear trigonometric polynomial function. The proposed method models an ANN as an information transmission system, a setting in which effective signal restoration techniques are widely used. To optimize training, we use energy characteristics, treating the ANN as a data transmission system. We propose a nonlinear layer in the form of a trigonometric polynomial that approximates the “syncular” function, based on the generalized approximation theorem and the wave model. To confirm the theoretical results, we compare the proposed approach with standard ANN implementations that use sigmoid and Rectified Linear Unit (ReLU) activation functions. The experimental evaluation shows that the proposed model matches the accuracy of standard ANNs while reducing the training time in supervised learning.
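As a rough illustration of what a nonlinear layer in the form of a trigonometric polynomial could look like, the sketch below evaluates a generic trigonometric polynomial element-wise, in the way an activation function is applied. The function names, the polynomial degree, and the coefficient values are assumptions chosen for illustration only; they are not the coefficients or the implementation used in the paper.

```python
import math
from typing import List, Sequence


def trig_polynomial(x: float, a: Sequence[float], b: Sequence[float]) -> float:
    """Evaluate a[0] + sum over k >= 1 of (a[k]*cos(k*x) + b[k]*sin(k*x)).

    b[0] is ignored because sin(0 * x) is always zero.
    """
    return a[0] + sum(
        a[k] * math.cos(k * x) + b[k] * math.sin(k * x)
        for k in range(1, len(a))
    )


def trig_layer(inputs: Sequence[float], a: Sequence[float], b: Sequence[float]) -> List[float]:
    """Apply the same trigonometric polynomial to every input element."""
    return [trig_polynomial(x, a, b) for x in inputs]


# Hypothetical coefficients (assumption, not taken from the paper):
# f(x) = 0.5 + 0.5 * cos(x), a smooth bump with its maximum at x = 0.
A = [0.5, 0.5, 0.0]
B = [0.0, 0.0, 0.0]

outputs = trig_layer([0.0, math.pi], A, B)
```

With these illustrative coefficients the layer returns its maximum at zero input and falls to zero at an input of π. In a trainable setting the coefficients would either be fixed in advance or learned together with the network weights; which of these the paper does is not stated in the abstract.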

ACKNOWLEDGMENTS

This work was supported in part by the Russian Science Foundation, project number 19-71-10033.

Cite this article

Vershkov, N., Babenko, M., Tchernykh, A. et al. Optimization of Neural Network Training for Image Recognition Based on Trigonometric Polynomial Approximation. Program Comput Soft 47, 830–838 (2021). https://doi.org/10.1134/S0361768821080272

DOI: https://doi.org/10.1134/S0361768821080272