Abstract

Manual device interaction requires precise coordination, which may be difficult for users with motor impairments. Muscle interfaces provide alternative interaction methods that may enhance performance, but they have not yet been evaluated for simple (e.g., mouse tracking) and complex (e.g., driving) continuous tasks. Control theory enables us to probe continuous task performance by separating user input into intent and error correction, which lets us quantify how motor impairments affect device interaction. We compared the effectiveness of a manual versus a muscle interface for eleven users without and three users with motor impairments performing continuous tasks. Both user groups preferred and performed better with the muscle interface than with the manual interface for the complex continuous task. These results suggest that muscle interfaces, and algorithms that can detect and augment user intent, may be especially useful for the future design of interfaces for continuous tasks.

Keywords: User intent, control theory, interaction, muscle interfaces, electromyography, motor impairments, accessibility, Human-centered computing → User models, User studies

INTRODUCTION

Users predominantly interact with devices using manual interfaces such as mice, touchscreens, steering wheels, and joysticks. However, many of these interfaces may be difficult or impossible to use for individuals with upper-extremity motor impairments after neurologic injury. Such users may have difficulty precisely coordinating arm and hand function to control manual interfaces due to weakness of the arm muscles, spasticity provoking unintended movement, and muscle tightness limiting mobility [18]. The lack of accessibility of manual interfaces for users with motor impairments is well documented [13, 17, 25, 28]. People with neurologic injuries that affect one side of the body, such as stroke or cerebral palsy, tend to use only their unaffected side for device interaction [39]. This leads to slower and more error-prone technology use and increases fatigue [36]. Alternatives that can be personalized and require less strength and coordination could encourage greater use and utility of the affected side. Muscle interfaces are one potential alternative to manual interfaces that may enable users with and without motor impairments to interact effectively and unobtrusively with their devices [32]. The placement of the muscle sensors can be personalized so that users can adapt the interface to their own ability level [40]. Such interfaces may decrease errors, increase use of the affected side, and enhance long-term function.

In this paper, we investigate the performance of a muscle versus a manual interface for continuous trajectory tracking tasks in users with and without motor impairments using modeling techniques from control theory. While other metrics for modeling continuous task performance exist [1, 24], we demonstrate that control theory techniques provide powerful insights not available with other techniques. To the best of our knowledge, there are no methods in human-computer interaction (HCI) that separate and quantify user intent (feedforward control) and error correction (feedback control). Such a separation could be particularly useful for users with motor impairments. Users with motor impairments after neurologic injury often retain the ability to determine the input needed to make a device follow a desired trajectory in the absence of errors. However, they may have difficulty correcting for errors that arise from unexpected disturbances like arm tremor [18] (Fig. 1). We apply techniques from control theory to decode user intent, providing a foundation for future development of HCI algorithms that assist users as they perform continuous tasks like mouse tracking and driving.

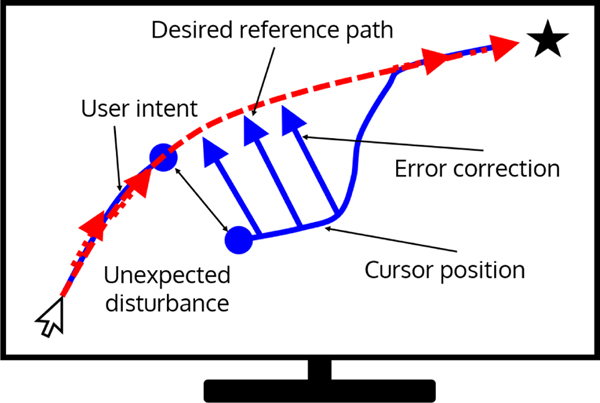

Figure 1.

Successfully completing continuous tasks with manual or muscle interfaces is crucial for many activities, including cursor navigation. While a user may intend to follow a desired reference path (dotted red line) with user intent (dotted red arrows), unexpected disturbances (sudden change in cursor position between the two blue circles) introduce errors that must be corrected through error correction (solid blue arrows). The user input (mouse position) combines user intent and error correction and maps to the cursor position on the screen (solid blue line).

We used frequency-domain analysis to separate and quantify feedforward and feedback control for simple (velocity-based) and complex (acceleration-based) continuous tasks using muscle and manual interfaces in eleven users without motor impairments. We also studied muscle and manual interface performance for the complex task in three participants with motor impairments. We computed two performance metrics: (i) the time-domain error between a desired trajectory and the actual cursor position and (ii) the frequency-domain error between the user’s feedforward controller and the controller required to perfectly follow a reference in the absence of errors.

The contributions of this paper are threefold:

C1 extend control theory-based quantitative modeling techniques that separate user intent (feedforward control) and error correction (feedback control) to muscle interfaces;

C2 experimentally compare muscle versus manual interface performance for simple (velocity-based) and complex (acceleration-based) continuous trajectory tracking tasks;

C3 conduct preliminary evaluations of muscle versus manual interface performance for a complex continuous task for users with motor impairments.

We report two key experimental findings:

F1 users without motor impairments were 49% better at tracking continuous trajectories using the muscle than the manual interface for the complex continuous task;

F2 users without motor impairments were 61% better at tracking high frequencies (above 0.35 Hz) with the muscle versus the manual interface.

Our paper proposes and extends an experimental and analytical method to guide future development of accessible interfaces like muscle interfaces using control theory. The results demonstrate the feasibility of using methods from control theory to inform future interface design and develop assistive algorithms to aid users with motor impairments in achieving desired tasks.

RELATED WORK

Accessible Interfaces for Users With Motor Impairments

Despite technological advancements in personal computing, device accessibility for users with motor impairments and alternate abilities remains a challenge [40]. Researchers have demonstrated how ability-based assumptions underlying traditional interfaces such as touchscreens [13, 17, 37] and mice [13] are inappropriate for users with motor impairments, and how these assumptions can be modified to encompass users of all abilities. Other researchers have used artificial intelligence to adapt current interfaces such as touchscreens [28, 46] and screen layouts for use with a mouse [15] so that they take into account each user’s ability level. Researchers have also modeled stroke gestures on touchscreens for users with motor impairments [38].

We are interested in whether alternative interfaces could provide performance advantages for continuous tasks. Although it is crucial to understand how traditional interfaces can be adapted for users with motor impairments, novel interfaces like smart watches [25] and headsets [26] are quickly being developed for commercial use. Understanding whether muscle interfaces provide performance benefits for users of all abilities is important for encouraging development of muscle interfaces.

Electromyography as Non-Invasive Muscle Sensors

Although muscle interfaces have gained popularity in research as a hands-free interaction method, muscle electrical signals are most often used in clinical research to quantitatively assess impairment level, track progress, and evaluate clinical interventions for various clinical populations [5, 8, 35]. Electromyography (EMG) sensors are commonly used in these settings to noninvasively measure muscle electrical activity from the skin surface. Dry or wet electrodes passively measure these electrical signals, which can then be relayed to a computing unit for analysis. EMG technology is still limited to short-term use due to low battery life, bulky form factor, high cost, and lack of comfort [4, 11, 29]. Researchers are currently addressing these limitations by developing novel electrodes and hardware for long-term EMG use [30, 42, 43].

Electromyography in Human-Computer Interaction

Muscle interfaces for HCI have mainly focused on gesture classification tasks for hands-free device use by users without motor impairments. Such interfaces have been shown to achieve high gesture classification accuracy even when hands are occupied with other objects [32, 33], EMG signals are weak [22], consumer-level EMG sensors are used [19], and a large number of gestures are attempted [2]. These studies demonstrate that users without motor impairments can successfully use muscle interfaces to reliably perform discrete tasks like tapping and swiping.

Work on enabling discrete interactions with EMG data is crucial for the adoption of muscle interfaces into everyday life, but little work has studied the use of muscle interfaces for continuous tasks or for users with upper-extremity motor impairments. In addition, prior research on gesture classification with EMG data demonstrates the strength of muscle interfaces in scenarios where manual interfaces would be difficult to use, but it has not directly compared the performance of muscle and manual interfaces.

Continuous Control Using Muscle Interfaces

Prior work on continuous muscle interfaces has focused on measuring EMG signals from the residual muscles of amputees for prosthetic control. EMG control is desirable for prosthesis users because it requires minimal effort, allows intuitive device manipulation, and is noninvasive [10, 34]. In this application, EMG signals are measured from the user and fed into a proportional controller to manipulate the position, speed, or acceleration of the prosthesis [14].

Preliminary research on continuous muscle interfaces for prosthetic control is limited and compares manual and muscle interfaces for simple tasks that map the user input to the position or velocity of a cursor on a screen. Researchers [7, 23] performed investigations where they compared force-based, EMG-based, and position-based interfaces for controlling position and velocity of a cursor. They demonstrated that users tracked a desired reference more accurately with force-based and position-based interfaces. However, they also found that users could track higher frequency signals with the muscle interface than with the manual interfaces.

Our study builds on this work by using metrics from control theory to compare simple and complex task performance for users with and without motor impairments. Understanding tradeoffs between muscle and manual interface performance for simple and complex tasks may lead to greater incorporation of muscle interfaces that are more accurate, easier to use, and encourage muscle use in users with motor impairments.

Feedforward Controller Formulation for Manual Interfaces

An emerging technique for modeling continuous interactions between humans and devices is to use control theory to separate user input into a feedforward component that expresses the intended output and a feedback component that corrects for errors [9, 27, 31, 41, 44, 45]. The feedforward component can serve as a performance metric that indicates whether the user has learned how their input maps to the device output in the absence of errors (Fig. 1). Control theory provides established frequency-domain techniques for separating and quantifying feedforward and feedback controllers for users without motor impairments. In the 1960s, McRuer et al. [27] used trajectory tracking data collected from pilots to demonstrate the feasibility of estimating a user’s feedforward and feedback controllers from data. More recent work focuses on quantifying user performance using the estimated feedforward controller. Researchers demonstrated that users without motor impairments using a manual interface develop good feedforward controllers for predictable [9, 31, 45] and unpredictable [41, 44] trajectories. Researchers also demonstrated that users’ feedforward controllers improve as users gain experience performing a trajectory tracking task [45].

Our study extends the control theory-based experimental methods and analyses previously used to study how users without motor impairments use manual interfaces. Our paper focuses on how users with and without motor impairments use muscle interfaces. Understanding how feedforward and feedback controllers are affected by alternative interfaces and motor impairments is crucial for improving device interaction for all users.

BACKGROUND

What is a Continuous Task?

Continuous tasks can range from simple to complex. We define simple tasks as position-based (e.g., mouse tracking, where the position of the mouse determines the cursor position) or velocity-based (e.g., wheelchair navigation, where the joystick position determines the velocity of the wheelchair). We define complex tasks as acceleration-based (e.g., automobile or robot control, where the user input determines the acceleration of the mechanical system). Mathematically, the increase in task complexity arises from the increased number of derivatives that relate the user input to the device output. Complex tasks therefore require more abstraction (more derivatives) for the user to determine the input needed to produce the desired device output.
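The difference between simple and complex tasks can be made concrete with a small simulation. The sketch below assumes forward-Euler integration at the 60 Hz cursor update rate and uses a hypothetical helper, `simulate_cursor`, to map a sampled user input to cursor position for position-, velocity-, and acceleration-based tasks. It also evaluates the frequency response M(jω) = 1/(jω)^n of an n-fold integrator, the standard frequency-domain form of n derivatives between input and output; it is an illustration, not a model fitted to our data.

```python
import numpy as np

DT = 1.0 / 60.0  # cursor update period in seconds (60 Hz)


def simulate_cursor(user_input, order, dt=DT):
    """Return cursor positions for a task of the given order.

    order 0: input sets position (e.g., mouse tracking)
    order 1: input sets velocity (simple task)
    order 2: input sets acceleration (complex task)
    Forward-Euler integration is an illustrative assumption.
    """
    if order not in (0, 1, 2):
        raise ValueError("order must be 0, 1, or 2")
    u = np.asarray(user_input, dtype=float)
    if order == 0:
        return u.copy()
    position = np.zeros_like(u)
    velocity = np.zeros_like(u)
    for k in range(1, len(u)):
        if order == 1:
            position[k] = position[k - 1] + dt * u[k - 1]
        else:
            velocity[k] = velocity[k - 1] + dt * u[k - 1]
            position[k] = position[k - 1] + dt * velocity[k - 1]
    return position


def device_response(order, freq_hz):
    """Frequency response M(jw) = 1 / (jw)**order of an order-fold integrator."""
    jw = 1j * 2.0 * np.pi * np.asarray(freq_hz, dtype=float)
    return 1.0 / jw**order


# A constant input held for one second (61 samples at 60 Hz).
step = np.ones(61)
after_1s_velocity = simulate_cursor(step, 1)[-1]      # about 1.0 unit
after_1s_acceleration = simulate_cursor(step, 2)[-1]  # about 0.49 units
gain_at_half_hz = abs(device_response(2, 0.5))        # 1/pi**2 for the complex task
```

The same constant input moves the cursor steadily in the velocity task but produces an accelerating drift in the acceleration task, and the acceleration task's gain falls off as 1/ω², which is why the user must plan further ahead to produce a desired output.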

In continuous tasks, the user input is theorized to be a combination of (i) user intent (feedforward control; the input that yields the desired device output in the absence of any errors) and (ii) error correction (feedback control; the input that corrects for errors arising from unexpected perturbations, inappropriate inputs (e.g., due to motor impairments), or unexpected changes in the task) [16]. In this paper, the terms controller and control refer to the process by which the user determines their input in response to device output. Mathematically, a controller is a function that transforms time- and/or frequency-domain signals. Taking mouse tracking as an example of a continuous task, user intent could express the user’s desire to move the cursor along a specific path, while error correction could compensate for deviations from the intended path caused by unintentional tremor of the user’s arm (Fig. 1).

Neurologic injuries like stroke or cerebral palsy that result in motor impairments usually do not affect the cerebellum, where user intent (feedforward control) is formed [6]. Instead, the injury usually occurs in the motor cortex, which coordinates and transmits signals to the arm muscles [18]. Injury to the motor cortex can cause errors between the user’s intended motion and the execution of that motion (e.g., unintentional arm tremor), making error correction (feedback control) difficult. Thus, we hypothesize that neurologic injury may impair feedback but not feedforward elements of user input. To test this hypothesis, we separately quantify user intent and error correction in continuous tasks for users with and without motor impairments using frequency-domain techniques from control theory. These techniques have previously been applied to participants without motor impairments using manual interfaces [27, 41, 44, 45].

This study extends the applicability of these tools to include simple and complex tasks using muscle interfaces and users with motor impairments after neurologic injury (post-stroke).

Decoding User Intent with Control Theory

Control theory is an engineering discipline that provides techniques for modeling and manipulating the dynamics of systems like humans and devices working together to achieve a task that changes over time [3]. In the context of the present study, we argue that control theory provides powerful estimation techniques that enable us to separate and quantify user intent and error correction during continuous interactions between users and devices.

Decoding user intent is challenging for continuous tasks since both components of the user input (intent and error correction) are intertwined in time-domain measurements. Control theory techniques based on frequency-domain analysis enable us to separate user intent and error correction for continuous tasks.

Previous studies have demonstrated that data collected from users performing continuous trajectory tracking tasks can be modeled as a function of prescribed signals and dynamics (reference trajectory R, disturbance signal D, task dynamics M), computed controllers (the user’s feedforward F and feedback B controllers), and measured signals (device output Y, user input U) (Fig. 2i) [27, 41, 44, 45]. Consider a real-world example such as a user navigating a cursor from one corner of a computer screen to another using a mouse, as in Fig. 1. The reference signal R is the path that the user wants to follow (such as clicking and dragging the cursor along a specific path to draw a curve). The disturbance signal D consists of unpredictable changes that deviate the device output from the intended path; these arise from perturbations, inappropriate inputs (e.g., due to motor impairments), or unexpected changes in the task (such as a cat bumping the user’s hand on the mouse). The device dynamics M is the mapping that transforms the (possibly disturbed) mouse position U + D into the cursor position on the screen Y. To follow a reference path R, users can employ their feedforward controller F to predict the mouse input necessary to produce the desired cursor path Y. However, the effects of unintended disturbances D must be corrected by the user’s feedback controller B, which aims to minimize the error between where the cursor currently is and where the user wants it to be along the reference path.

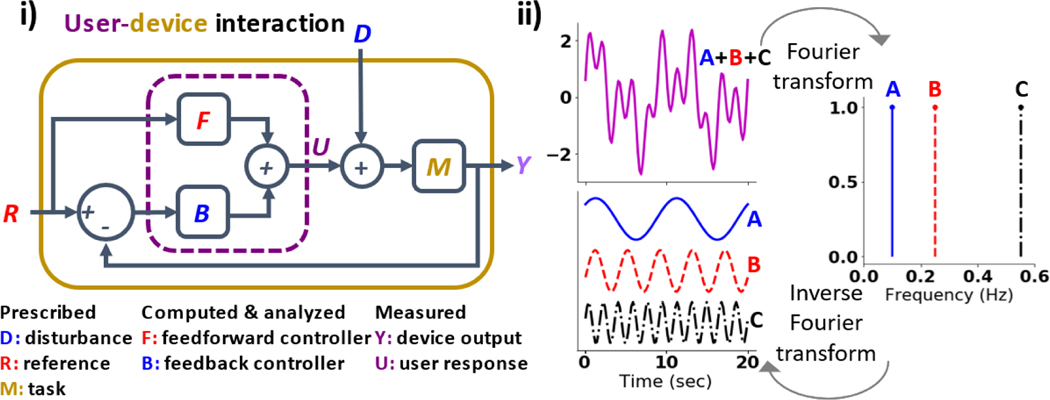

Figure 2.

(i) Block diagram representation of a user interacting with a device, adapted from [27]. The user, contained within the purple dotted square, transforms the external reference R and tracking error R − Y through feedforward (user intent, F in red) and feedback (error correction, B in blue) controllers to produce user input U. The device transforms the sum of user input U and external disturbance D into device output Y via mapping M. (ii) Signals in the time domain (left top graph) can be considered as a sum of many sine waves at different frequencies (left bottom graph). These sinusoidal waves can be difficult to separate in the time domain (left) but easy to separate in the frequency domain (right). The Fourier transform is used to convert time-domain signals to frequency-domain signals. It is a linear operator that represents time-domain signals as a linear combination of sinusoids [3].

In control theory, the block diagram in Fig. 2i is a precise specification of mathematical transformations that relate time-domain or frequency-domain signals (represented by arrows). For the problem formulation specified in Fig. 2i, the user input U is determined by feedforward F and feedback B controllers as well as prescribed disturbance D and reference R signals and task dynamics M as:

$$U = \frac{F + B}{1 + BM}\,R \;-\; \frac{BM}{1 + BM}\,D \qquad (1)$$

Control theory provides a mathematical framework for analyzing time-varying signals. Continuous signals have both time-domain and frequency-domain representations. In the time domain, signals like the position of a mouse are represented as a function of time. In the frequency domain, the same position signals are represented as a linear combination of sinusoids at different frequencies (Fig. 2ii). Consider the sound of a 100 Hz tuning fork. In the time domain, it is a sinusoidal wave with magnitude A that oscillates at 100 Hz. In the frequency domain, it is a single component with magnitude A at one frequency (100 Hz). Frequency-domain analysis is useful for continuous HCI tasks like mouse or vehicle navigation because the user’s response to stimuli can be analyzed independently at each frequency [41]. It is also useful in carefully designed experiments, because signals presented at distinct frequencies can be separated cleanly in the frequency domain even when they overlap in the time domain.
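The separation described above can be illustrated with NumPy’s FFT. The toy sketch below is not our analysis code: it builds a signal from two sinusoids (amplitude 2.0 at 0.15 Hz and amplitude 1.0 at 0.35 Hz), samples it at 60 Hz for 40 s so that each frequency completes a whole number of cycles, and reads each amplitude off a single frequency bin. The helper name `amplitude_spectrum` and the chosen frequencies are assumptions for illustration.

```python
import numpy as np

FS = 60.0        # sampling rate in Hz (matches the 60 Hz cursor update)
DURATION = 40.0  # seconds; 0.15 Hz and 0.35 Hz both complete whole cycles


def amplitude_spectrum(x, fs):
    """One-sided amplitude spectrum: frequencies (Hz) and sinusoid amplitudes."""
    n = len(x)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    amps = 2.0 * np.abs(np.fft.rfft(x)) / n
    return freqs, amps


t = np.arange(int(FS * DURATION)) / FS
signal = 2.0 * np.sin(2 * np.pi * 0.15 * t) + 1.0 * np.sin(2 * np.pi * 0.35 * t + 0.7)

freqs, amps = amplitude_spectrum(signal, FS)
for f in (0.15, 0.25, 0.35):
    k = int(np.argmin(np.abs(freqs - f)))
    print(f"{freqs[k]:.3f} Hz -> amplitude {amps[k]:.3f}")
```

In the time domain the two components are mixed in one trace, but in the frequency domain they appear as isolated peaks of height 2.0 and 1.0, while the 0.25 Hz bin, which carries no stimulus, stays at essentially zero.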

In this research, we leverage the latter advantage to design experiments that separately quantify feedforward and feedback control [41, 44]. Eq. 1 shows that if we isolate the effects of the reference and disturbance stimuli on the user input, we can compute the feedforward and feedback controllers. This is difficult in the time domain because the reference and disturbance signals are shown to the user concurrently. However, by designing our experiments so that the reference and disturbance signals occupy different frequencies, we can isolate the effect of each signal on the user input in the frequency domain (Fig. 2). Eq. 1 can then be manipulated algebraically to estimate the user’s feedback (B) and feedforward (F) controllers at each frequency of interest:

$$B = -\frac{T_{UD}}{M\,(1 + T_{UD})}, \qquad F = T_{UR}\,(1 + BM) - B \qquad (2)$$

where $T_{UR} = (F + B)/(1 + BM)$ and $T_{UD} = -BM/(1 + BM)$ are the transformations from reference R and disturbance D to user input U, each estimated from data as the ratio of the Fourier coefficients of U to those of R or D at the corresponding frequencies.
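The algebra in Eq. 2 can be checked numerically. The sketch below assumes hypothetical "true" controllers for an acceleration-based task (M = 1/(jω)²): an ideal feedforward controller F = M⁻¹ and a feedback controller B with made-up proportional and derivative gains. It computes the transformations from R and D to U implied by the block diagram in Fig. 2i, inverts them to recover F and B, and also shows how one such transformation could be estimated from data as a ratio of Fourier coefficients at a stimulus frequency. All function names and gains are illustrative.

```python
import numpy as np


def closed_loop_transforms(F, B, M):
    """T_UR and T_UD implied by U = F*R + B*(R - Y) and Y = M*(U + D)."""
    T_UR = (F + B) / (1 + B * M)
    T_UD = -(B * M) / (1 + B * M)
    return T_UR, T_UD


def estimate_controllers(T_UR, T_UD, M):
    """Invert the transforms: B = -T_UD / (M (1 + T_UD)), F = T_UR (1 + B M) - B."""
    B = -T_UD / (M * (1 + T_UD))
    F = T_UR * (1 + B * M) - B
    return F, B


def empirical_transform(u, x, fs, freq_hz):
    """Ratio of the Fourier coefficients of output u and input x at one frequency."""
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    k = int(np.argmin(np.abs(freqs - freq_hz)))
    return np.fft.rfft(u)[k] / np.fft.rfft(x)[k]


freqs_hz = np.array([0.10, 0.35, 0.60, 0.85])
jw = 1j * 2 * np.pi * freqs_hz
M = 1.0 / jw**2               # acceleration-based (complex) task
F_true = 1.0 / M              # hypothetical ideal feedforward: inverse dynamics
B_true = 2.0 + 0.8 * jw       # hypothetical proportional-derivative feedback

T_UR, T_UD = closed_loop_transforms(F_true, B_true, M)
F_est, B_est = estimate_controllers(T_UR, T_UD, M)
print(np.allclose(F_est, F_true), np.allclose(B_est, B_true))
```

Because the inversion is exact algebra, any error in the recovered F and B in practice comes from noise in the empirically estimated transformations, not from the formulas themselves.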

Frequency-domain analysis enables us to monitor, model, and predict a user’s feedforward and feedback controllers. This is particularly powerful in the context of decoding user intent for users with motor impairments. Separating feedforward and feedback contributions to the user input allows us to predict how a user will respond to a given reference or disturbance. Given an estimate of the user’s feedforward controller F, we can predict the user’s intended input in the absence of disturbances for a given reference trajectory R by applying transformation F to signal R. From there, algorithms can monitor and correct for errors arising from motor impairments between the planned input and the actual input to the device.
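As a sketch of this prediction step, the code below applies a feedforward controller to a reference in the frequency domain and transforms the result back to the time domain. For illustration it assumes the ideal feedforward controller for an acceleration-based task, F(jω) = (jω)², evaluated at every FFT bin; in practice F is only estimated at the stimulus frequencies, so a real assistive algorithm would need an interpolated or parametric model of F. The function names, the tremor-like error, and the `deviation` signal are hypothetical.

```python
import numpy as np


def predict_intended_input(reference, fs, F_of_freq):
    """Apply feedforward controller F (a function of frequency in Hz) to reference R."""
    n = len(reference)
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    R_hat = np.fft.rfft(reference)
    U_ff_hat = F_of_freq(freqs) * R_hat   # F(jw) R(jw) at each frequency bin
    return np.fft.irfft(U_ff_hat, n=n)


def ideal_feedforward_acceleration(freq_hz):
    """Inverse of M = 1/(jw)^2: the input that tracks R exactly without errors."""
    return (1j * 2 * np.pi * freq_hz) ** 2


fs = 60.0
t = np.arange(2400) / fs                      # 40 s at 60 Hz
reference = np.sin(2 * np.pi * 0.25 * t)
u_intended = predict_intended_input(reference, fs, ideal_feedforward_acceleration)

# Hypothetical measured input: intended input plus a tremor-like 0.85 Hz error.
u_measured = u_intended + 0.3 * np.sin(2 * np.pi * 0.85 * t)
deviation = u_measured - u_intended           # candidate signal for an assistive algorithm
```

For a pure sinusoidal reference, the ideal acceleration-task feedforward is simply the reference’s second derivative, so `u_intended` equals −(2π·0.25)² times the reference.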

METHODS

Experimental Design

We manipulated the following conditions: interface (muscle versus manual), task (simple versus complex), and population (with versus without motor impairments). We conducted two types of experiments. First, we ran a 2 × 2 factorial design study (interface: muscle versus manual; task: simple versus complex) with eleven participants without motor impairments. Second, we conducted a case series study with three participants with motor impairments after stroke, compared muscle versus manual interface performance for the complex task, and compared these results against those of the participants without motor impairments. To shorten the study and avoid fatigue, we only collected data for the complex task for participants with motor impairments. The order of presentation of the conditions was randomized for each participant.

Participants

We recruited eleven participants without motor impairments for this study from the broader community (4 female, 7 male; 1 left-handed, 10 right-handed; age: 25 ± 3.7 years; height: 171 ± 11.3 cm; weight: 68 ± 10.5 kg). All were daily computer users and played video games monthly or yearly. Six participants were familiar with the concept of EMG signals, and one participant regularly worked with EMG signals.

We also recruited three participants who had a stroke that affected one side of their body from clinics and local stroke survivor support groups (Table 1). P1 and P2 predominantly used their unaffected arm for activities of daily living, including using a computer or phone. As shown by the self-reported impairments, P3 had fairly good control over her affected arm and used her affected side for mouse navigation and writing. However, when using a computer she used her affected side only for the mouse and typed solely with her unaffected side. Potential participants were asked whether they could touch their shoulder and move their arm back, as a measure of biceps and triceps control.

Table 1.

Participant Characteristics

| Participant | Age (yrs) | Sex | Yrs since stroke | Affected side | No. of self-reported impairments (of 11) |
|---|---|---|---|---|---|
| P1 | 48 | M | 2 | L | 9 |
| P2 | 47 | M | 11 | R | 10 |
| P3 | 51 | F | 6 | R | 4 |

Self-reported impairments were surveyed across 11 categories: slow movements (Mo), spasm (Sp), low strength (St), tremor (Tr), poor coordination (Co), rapid fatigue (Fa), difficulty gripping (Gr), difficulty holding (Ho), lack of sensation (Se), difficulty controlling direction (Dir), and difficulty controlling distance (Dis). Self-reported impairments adopted from Findlater et al. [12] and Mott et al. [28].

Task

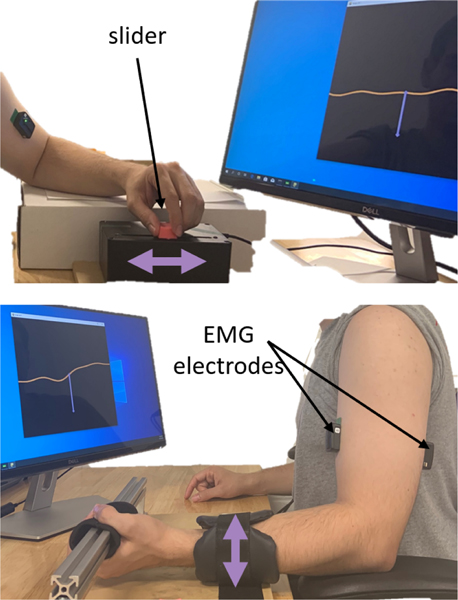

Participants used their muscles or a slider to control a cursor on a screen to track a yellow trajectory (Fig. 3). Since one goal of this work is to encourage bilateral device interaction, participants without motor impairments used their non-dominant arm and participants with motor impairments used their affected arm to complete the tasks. When using their muscles, participants were strapped into a padded rigid device with their palms facing up (Fig. 3 bottom). Participants moved the cursor up by pulling up against the rigid device to activate the biceps, and moved the cursor down by pushing down into the rigid device to activate the triceps. In a pilot study with participants without motor impairments, we found that participants moved the slider in many different ways, from flicking the slider to using their whole arm. To standardize how participants moved the slider, we asked them to rest their elbow on a hard surface and move the slider with their biceps and triceps (Fig. 3 top).

Figure 3.

Participants controlled a purple cursor on a computer screen using either a manual slider (top) or muscle EMG (bottom) interface.

The user input was mapped to either the velocity (simple task) or the acceleration (complex task) of the cursor. Participants without motor impairments performed 30 trials per condition, each 45 seconds long. At the end of each trial, the error between the reference trajectory and the cursor position was displayed as a scaled number between 0 and 100%, and participants were asked to make this number as small as possible. Participants with and without motor impairments were strongly encouraged to take breaks between trials and between conditions and were reminded that they were free to stop the experiment at any time. A reset screen, during which participants could take a break, was shown between consecutive 45-second trials. Participants with motor impairments performed at least 20 trials per condition, depending on fatigue. Observed clonus or spasticity occurring more than once per 45-second trial was also treated as a sign of muscle fatigue and used to decide when to take a break or stop the experiment.

After each condition, participants filled out the NASA Task Load Index (TLX) [20] to subjectively quantify the difficulty of completing the trajectory tracking task across six different categories – mental demand, physical demand, temporal demand, performance, effort, and frustration. The NASA TLX rates the workload of a task from 0 (low workload) to 100 (high workload). At the end of the experiment, we asked participants whether they preferred the muscle or slider interface.

Game Development

The experiment was described to participants as a trajectory tracking game (Fig. 3). Participants were asked to control a purple diamond cursor on the screen using a slider or their muscles. The cursor was restricted to motion in one dimension (up or down). Participants controlled the cursor either by moving a manual interface (slider) toward or away from the body, or by activating the muscle interface by pulling up or pushing down against a rigid device.

The trajectory tracking task was visualized using pygame 1.9.4 in Python 3.5. We generated pseudorandom references and disturbances as randomly phase-shifted sums of sines at eight fixed frequencies between 0.1 and 0.95 Hz with fixed amplitudes (Fig. 2ii). Researchers previously found that frequencies much higher than 1 Hz are difficult to track in the context of this experiment [27, 41, 44]. The cursor position on the screen was updated from the user input at 60 Hz, the refresh rate of a standard computer screen. This game was adapted from work by McRuer et al. [27] and, more recently, [41, 44].
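A sketch of how such pseudorandom stimuli could be generated is shown below. The specific frequencies, the split of the eight frequencies between reference and disturbance, the unit amplitudes, and the 40 s duration are hypothetical choices for illustration. Choosing integer multiples of 0.05 Hz means that over any whole number of 20 s periods each component lands exactly on an FFT bin, so the reference and disturbance can be separated without spectral leakage; randomizing the phases makes the summed trajectory hard to predict while leaving its frequency content unchanged.

```python
import numpy as np

FS = 60.0  # cursor update rate (Hz)

# Hypothetical frequencies (integer multiples of 0.05 Hz between 0.1 and 0.95 Hz),
# interleaved so the reference and disturbance never share a frequency.
REF_FREQS = np.array([0.10, 0.35, 0.60, 0.85])
DIST_FREQS = np.array([0.20, 0.45, 0.70, 0.95])


def sum_of_sines(freqs, amps, duration_s, fs=FS, rng=None):
    """Sum of sinusoids with fixed frequencies/amplitudes and random phases."""
    rng = np.random.default_rng() if rng is None else rng
    t = np.arange(int(round(duration_s * fs))) / fs
    phases = rng.uniform(0.0, 2.0 * np.pi, size=len(freqs))
    components = np.asarray(amps)[:, None] * np.sin(
        2.0 * np.pi * np.asarray(freqs)[:, None] * t[None, :] + phases[:, None]
    )
    return t, components.sum(axis=0)


rng = np.random.default_rng(seed=0)
t, reference = sum_of_sines(REF_FREQS, np.ones(4), 40.0, rng=rng)
_, disturbance = sum_of_sines(DIST_FREQS, np.ones(4), 40.0, rng=rng)
```

With a new random seed per trial, each trial presents a different-looking trajectory, yet the analysis can always read the user’s response to R and D from their own, non-overlapping frequency bins.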

Muscle Interface Development

We used the Delsys Trigno EMG System (Delsys Inc., Massachusetts, USA) to collect EMG activity from the biceps and triceps of our participants. The Delsys sensor is a wireless dry electrode commonly used in clinical settings that collects EMG data at 1926 Hz. The electrodes were placed on the biceps and triceps according to the Surface Electromyography for the Non-Invasive Assessment of Muscles (SENIAM) [21] guidelines. The Delsys software development kit was used to import raw EMG signals from the Delsys unit into Python for further processing.

EMG values were normalized by calibrating the EMG activity against participants’ maximum voluntary contraction. At the beginning of the trial, we asked each participant to flex their biceps or triceps as hard as they could three times, each for two seconds, while secured by the rigid device or by a researcher. The 95th percentile of the EMG data collected was saved for each 2-second trial, and the average of the three trials was saved as the maximum contraction.
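The calibration step could be sketched as follows. We assume the input to the hypothetical helper `max_contraction` is the processed EMG activity recorded during each 2-second maximal contraction. Taking the 95th percentile of each trial rather than its absolute peak makes the estimate less sensitive to isolated spikes, and the three per-trial values are then averaged.

```python
import numpy as np


def max_contraction(trials, percentile=95.0):
    """Average of per-trial percentiles of EMG activity from maximal contractions."""
    per_trial = [np.percentile(np.asarray(trial, dtype=float), percentile) for trial in trials]
    return float(np.mean(per_trial))


def normalize(activity, mvc):
    """Express EMG activity as a fraction of the maximum contraction."""
    return np.asarray(activity, dtype=float) / mvc


# Three hypothetical 2-second trials of processed biceps activity.
trials = [np.arange(101.0), 2 * np.arange(101.0), 3 * np.arange(101.0)]
mvc_biceps = max_contraction(trials)
```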

Raw EMG signals were processed similarly to [7, 23]. EMG signals were filtered by processing 100 ms of EMG data at a time. Each 100 ms window was further split into two 50 ms windows, which were delinearized before taking the average of the two windows. We then scaled the filtered EMG activity by the maximum contraction value for each muscle. If the scaled user input for both the biceps and triceps was below a specified threshold (2.5% of the maximum contraction for participants without motor impairments), the user input was set to zero. This ensured that participants could reach zero despite minor fluctuations in the EMG signal from measurement noise. Otherwise, the muscle with the larger scaled value determined the user input: if the biceps had a larger scaled value than the triceps, the cursor moved up, and if the triceps had a larger scaled value than the biceps, the cursor moved down.
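The per-window logic above could be sketched as follows. The term "delinearized" is not defined further here, so this sketch assumes it means full-wave rectification (absolute value), which is what makes averaging a zero-mean EMG signal meaningful. The tie-breaking rule (equal scaled values go to the biceps) is also our assumption, and all function names are hypothetical.

```python
import numpy as np


def window_activation(raw_window):
    """Collapse one ~100 ms raw EMG window into a single activation value.

    Assumption: 'delinearized' is read here as full-wave rectification
    (absolute value). The window is split into two ~50 ms halves, each half
    is averaged, and the two half-window means are averaged.
    """
    rectified = np.abs(np.asarray(raw_window, dtype=float))
    halves = np.array_split(rectified, 2)
    return float(np.mean([half.mean() for half in halves]))


def emg_user_input(biceps_raw, triceps_raw, mvc_biceps, mvc_triceps, threshold=0.025):
    """Signed user input: positive moves the cursor up, negative moves it down.

    threshold is a fraction of maximum contraction (2.5% for participants
    without motor impairments; up to 12% was used for participants after stroke).
    """
    b = window_activation(biceps_raw) / mvc_biceps
    t = window_activation(triceps_raw) / mvc_triceps
    if b < threshold and t < threshold:
        return 0.0            # both muscles near rest: treat as zero input
    # Ties go to the biceps here; this tie-breaking rule is an assumption.
    return b if b >= t else -t


n = 193  # about 100 ms of samples at roughly 1926 Hz
rest = emg_user_input(np.full(n, 0.01), np.full(n, -0.01), 1.0, 1.0)
pull = emg_user_input(np.full(n, -0.3), np.full(n, 0.1), 1.0, 1.0)
push = emg_user_input(np.full(n, 0.1), np.full(n, 0.4), 1.0, 1.0)
```

Returning a single signed value keeps the downstream game logic identical for the slider and the muscle interface: only the source of the number fed into the velocity or acceleration mapping changes.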

None of the three participants who had a stroke could relax their muscles sufficiently to obtain a zero user input with the 2.5% threshold, due to their weaker maximum contractions. For these participants, the zero-input threshold for the muscle interface was raised to at most 12% of the maximum contraction, depending on the level of EMG activity we observed at rest.

Slider Interface Development

Participants manipulated a custom slider connected to a 10 kΩ potentiometer. An Arduino Due (Arduino.cc) was used to measure and import the potentiometer values into Python for further processing. The slider was 35 mm wide × 12 mm tall × 22 mm deep and printed with a 3D printer using ABS filament. Pushing the slider required very little strength, similar to pushing a pen across a table.

Data Analysis

User input from either the muscle or manual interface, the reference and disturbance trajectories, and the position of the cursor on the screen were collected at 60 Hz. Collected data were analyzed in Python 3.5. To quantify user performance taking into account both user intent and error correction, we compute the mean-square error (MSE) between the prescribed reference R and the measured cursor position Y over time t:

$$\mathrm{MSE}_{\text{time}} = \frac{1}{N}\sum_{t=1}^{N} \big(R(t) - Y(t)\big)^2 \qquad (3)$$

where N is the number of samples in a trial.
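Eq. 3 amounts to a one-line computation on the logged 60 Hz samples. The sketch below assumes the reference and cursor position for one trial are stored as equally long arrays; `time_domain_mse` is a hypothetical helper name, and the lagging cursor is a made-up example.

```python
import numpy as np


def time_domain_mse(reference, cursor):
    """Mean-square error between reference R(t) and cursor position Y(t) over one trial."""
    r = np.asarray(reference, dtype=float)
    y = np.asarray(cursor, dtype=float)
    if r.shape != y.shape:
        raise ValueError("reference and cursor must have the same length")
    return float(np.mean((r - y) ** 2))


# A hypothetical cursor that lags the reference by 0.1 s (6 samples at 60 Hz).
fs = 60.0
t = np.arange(2400) / fs
reference = np.sin(2 * np.pi * 0.25 * t)
cursor = np.sin(2 * np.pi * 0.25 * (t - 0.1))
error = time_domain_mse(reference, cursor)
```

Because this metric mixes intent and error correction, a user with a perfect plan but poor error correction and a user with a poor plan can obtain similar values, which motivates the frequency-domain metric that follows.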

To quantify user performance solely taking intent into account and ignoring difficulties with error correction arising from motor impairments, we compute the MSE between the inverse of the device dynamics M−1 and the estimated feedforward controller F over frequencies w:

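$$\mathrm{MSE}_{\text{freq}} = \frac{1}{|\Omega|}\sum_{\omega \in \Omega} \big|M^{-1}(j\omega) - F(j\omega)\big|^{2}, \qquad (4)$$

where Ω is the set of stimulus frequencies and j is the imaginary unit.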
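The frequency-domain error compares the estimated feedforward controller F with the inverse of the device dynamics, so a controller that exactly inverts the dynamics yields zero error. The sketch below assumes F has already been estimated as complex frequency-response values at the stimulus frequencies (that estimation is not shown) and, for illustration only, models the simple and complex tasks as a single integrator M(s) = 1/s and a double integrator M(s) = 1/s², matching their velocity- and acceleration-based descriptions; the study's actual device models may include additional terms. Frequencies are given in Hz and converted to ω = 2πf. Function names are hypothetical.

```python
import numpy as np


def inverse_dynamics(freqs_hz, order):
    """M^{-1}(jω) for an assumed integrator chain M(s) = 1 / s**order.

    order=1 models a velocity-based (simple) task and order=2 an
    acceleration-based (complex) task; real plants may differ.
    """
    s = 1j * 2.0 * np.pi * np.asarray(freqs_hz, dtype=float)  # s = jω, ω = 2πf
    return s ** order


def mse_freq(freqs_hz, F_est, order):
    """Frequency-domain error, eq. (4): mean of |M^{-1}(jω) - F(jω)|^2
    over the stimulus frequencies at which F was estimated."""
    F_est = np.asarray(F_est, dtype=complex)
    error = inverse_dynamics(freqs_hz, order) - F_est
    return float(np.mean(np.abs(error) ** 2))
```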

For both performance metrics, performance was averaged over the last five trials as a measure of error after learning.

For the 2 × 2 factorial design with participants without motor impairments, we tested for differences between conditions (interface; task) in the two performance metrics defined in eqs. (3) and (4) using a two-way analysis of variance (ANOVA). We tested the normality assumption using the Shapiro-Wilk test and allowed for minor violations of normality because ANOVA is robust to moderate departures from it. We hypothesized that participants would perform worse on the complex task than on the simple task because of the added abstraction (derivative). We also hypothesized that participants would perform worse with the muscle interface than with the manual interface because they would be more familiar with manual interfaces. Paired t-tests with α = 0.05 were used as post-hoc tests.
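The statistical analysis described above could be sketched as follows. This is an illustrative sketch, not the authors' analysis code. It assumes scores are stored per condition in the same participant order, treats the two-way ANOVA as between-subjects (which appears consistent with the 40 error degrees of freedom in Table 2 for 11 participants × 4 conditions = 44 observations), and applies the Shapiro-Wilk test to each condition separately, since the text does not say which values were tested. The function names are hypothetical; `scipy.stats.f`, `scipy.stats.shapiro`, and `scipy.stats.ttest_rel` are standard SciPy functions.

```python
import numpy as np
from scipy import stats


def two_way_anova_2x2(cells):
    """Balanced two-way ANOVA for a 2 x 2 design, treated as between-subjects.

    cells maps (interface, task) -> sequence of per-participant scores (e.g.
    MSE averaged over the last five trials), with equal counts in every cell.
    Returns F, p and partial eta squared for each effect.
    """
    levels_a = sorted({a for a, _ in cells})  # interface levels
    levels_b = sorted({b for _, b in cells})  # task levels
    if len(levels_a) != 2 or len(levels_b) != 2:
        raise ValueError("expected a 2 x 2 design")
    # y has shape (interface, task, participant)
    y = np.array([[np.asarray(cells[(a, b)], dtype=float) for b in levels_b]
                  for a in levels_a])
    n = y.shape[2]
    grand = y.mean()
    cell_means = y.mean(axis=2)
    mean_a = y.mean(axis=(1, 2))
    mean_b = y.mean(axis=(0, 2))
    ss_a = 2 * n * np.sum((mean_a - grand) ** 2)
    ss_b = 2 * n * np.sum((mean_b - grand) ** 2)
    ss_ab = n * np.sum((cell_means - mean_a[:, None] - mean_b[None, :] + grand) ** 2)
    ss_err = np.sum((y - cell_means[:, :, None]) ** 2)
    df_err = 4 * (n - 1)  # observations minus the four cell means
    ms_err = ss_err / df_err
    results = {}
    for name, ss in (("interface", ss_a), ("task", ss_b), ("interaction", ss_ab)):
        f_stat = ss / ms_err  # each effect has 1 degree of freedom in a 2 x 2 design
        results[name] = {
            "F": float(f_stat),
            "p": float(stats.f.sf(f_stat, 1, df_err)),
            "partial_eta2": float(ss / (ss + ss_err)),
        }
    return results


def cell_normality_p(cells):
    """Shapiro-Wilk p-value for each condition (needs at least 3 scores)."""
    return {key: float(stats.shapiro(np.asarray(v, dtype=float))[1])
            for key, v in cells.items()}


def paired_posthoc(cells, cond_1, cond_2):
    """Post-hoc paired t-test between two conditions; both cells must list
    participants in the same order."""
    return stats.ttest_rel(cells[cond_1], cells[cond_2])
```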

Consistent with previous studies [7, 23], we also hypothesized that performance would differ between muscle and manual interfaces at higher frequencies. Although previous studies compared muscle and manual interfaces only for the simple task, we hypothesized that their findings would extend to the complex task. For the complex task, we tested for differences in frequency-domain performance between the muscle and manual interfaces at each frequency using paired t-tests with α = 0.05.
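A per-frequency comparison like the one described above might look like the following sketch. It assumes per-participant frequency-domain errors are available at each stimulus frequency, stored as hypothetical 2-D arrays with one row per participant and one column per frequency. The sketch applies α = 0.05 at each frequency and does not adjust for multiple comparisons; the function name is hypothetical.

```python
import numpy as np
from scipy import stats


def per_frequency_paired_tests(err_muscle, err_manual, freqs_hz, alpha=0.05):
    """Paired t-test between interfaces at each stimulus frequency.

    err_muscle, err_manual: arrays of shape (n_participants, n_freqs), with
    rows in the same participant order. Returns a list of
    (frequency, t, p, significant) tuples.
    """
    err_muscle = np.asarray(err_muscle, dtype=float)
    err_manual = np.asarray(err_manual, dtype=float)
    # axis=0 pairs rows (participants) and tests every column (frequency).
    res = stats.ttest_rel(err_muscle, err_manual, axis=0)
    return [(f, t, p, p < alpha)
            for f, t, p in zip(freqs_hz, res.statistic, res.pvalue)]
```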

Because we had only three participants with motor impairments, comparisons between users with and without motor impairments are descriptive. This experiment was mainly intended to assess the viability of a muscle interface for users without motor impairments. We hypothesized that users with motor impairments would perform worse than users without motor impairments on the time-domain performance metric, but similarly on the frequency-domain performance metric, because motor impairments after neurologic injury usually affect the error-correction (feedback) contribution to user input rather than the user-intent (feedforward) contribution [18].

RESULTS

Study 1: Muscle versus Manual Interfaces for Simple and Complex Tasks

To determine when muscle interfaces may provide performance advantages over manual interfaces, this study compared performance for users without motor impairments using muscle and manual interfaces for simple and complex continuous tasks.

Muscle Interface Improves Performance for Complex Task

The time-domain performance metric (MSE_time) quantifies performance on continuous trajectory-tracking tasks, accounting for both user intent and error correction. The two-way ANOVA found only a main effect of task (Table 2). Contrary to our hypothesis that participants would perform better with the manual interface, participants without motor impairments performed comparably with either interface for both the simple and complex tasks (simple: t = 1.88, p = 0.09; complex: t = −0.15, p = 0.88) (Fig. 4). However, participants performed significantly worse on the complex task than on the simple task when using the manual interface (t = −5.76, p < 0.001) (Fig. 4). This suggests that participants found the added abstraction (derivative) more difficult, but only when using the manual interface.

Table 2.

Two-way ANOVA (interface × task) results for the time-domain (MSE_time) and frequency-domain (MSE_freq) measures of performance.

| Factor | F(1,40) | p | Partial η² |
|---|---|---|---|
| MSE_time | | | |
| Interface | 1.19 | 0.28 | 0.025 |
| Task | 6.55 | 0.014 | 0.14 |
| Interface × task | 0.74 | 0.39 | 0.15 |
| MSE_freq | | | |
| Interface | 4.83 | 0.034 | 0.047 |
| Task | 43.5 | <0.001 | 0.43 |
| Interface × task | 13.5 | <0.001 | 0.13 |

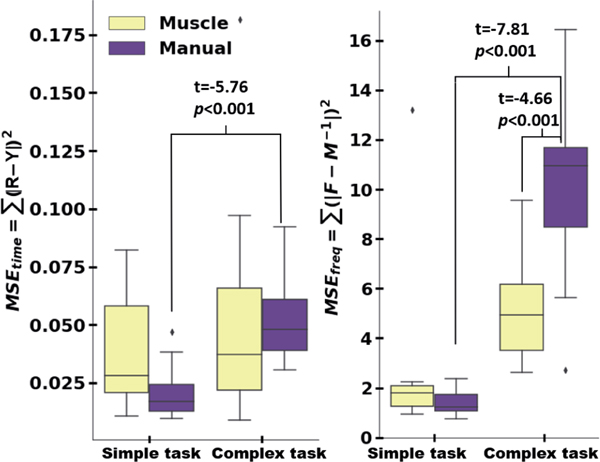

Figure 4.

Time-domain (left) and frequency-domain (right) measures of error for the simple and complex tasks (box plots show the median and 25th/75th percentiles, with bars from the minimum to the maximum value; * marks outliers). Lower values indicate better performance. Statistically significant differences are marked with their p values.

The frequency-domain performance metric (MSE_freq) quantifies performance on continuous trajectory-tracking tasks, accounting only for user intent; it ignores how well or poorly participants perform error correction. We found significant main effects of interface and task and a significant interface × task interaction for frequency-domain performance (Table 2). As expected, users developed a better feedforward controller for the simple task than for the complex task when using the manual interface (t = −7.81, p < 0.001) (Fig. 4). This suggests that, with the manual interface, participants found it easier to determine the input required to track the desired trajectory for the simple task than for the complex task. With the muscle interface, performance did not differ significantly between the simple and complex tasks (t = −2.09, p = 0.063). Surprisingly, for the complex task, users had 49% more accurate feedforward controllers with the muscle interface than with the manual interface (t = −4.66, p < 0.001). In other words, in the absence of errors, users could track reference trajectories more accurately with the muscle interface than with the manual interface, but only for the complex acceleration-based task. This suggests that interface performance is task-dependent and that feedforward controller accuracy depends on the type of interface used.

Comparing muscle and manual interfaces on user intent alone (MSE_freq) revealed differences between the two interfaces that were not apparent when error correction was also taken into account (MSE_time). While the two interfaces performed similarly on the time-domain metric, the muscle interface performed significantly better than the manual interface on the frequency-domain metric for the complex task. Accurately predicting what the user intends to do is critical for developing algorithms that assist users in performing tasks.

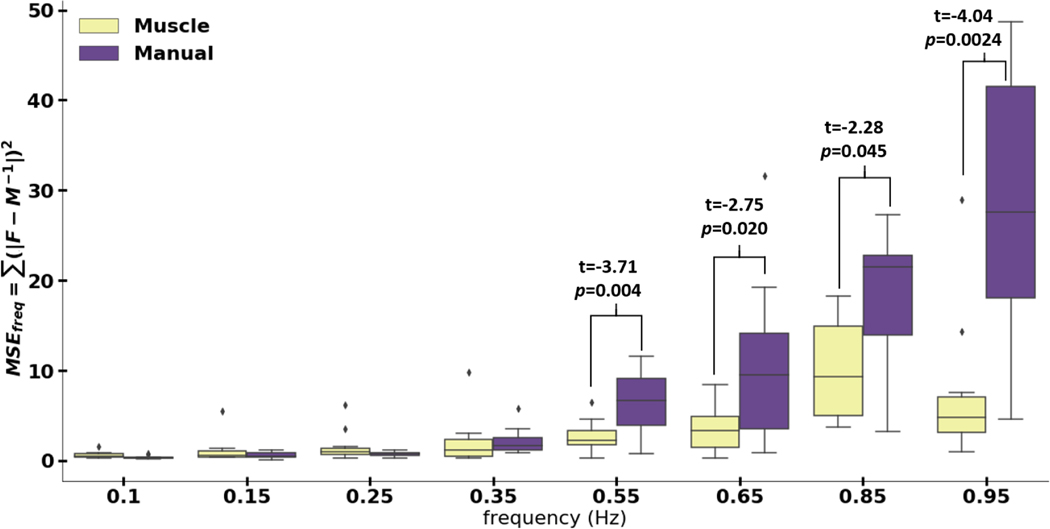

Muscle Interface Accurately Tracks High-Frequency Signals

To understand why the muscle interface outperformed the manual interface in the frequency domain for the complex task, we compared frequency-domain performance at each stimulus frequency. For the complex task, participants performed significantly better with the muscle interface than with the manual interface at frequencies above 0.35 Hz (Fig. 5). Overall, participants performed 61% better with the muscle interface than with the manual interface at frequencies above 0.35 Hz. Setting error correction aside, users' inputs tracked the faster-moving components of the reference trajectory more accurately with the muscle interface than with the manual interface. This suggests that users may find it easier to track rapidly changing trajectories, as when navigating a drone through a forest at high speed, with the muscle interface than with the manual interface.
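The text does not spell out how percentage improvements such as "61% better" are computed. One common choice, assumed here, is the relative reduction in error, 100 × (E_manual − E_muscle) / E_manual, pooled over the stimulus frequencies above a cutoff (0.35 Hz in the text). The function name, and the choice to average per-frequency errors before taking the ratio, are assumptions of this sketch.

```python
import numpy as np


def percent_improvement(err_muscle, err_manual, freqs_hz, cutoff_hz=0.35):
    """Relative error reduction (%) of the muscle vs. the manual interface,
    pooled over stimulus frequencies above `cutoff_hz`.

    err_muscle, err_manual: per-frequency errors (e.g. averaged across
    participants). Positive values mean the muscle interface had lower error.
    """
    freqs_hz = np.asarray(freqs_hz, dtype=float)
    mask = freqs_hz > cutoff_hz
    if not mask.any():
        raise ValueError("no stimulus frequencies above the cutoff")
    e_muscle = np.mean(np.asarray(err_muscle, dtype=float)[mask])
    e_manual = np.mean(np.asarray(err_manual, dtype=float)[mask])
    return float(100.0 * (e_manual - e_muscle) / e_manual)
```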

Figure 5.

Frequency-domain performance at each stimulus frequency for the complex acceleration-based task. Participants performed significantly better with the muscle (yellow) than the manual (purple) interface at higher frequencies.

Study 2: Comparing Interface Performance for Users With Motor Impairments

The first study showed that, when errors were excluded, users without motor impairments performed better with the muscle interface than with the manual interface on the complex task. In this second, preliminary study, we compared performance on the complex task with the muscle and manual interfaces for users with and without motor impairments. It was a proof-of-concept case study with three participants who had a stroke (P1, P2, P3), designed to investigate whether muscle interfaces are a viable alternative to manual interfaces for users with motor impairments.

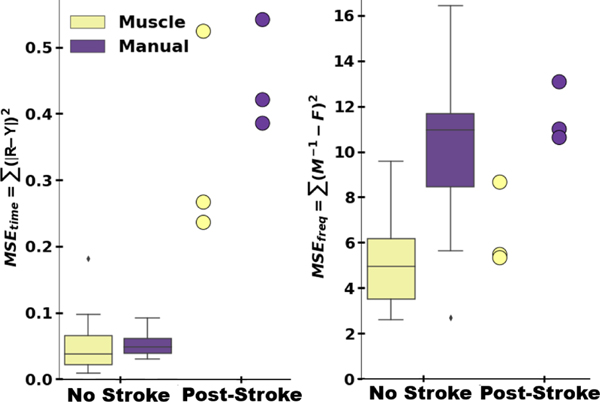

Muscle Interface Improves Performance

Participants with motor impairments successfully completed the complex trajectory-tracking task with both the muscle and the manual interface. P1 learned to use the muscle interface quickly and preferred it over the manual interface. P2 and P3 had more difficulty learning to use the muscle interface and isolating biceps and triceps activation, and they said they would have performed better with more time to practice. Despite the limited practice time, the three users with motor impairments performed 24% better on the time-domain metric and 44% better on the frequency-domain metric with the muscle interface than with the manual interface (Fig. 6).

Figure 6.

Time-domain (left) and frequency-domain (right) measures of error on the complex task for users with and without motor impairments. Lower values indicate better performance. Time-domain error for users with motor impairments is much higher than for users without motor impairments with both the muscle and the manual interface. In the frequency domain, users with motor impairments form feedforward models comparably to users without motor impairments.

Because there were only three participants with motor impairments, each one's performance is shown as a dot.

These preliminary results support our hypothesis that users with motor impairments have worse time-domain performance (MSE_time) than users without motor impairments (Fig. 6). However, frequency-domain performance (MSE_freq) for users with motor impairments was within the range observed for users without motor impairments. Because frequency-domain performance excludes contributions from error correction, whereas time-domain performance reflects both user intent and error correction, these results suggest that users with motor impairments had more difficulty with error correction, but not with forming user intent, than users without motor impairments.

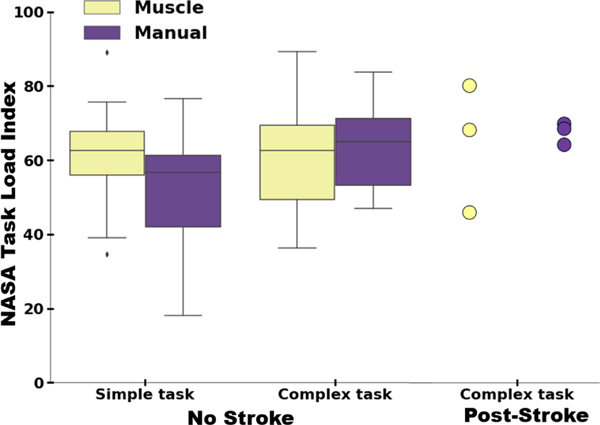

NASA Task Load Index (TLX)

Users with and without motor impairments reported similar task load across tasks and interfaces (Fig. 7). For participants without motor impairments, NASA TLX scores showed no significant main effects of interface (muscle versus manual) or task (simple versus complex) and no interface × task interaction. NASA TLX scores for users with motor impairments ranged from 45 to 80, well within the range of users without motor impairments. This suggests that users found all interfaces similarly easy to use, even though the muscle interface was new to many participants.

Figure 7.

NASA TLX results show similar subjective workload across all tasks for users with and without motor impairments.

DISCUSSION

We demonstrate for the first time that users without motor impairments perform 49% better with a muscle interface than with a manual interface on a complex (acceleration-based) continuous task. With the muscle interface, users without motor impairments also performed 61% better at frequencies above 0.35 Hz. However, these improvements appeared only in the frequency-domain performance metric, which quantifies the accuracy of intent; there was no significant difference between the two interfaces when performance was quantified for both intent and error correction. This suggests that while users form more accurate intent with the muscle interface, they perform error correction better with the manual interface.

Muscle interfaces may be particularly intuitive for acceleration-based tasks. The electrical activity that we measure as the user input for the muscle interface results from signals sent from the brain to the muscle fibers, which then produce the force that generates movement [8]. Because force (F) is related to acceleration (a) and the mass of the system (m) by F = ma, EMG activity can be mapped to acceleration without an additional layer of abstraction. This is one possible explanation for why the muscle interface was preferred and performed better on the complex acceleration-based task. Future experiments should investigate this relationship by comparing the muscle interface against a force-based manual interface instead of the position-based manual interface used in this study. If a direct mapping between user input and device output is important for performance, then muscle and force-based interfaces should perform similarly on the complex task.
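To illustrate the acceleration-based mapping discussed above, the sketch below treats the user input u (for example, the signed muscle activity, which under F = ma is proportional to acceleration) as a commanded acceleration and integrates it twice to obtain cursor position, using the exact zero-order-hold update for a double integrator at an assumed 60 Hz sample rate. The gain and function name are hypothetical, and this is not the study's task implementation.

```python
import numpy as np


def simulate_double_integrator(u, dt=1 / 60, gain=1.0):
    """Cursor position when the input sets acceleration: y'' = gain * u.

    Uses the exact discretization of a double integrator under a
    zero-order hold (u is held constant within each sample).
    """
    pos, vel = 0.0, 0.0
    positions = []
    for uk in np.asarray(u, dtype=float):
        acc = gain * uk
        pos += vel * dt + 0.5 * acc * dt ** 2  # uses velocity from the previous step
        vel += acc * dt
        positions.append(pos)
    return np.array(positions)
```

Under the same assumptions, replacing the two updates with a single one (`pos += gain * uk * dt`) gives a velocity-based mapping, with one fewer integration between input and cursor position.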

The finding that muscle interfaces performed better on complex, acceleration-based, high-frequency tasks has implications for interface design for users with and without motor impairments. Muscle interfaces may be beneficial for tasks in which the user controls the device's acceleration and must make quick maneuvers, like flying a drone through a dense forest or remotely controlling a running robot over rocky terrain. Further research that uses frequency-domain analysis from control theory to compare interfaces could help inform intuitive interface design for device control.

Our preliminary findings from three participants suggest that control theory may be useful for deriving intent for users with motor impairments. We found that the three users with motor impairments also preferred and performed better with the muscle interface than with the manual interface on both the time-domain and frequency-domain performance metrics. Previous studies compared manual and muscle interfaces only for users without motor impairments [7, 23]. Muscle interfaces provide an attractive alternative to manual interfaces that could also encourage bilateral interaction for users with motor impairments after neurologic injury: the fine coordination of multiple arm and finger muscles required to use manual interfaces is reduced to activating one or two user-chosen muscles. For users who have difficulty with error correction, such as users with motor impairments, quantifying intent from the user input while ignoring error correction is an important measure of interface performance. In the frequency domain, we demonstrated preliminary success in deriving user intent for users with motor impairments and showed that their estimated feedforward controllers were within the range of users without motor impairments. This is consistent with what is known about motor impairments after stroke: the brain injury generally affects the motor and sensory cortices that control muscle recruitment and sensory feedback, but not movement planning [18]. To the best of our knowledge, we have proposed the first performance metric for users with and without motor impairments that quantifies user intent, that is, the user input needed to control a device to follow a desired trajectory in the absence of errors.

These promising results suggest that muscle interfaces may be a viable alternative to manual interfaces, enabling bilateral and unobtrusive device interaction for users with and without motor impairments. It is especially exciting that users with motor impairments appear to perform better with the muscle interface than with the manual interface even when the performance metric includes error correction. We further expect that users with motor impairments will perform even better when artificial intelligence uses their intent to assist with error correction. In future work, we envision building a system that 1) quickly characterizes how well a specific user implements intent and error correction, 2) suggests alternative interfaces that may improve performance, and 3) employs adaptive algorithms to enhance performance. Frequency-domain methods could be used to develop user-specific algorithms that augment user input to compensate for impaired error correction. For example, when designing interfaces for games that require quick, accurate movements, we could recommend an interface that performs well in rapid, high-frequency tasks and implement an algorithm that augments the user's ability to implement intent and correct errors. Frequency-domain analysis is a powerful tool to help model and enhance user interaction.

Limitations

We only compared the muscle interface against a custom-built slider, one type of manual interface that is not as commonly used in daily life and was not designed for user comfort or performance. Additionally, users were not able to customize how the physical displacement of the slider mapped to the movement on the screen. In the future, comparing the muscle interface against commercially-available manual interfaces like touchscreens, joysticks, and mice and allowing for customization of interface sensitivity would inform when muscle interfaces are a desirable alternative to manual interfaces for complex high-frequency continuous tasks.

We also placed several restrictions on participants during this study that would not apply in everyday use and that may have affected the results. To standardize how participants interacted with the manual interface, we asked them to rest their elbow on a hard surface and to use only their biceps and triceps, rather than their wrist or fingers, to manipulate the slider. We also do not know the effect of handedness on muscle or manual interface performance. Participants may have performed better with the manual interface than with the muscle interface if they had used their dominant hand, since users generally have better coordination with their dominant hand.

Lastly, with only three participants with motor impairments, we cannot draw statistically supported conclusions. In addition, motor control ability after stroke is diverse, and even in our case study we saw large heterogeneity in user capabilities, making it difficult to draw general conclusions about users with motor impairments. Nevertheless, our preliminary results suggest that muscle interfaces are a promising alternative to manual interfaces and that modeling methods from control theory can quantify user intent separately from error correction for users with motor impairments.

CONCLUSION

This is the first paper to report on the performance of a muscle versus a manual interface for simple (velocity-based) and complex (acceleration-based) continuous tasks for users without motor impairments. We introduced techniques from control theory to quantify the performance of user intent in the absence of errors (such as unintended tremor from motor impairments). Users without motor impairments performed 49% better with the muscle interface than with the manual interface on tasks that required rapid changes to user inputs. Preliminary results suggest that users with motor impairments retained abilities similar to those of users without motor impairments to create feedforward models of a system, and also demonstrated more accurate intent with the muscle interface than with the manual interface. Muscle interfaces may provide performance advantages in developing intent for complex tasks for users with and without motor impairments. These results suggest that control theory modeling can provide a platform for quantifying device performance in the absence of errors arising from motor impairments. Such alternative interfaces should continue to be developed to support users of all abilities.

Supplementary Material

ACKNOWLEDGMENTS

We thank all of our study participants, and everyone who reviewed and provided helpful feedback on this paper. The first author was supported by a fellowship from the University of Washington Institute of Neuroengineering. This research was funded by the National Science Foundation under the CISE CRII CPS (Award # 1836819) and the National Institute of Biomedical Imaging and Bioengineering of the National Institutes of Health (Award # R01EB021935).

Contributor Information

Momona Yamagami, University of Washington, Seattle, WA

Katherine M. Steele, University of Washington, Seattle, WA

Samuel A. Burden, University of Washington, Seattle, WA

REFERENCES

- [1] Accot Johnny, Zhai Shumin, and others. 1997. Beyond Fitts’ Law: Models for Trajectory-Based HCI Tasks. In CHI, Vol. 97. Citeseer, 295–302.

- [2] Amma Christoph, Krings Thomas, Böer Jonas, and Schultz Tanja. 2015. Advancing muscle-computer interfaces with high-density electromyography. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems. ACM, 929–938.

- [3] Åström Karl Johan and Murray Richard M. 2010. Feedback systems: an introduction for scientists and engineers. Princeton University Press.

- [4] Baek Ju-Yeoul, An Jin-Hee, Choi Jong-Min, Park Kwang-Suk, and Lee Sang-Hoon. 2008. Flexible polymeric dry electrodes for the long-term monitoring of ECG. Sensors and Actuators A: Physical 143, 2 (2008), 423–429.

- [5] Basmajian John V. 1962. Muscles alive. Their functions revealed by electromyography. Academic Medicine 37, 8 (1962), 802.

- [6] Bastian Amy J. 2006. Learning to predict the future: the cerebellum adapts feedforward movement control. Current opinion in neurobiology 16, 6 (2006), 645–649.

- [7] Corbett Elaine A, Perreault Eric J, and Kuiken Todd A. 2011. Comparison of electromyography and force as interfaces for prosthetic control. Journal of rehabilitation research and development 48, 6 (2011), 629.

- [8] De Luca Carlo J. 1997. The use of surface electromyography in biomechanics. Journal of applied biomechanics 13, 2 (1997), 135–163.

- [9] Drop Frank M, Pool Daan M, Damveld Herman J, van Paassen Marinus M, and Mulder Max. 2013. Identification of the feedforward component in manual control with predictable target signals. IEEE transactions on cybernetics 43, 6 (2013), 1936–1949.

- [10] Farina Dario, Jiang Ning, Rehbaum Hubertus, Holobar Aleš, Graimann Bernhard, Dietl Hans, and Aszmann Oskar C. 2014. The extraction of neural information from the surface EMG for the control of upper-limb prostheses: emerging avenues and challenges. IEEE Transactions on Neural Systems and Rehabilitation Engineering 22, 4 (2014), 797–809.

- [11] Feldner Heather A, Howell Darrin, Kelly Valerie E, McCoy Sarah Westcott, and Steele Katherine M. 2019. “Look, Your Muscles Are Firing!”: A Qualitative Study of Clinician Perspectives on the Use of Surface Electromyography in Neurorehabilitation. Archives of physical medicine and rehabilitation 100, 4 (2019), 663–675.

- [12] Findlater Leah, Jansen Alex, Shinohara Kristen, Dixon Morgan, Kamb Peter, Rakita Joshua, and Wobbrock Jacob O. 2010. Enhanced area cursors: reducing fine pointing demands for people with motor impairments. In Proceedings of the 23rd annual ACM symposium on User interface software and technology. ACM, 153–162.

- [13] Findlater Leah, Moffatt Karyn, Froehlich Jon E, Malu Meethu, and Zhang Joan. 2017. Comparing touchscreen and mouse input performance by people with and without upper body motor impairments. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems. ACM, 6056–6061.

- [14] Fougner Anders, Stavdahl Øyvind, Kyberd Peter J, Losier Yves G, and Parker Philip A. 2012. Control of upper limb prostheses: Terminology and proportional myoelectric control – A review. IEEE Transactions on neural systems and rehabilitation engineering 20, 5 (2012), 663–677.

- [15] Gajos Krzysztof Z, Weld Daniel S, and Wobbrock Jacob O 2010. Automatically generating personalized user interfaces with Supple. Artificial Intelligence 174, 12–13 (2010), 910–950.

- [16] Gandolfo Francesca, Mussa-Ivaldi FA, and Bizzi Emilio. 1996. Motor learning by field approximation. Proceedings of the National Academy of Sciences 93, 9 (1996), 3843–3846.

- [17] Guerreiro Tiago, Nicolau Hugo, Jorge Joaquim, and Gonçalves Daniel. 2010. Towards accessible touch interfaces. In Proceedings of the 12th international ACM SIGACCESS conference on Computers and accessibility. ACM, 19–26.

- [18] Hallett Mark. 2001. Plasticity of the human motor cortex and recovery from stroke. Brain research reviews 36, 2–3 (2001), 169–174.

- [19] Haque Faizan, Nancel Mathieu, and Vogel Daniel. 2015. Myopoint: Pointing and clicking using forearm mounted electromyography and inertial motion sensors. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems. ACM, 3653–3656.

- [20] Hart Sandra G and Staveland Lowell E. 1988. Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research. In Advances in psychology. Vol. 52. Elsevier, 139–183.

- [21] Hermens Hermie J, Freriks Bart, Merletti Roberto, Stegeman Dick, Blok Joleen, Rau Günter, Disselhorst-Klug Cathy, and Hägg Göran. 1999. European recommendations for surface electromyography. Roessingh research and development 8, 2 (1999), 13–54.

- [22] Huang Donny, Zhang Xiaoyi, Saponas T Scott, Fogarty James, and Gollakota Shyamnath. 2015. Leveraging dual-observable input for fine-grained thumb interaction using forearm EMG. In Proceedings of the 28th Annual ACM Symposium on User Interface Software & Technology. ACM, 523–528.

- [23] Lobo-Prat Joan, Keemink Arvid QL, Stienen Arno HA, Schouten Alfred C, Veltink Peter H, and Koopman Bart FJM. 2014. Evaluation of EMG, force and joystick as control interfaces for active arm supports. Journal of neuroengineering and rehabilitation 11, 1 (2014), 68.

- [24] MacKenzie I Scott 1992. Fitts’ law as a research and design tool in human-computer interaction. Human-computer interaction 7, 1 (1992), 91–139.

- [25] Malu Meethu, Chundury Pramod, and Findlater Leah. 2018. Exploring accessible smartwatch interactions for people with upper body motor impairments. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems. ACM, 488.

- [26] Malu Meethu and Findlater Leah. 2015. Personalized, wearable control of a head-mounted display for users with upper body motor impairments. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems. ACM, 221–230.

- [27] McRuer Duane T and Jex Henry R. 1967. A review of quasi-linear pilot models. IEEE transactions on human factors in electronics 3 (1967), 231–249.

- [28] Mott Martez E, Vatavu Radu-Daniel, Kane Shaun K, and Wobbrock Jacob O. 2016. Smart touch: Improving touch accuracy for people with motor impairments with template matching. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems. ACM, 1934–1946.

- [29] Periyaswamy Thamizhisai and Balasubramanian Mahendran. 2019. Ambulatory cardiac bio-signals: From mirage to clinical reality through a decade of progress. International journal of medical informatics 130 (2019), 103928–103928.

- [30] Posada-Quintero Hugo F, Rood Ryan T, Burnham Ken, Pennace John, and Chon Ki H. 2016. Assessment of carbon/salt/adhesive electrodes for surface electromyography measurements. IEEE journal of translational engineering in health and medicine 4 (2016), 1–9.

- [31] Roth Eatai, Howell Darrin, Beckwith Cydney, and Burden Samuel A. 2017. Toward experimental validation of a model for human sensorimotor learning and control in teleoperation. In Micro-and Nanotechnology Sensors, Systems, and Applications IX, Vol. 10194. International Society for Optics and Photonics, 101941X.

- [32] Saponas T Scott, Tan Desney S, Morris Dan, and Balakrishnan Ravin. 2008. Demonstrating the feasibility of using forearm electromyography for muscle-computer interfaces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. ACM, 515–524.

- [33] Saponas T Scott, Tan Desney S, Morris Dan, Balakrishnan Ravin, Turner Jim, and Landay James A. 2009. Enabling always-available input with muscle-computer interfaces. In Proceedings of the 22nd annual ACM symposium on User interface software and technology. ACM, 167–176.

- [34] Scott RN and Parker PA. 1988. Myoelectric prostheses: state of the art. Journal of medical engineering & technology 12, 4 (1988), 143–151.

- [35] Shuman Benjamin R, Goudriaan Marije, Desloovere Kaat, Schwartz Michael H, and Steele Katherine M. 2019. Muscle synergies demonstrate only minimal changes after treatment in cerebral palsy. Journal of neuroengineering and rehabilitation 16, 1 (2019), 46.

- [36] Trewin Shari and Pain Helen. 1999. Keyboard and mouse errors due to motor disabilities. International Journal of Human-Computer Studies 50, 2 (1999), 109–144.

- [37] Trewin Shari, Swart Cal, and Pettick Donna. 2013. Physical accessibility of touchscreen smartphones. In Proceedings of the 15th International ACM SIGACCESS Conference on Computers and Accessibility. ACM, 19.

- [38] Ungurean Ovidiu-Ciprian, Vatavu Radu-Daniel, Leiva Luis A, and Plamondon Réjean. 2018. Gesture input for users with motor impairments on touchscreens: Empirical results based on the kinematic theory. In Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems. ACM, LBW537.

- [39] Vega-González Arturo and Granat Malcolm H. 2005. Continuous monitoring of upper-limb activity in a free-living environment. Archives of physical medicine and rehabilitation 86, 3 (2005), 541–548.

- [40] Wobbrock Jacob O, Kane Shaun K, Gajos Krzysztof Z, Harada Susumu, and Froehlich Jon. 2011. Ability-based design: Concept, principles and examples. ACM Transactions on Accessible Computing (TACCESS) 3, 3 (2011), 9.

- [41] Yamagami Momona, Howell Darrin, Roth Eatai, and Burden Samuel A. 2019. Contributions of feedforward and feedback control in a manual trajectory-tracking task. IFAC-PapersOnLine 51, 34 (2019), 61–66.

- [42] Yamagami Momona, Peters Keshia, Milovanovic Ivana, Kuang Irene, Yang Zeyu, Lu Nanshu, and Steele Katherine. 2018. Assessment of dry epidermal electrodes for long-term electromyography measurements. Sensors 18, 4 (2018), 1269.

- [43] Yang Kaiwen, Nicolini Luke, Kuang Irene, Lu Nanshu, and Djurdjanovic Dragan. Long-Term Modeling and Monitoring of Neuromusculoskeletal System Performance Using Tattoo-Like EMG Sensors. (????).

- [44] Yu Bo, Gillespie R Brent, Freudenberg James S, and Cook Jeffrey A. 2014. Human control strategies in pursuit tracking with a disturbance input. In 53rd IEEE Conference on Decision and Control. IEEE, 3795–3800.

- [45] Zhang Xingye, Wang Shaoqian, Hoagg Jesse B, and Seigler T Michael. 2017. The roles of feedback and feedforward as humans learn to control unknown dynamic systems. IEEE transactions on cybernetics 48, 2 (2017), 543–555.

- [46] Zhong Yu, Weber Astrid, Burkhardt Casey, Weaver Phil, and Bigham Jeffrey P. 2015. Enhancing Android accessibility for users with hand tremor by reducing fine pointing and steady tapping. In Proceedings of the 12th Web for All Conference. ACM, 29.
