Abstract

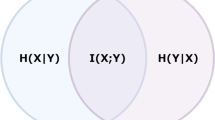

Feature selection is an important approach to dimensionality reduction in text classification. Redundant information in the selected features is difficult to handle, so we propose a new, simple feature selection method that can effectively filter out redundant features. First, to measure the relationship between two words, we introduce the definitions of word-frequency-based relevance and correlative redundancy. Next, an optimal feature selection (OFS) method is used to obtain a feature subset FS1. Finally, to improve execution speed, the redundant features in FS1 are filtered using a predetermined threshold, and the filtered features are stored in linked lists. Experiments are carried out on three datasets (WebKB, 20-Newsgroups, and Reuters-21578) using support vector machine and naïve Bayes classifiers. The results show that the classification accuracy of the proposed method is generally higher than that of typical traditional methods (information gain, improved Gini index, and improved comprehensively measured feature selection) and of the OFS methods. Moreover, the proposed method runs faster than typical mutual information-based methods (improved and normalized mutual information-based feature selection, and multilabel feature selection based on maximum dependency and minimum redundancy) while maintaining classification accuracy. Statistical results validate the effectiveness of the proposed method in handling redundant information in text classification.
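
The abstract describes the redundancy-filtering step only at a high level, so what follows is a minimal Python sketch under stated assumptions, not the authors' implementation. It assumes FS1 is already ranked by some OFS-style score (OFS itself is not implemented here). It also replaces the paper's word-frequency-based relevance and correlative redundancy, whose definitions are not given in this abstract, with a hypothetical stand-in: the share of a candidate word's total term frequency that overlaps, document by document, with an already kept word. The names `Node`, `redundancy`, `filter_redundant`, and `to_list` are illustrative. A candidate whose redundancy with any kept word exceeds the threshold is dropped from the output and appended to that word's linked list.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

TermFreqs = List[Dict[str, int]]  # one {word: term frequency} map per document


@dataclass
class Node:
    """Singly linked list node holding one filtered (redundant) word."""
    word: str
    next: Optional["Node"] = None


def redundancy(tf: TermFreqs, kept: str, cand: str) -> float:
    """HYPOTHETICAL stand-in for correlative redundancy (not the paper's formula).

    Returns sum_d min(tf(kept, d), tf(cand, d)) / sum_d tf(cand, d),
    i.e. the fraction of cand's frequency that co-occurs with `kept`.
    """
    overlap = sum(min(d.get(kept, 0), d.get(cand, 0)) for d in tf)
    total = sum(d.get(cand, 0) for d in tf)
    return overlap / total if total else 0.0


def filter_redundant(
    tf: TermFreqs, fs1: List[str], threshold: float
) -> Tuple[List[str], Dict[str, Optional[Node]]]:
    """Greedy pass over FS1, assumed to be sorted by score, best first.

    A candidate redundant with some already kept word (score > threshold)
    is appended to that word's linked list instead of being kept.
    Returns the kept words in rank order and the head of each word's list.
    """
    kept: List[str] = []
    heads: Dict[str, Optional[Node]] = {}
    tails: Dict[str, Optional[Node]] = {}
    for cand in fs1:
        # First kept word (in rank order) that makes `cand` redundant, if any.
        owner = next((k for k in kept if redundancy(tf, k, cand) > threshold), None)
        if owner is None:
            kept.append(cand)
            heads[cand] = None
            tails[cand] = None
            continue
        node = Node(cand)
        tail = tails[owner]
        if tail is None:
            heads[owner] = node  # first redundant word linked to `owner`
        else:
            tail.next = node     # append at the end of the existing list
        tails[owner] = node
    return kept, heads


def to_list(head: Optional[Node]) -> List[str]:
    """Walk a linked list and collect its words in insertion order."""
    out: List[str] = []
    while head is not None:
        out.append(head.word)
        head = head.next
    return out
```

For example, with per-document term frequencies `[{"ball": 3, "game": 2, "goal": 1}, {"ball": 1, "game": 1, "vote": 2}, {"vote": 3, "party": 2}]`, ranked FS1 `["ball", "vote", "game", "party", "goal"]`, and threshold 0.6, the sketch keeps `["ball", "vote"]`, links `game` and `goal` under `ball`, and links `party` under `vote`. The abstract does not say how the original method uses its linked lists to gain speed, so here the lists only record which kept word absorbed each dropped one.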

References

Alatas B, 2010. Chaotic harmony search algorithms. Appl Math Comput, 216(9):2687–2699. https://doi.org/10.1016/j.amc.2010.03.114

Apte C, Damerau F, Weiss S, 1999. Text mining with decision trees and decision rules. Conf on Automated Learning and Discovery, p.169–198.

Battiti R, 1994. Using mutual information for selecting features in supervised neural net learning. IEEE Trans Neur Netw, 5(4):537–550. https://doi.org/10.1109/72.298224

Breiman L, Friedman JH, Olshen RA, et al., 1984. Classification and Regression Trees. Wadsworth International Group, Monterey, USA.

Caruana G, Li MZ, Liu Y, 2013. An ontology enhanced parallel SVM for scalable spam filter training. Neurocomputing, 108:45–57. https://doi.org/10.1016/j.neucom.2012.12.001

Cevenini G, Barbini E, Massai MR, et al., 2013. A naïve Bayes classifier for planning transfusion requirements in heart surgery. J Eval Clin Pract, 19(1):25–29. https://doi.org/10.1111/j.1365-2753.2011.01762.x

Chang CC, Lin CJ, 2011. LIBSVM: a library for support vector machines. ACM Trans Intell Syst Technol, 2(3), Article 27. https://doi.org/10.1145/1961189.1961199

Chen JN, Huang HK, Tian SF, et al., 2009. Feature selection for text classification with naïve Bayes. Exp Syst Appl, 36(3):5432–5435. https://doi.org/10.1016/j.eswa.2008.06.054

Dallachiesa M, Palpanas T, Ilyas IF, 2014. Top-k nearest neighbor search in uncertain data series. Proc VLDB Endowm, 8(1):13–24. https://doi.org/10.14778/2735461.2735463

De Souza AF, Pedroni F, Oliveira E, et al., 2009. Automated multi-label text categorization with VG-RAM weightless neural networks. Neurocomputing, 72(10-12):2209–2217. https://doi.org/10.1016/j.neucom.2008.06.028

Drucker H, Wu DH, Vapnik VN, 1999. Support vector machines for spam categorization. IEEE Trans Neur Netw, 10(5):1048–1054. https://doi.org/10.1109/72.788645

Elghazel H, Aussem A, Gharroudi O, et al., 2016. Ensemble multi-label text categorization based on rotation forest and latent semantic indexing. Exp Syst Appl, 57:1–11. https://doi.org/10.1016/j.eswa.2016.03.041

Estevez PA, Tesmer M, Perez CA, et al., 2009. Normalized mutual information feature selection. IEEE Trans Neur Netw, 20(2):189–201. https://doi.org/10.1109/TNN.2008.2005601

Geem ZW, Kim JH, Loganathan GV, 2001. A new heuristic optimization algorithm: harmony search. Simulation, 76(2): 60–68. https://doi.org/10.1177/003754970107600201

Han M, Ren WJ, 2015. Global mutual information-based feature selection approach using single-objective and multi-objective optimization. Neurocomputing, 168:47–54. https://doi.org/10.1016/j.neucom.2015.06.016

Hoque N, Bhattacharyya DK, Kalita JK, 2014. MIFS-ND: a mutual information-based feature selection method. Exp Syst Appl, 41(14):6371–6385. https://doi.org/10.1016/j.eswa.2014.04.019

Jing LP, Ng MK, Huang JZ, 2010. Knowledge-based vector space model for text clustering. Knowl Inform Syst, 25(1):35–55. https://doi.org/10.1007/s10115-009-0256-5

Joachims T, 1998. Text categorization with support vector machines: learning with many relevant features. Proc 10th European Conf on Machine Learning, p.137–142. https://doi.org/10.1007/BFb0026683

Kruskal JB, Wish M, 1978. Multidimensional Scaling. Sage, London, UK.

Lin YJ, Hu QH, Liu JH, et al., 2015. Multi-label feature selection based on max-dependency and min-redundancy. Neurocomputing, 168:92–103. https://doi.org/10.1016/j.neucom.2015.06.010

Liu H, Yu L, 2005. Toward integrating feature selection algorithms for classification and clustering. IEEE Trans Knowl Data Eng, 17(4):491–502. https://doi.org/10.1109/TKDE.2005.66

McCallum A, Nigam K, 1998. A comparison of event models for naive Bayes text classification. AAAI-98 Workshop on Learning for Text Categorization, p.41–48.

Napoletano P, Colace F, De Santo M, et al., 2012. Text classification using a graph of terms. 6th Int Conf on Complex, Intelligent and Software Intensive Systems, p.1030–1035. https://doi.org/10.1109/CISIS.2012.183

Peng HC, Long FH, Ding C, 2005. Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans Patt Anal Mach Intell, 27(8):1226–1238. https://doi.org/10.1109/TPAMI.2005.159

Porter MF, 1997. An algorithm for suffix stripping. In: Jones KS, Willett P (Eds.), Readings in Information Retrieval. Morgan Kaufmann Publishers Inc., San Francisco, USA, p.313–316.

Schneider KM, 2003. A comparison of event models for naive Bayes anti-spam e-mail filtering. Proc 10th Conf on European Chapter of the Association for Computational Linguistics, p.307–314. https://doi.org/10.3115/1067807.1067848

Sebastiani F, 2002. Machine learning in automated text categorization. ACM Comput Surv, 34(1):1–47. https://doi.org/10.1145/505282.505283

Shang WQ, Huang HK, Zhu HB, et al., 2007. A novel feature selection algorithm for text categorization. Exp Syst Appl, 33(1):1–5. https://doi.org/10.1016/j.eswa.2006.04.001

Taheri SM, Hesamian G, 2013. A generalization of the Wilcoxon signed-rank test and its applications. Stat Paper, 54(2):457–470. https://doi.org/10.1007/s00362-012-0443-4

Tenenhaus M, Vinzi VE, Chatelin YM, et al., 2005. PLS path modeling. Comput Stat Data Anal, 48(1):159–205. https://doi.org/10.1016/j.csda.2004.03.005

Uguz H, 2011. A two-stage feature selection method for text categorization by using information gain, principal component analysis and genetic algorithm. Knowl-Based Syst, 24(7):1024–1032. https://doi.org/10.1016/j.knosys.2011.04.014

Wang DQ, Zhang H, Liu R, et al., 2012. Feature selection based on term frequency and T-test for text categorization. Proc 21st ACM Int Conf on Information and Knowledge Management, p.1482–1486. https://doi.org/10.1145/2396761.2398457

Wang YW, Liu YN, Feng LZ, et al., 2014. Novel feature selection method based on harmony search for email classification. Knowl-Based Syst, 73:311–323. https://doi.org/10.1016/j.knosys.2014.10.013

Wilcoxon F, 1945. Individual comparisons by ranking methods. Biom Bull, 1(6):80–83. https://doi.org/10.2307/3001968

Yan J, Liu N, Zhang B, et al., 2005. OCFS: optimal orthogonal centroid feature selection for text categorization. Int ACM SIGIR Conf on Research and Development in Information Retrieval, p.122–129. https://doi.org/10.1145/1076034.1076058

Yang JM, Liu YN, Zhu XD, et al., 2012. A new feature selection based on comprehensive measurement both in inter-category and intra-category for text categorization. Inform Process Manag, 48(4):741–754. https://doi.org/10.1016/j.ipm.2011.12.005

Yang JM, Qu ZY, Liu ZY, 2014. Improved feature-selection method considering the imbalance problem in text categorization. Sci World J, 2014:625342. https://doi.org/10.1155/2014/625342

Yang YM, Pedersen JO, 1997. A comparative study on feature selection in text categorization. Proc 14th Int Conf on Machine Learning, p.412–420.

Zhang W, Yoshida T, Tang XJ, 2011. A comparative study of TF*IDF, LSI and multi-words for text classification. Exp Syst Appl, 38(3):2758–2765. https://doi.org/10.1016/j.eswa.2010.08.066

Zhang W, Clark RAJ, Wang YY, et al., 2016. Unsupervised language identification based on latent Dirichlet Allocation. Comput Speech Lang, 39:47–66. https://doi.org/10.1016/j.csl.2016.02.001

Zhang YS, Zhang ZG, 2012. Feature subset selection with cumulate conditional mutual information minimization. Exp Syst Appl, 39(5):6078–6088. https://doi.org/10.1016/j.eswa.2011.12.003

Additional information

Project supported by the Joint Funds of the National Natural Science Foundation of China (No. U1509214), the Beijing Natural Science Foundation, China (No. 4174105), the Key Projects of National Bureau of Statistics of China (No. 2017LZ05), and the Discipline Construction Foundation of the Central University of Finance and Economics, China (No. 2016XX02).

About this article

Cite this article

Wang, Yw., Feng, Lz. A new feature selection method for handling redundant information in text classification. Frontiers Inf Technol Electronic Eng 19, 221–234 (2018). https://doi.org/10.1631/FITEE.1601761

DOI: https://doi.org/10.1631/FITEE.1601761

Key words

- Feature selection

- Dimensionality reduction

- Text classification

- Redundant features

- Support vector machine

- Naïve Bayes

- Mutual information