Abstract

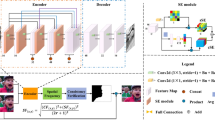

We propose a multi-focus image fusion method built on a fully convolutional network for focus detection (FD-FCN). To obtain more precise focus detection maps, we add skip layers to the network so that both detailed and abstract visual information is available when FD-FCN generates the maps. A new training dataset for the proposed network is constructed from the CIFAR-10 dataset. The image fusion algorithm using FD-FCN consists of three steps: focus maps are obtained with FD-FCN, a decision map is generated by applying morphological processing to the focus maps, and the images are fused according to the decision map. We carry out several sets of experiments, and both subjective and objective assessments demonstrate the superiority of the proposed fusion method over state-of-the-art algorithms.
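
The three steps named in the abstract can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the trained FD-FCN is replaced by a hand-crafted Laplacian focus measure, the morphological process is assumed to be a 3×3 opening followed by a closing, and all function names (`focus_map`, `decision_map`, `fuse`) are hypothetical. Images are plain nested lists of grayscale values.

```python
# Hypothetical stand-in pipeline. The focus measure is a hand-crafted
# Laplacian, NOT the trained FD-FCN described in the abstract.

def focus_map(img):
    """Step 1 (stand-in): per-pixel focus score from a 4-neighbour Laplacian."""
    h, w = len(img), len(img[0])

    def px(y, x):
        # Replicate border pixels so every position has four neighbours.
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    return [[abs(4 * px(y, x) - px(y - 1, x) - px(y + 1, x)
                 - px(y, x - 1) - px(y, x + 1))
             for x in range(w)] for y in range(h)]


def _morph(mask, pick):
    """3x3 erosion (pick=min) or dilation (pick=max), replicated borders."""
    h, w = len(mask), len(mask[0])
    return [[pick(mask[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                  for dy in (-1, 0, 1) for dx in (-1, 0, 1))
             for x in range(w)] for y in range(h)]


def decision_map(focus_a, focus_b):
    """Step 2: binary map (1 = take source A), cleaned morphologically."""
    raw = [[1 if a >= b else 0 for a, b in zip(row_a, row_b)]
           for row_a, row_b in zip(focus_a, focus_b)]
    opened = _morph(_morph(raw, min), max)    # opening: drop isolated 1s
    return _morph(_morph(opened, max), min)   # closing: fill isolated 0s


def fuse(img_a, img_b, decision):
    """Step 3: copy each pixel from the source the decision map selects."""
    return [[a if d else b for a, b, d in zip(row_a, row_b, row_d)]
            for row_a, row_b, row_d in zip(img_a, img_b, decision)]
```

Given two registered source images `img_a` and `img_b` of equal size, `fuse(img_a, img_b, decision_map(focus_map(img_a), focus_map(img_b)))` returns the fused image. A hard per-pixel selection is only one possible fusion rule; it is used here because it keeps the role of the decision map explicit.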

Author information

Contributions

Xiao-yu DONG designed the research. Rui GUO and Xuan-jing SHEN processed the data. Rui GUO drafted the manuscript. Xiao-li ZHANG helped organize the manuscript. Rui GUO and Xiao-li ZHANG revised and finalized the paper.

Ethics declarations

Rui GUO, Xuan-jing SHEN, Xiao-yu DONG, and Xiao-li ZHANG declare that they have no conflict of interest.

Additional information

Project supported by the National Natural Science Foundation of China (No. 61801190), the Natural Science Foundation of Jilin Province, China (No. 20180101055JC), and the Outstanding Young Talent Foundation of Jilin Province, China (No. 20180520029JH).

About this article

Cite this article

Guo, R., Shen, Xj., Dong, Xy. et al. Multi-focus image fusion based on fully convolutional networks. Front Inform Technol Electron Eng 21, 1019–1033 (2020). https://doi.org/10.1631/FITEE.1900336

DOI: https://doi.org/10.1631/FITEE.1900336