Abstract

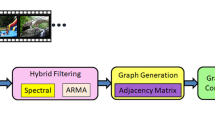

Video summarization has established itself as a fundamental technique for generating compact and concise videos, which eases the management and browsing of large-scale video data. Existing methods fail to fully consider the local and global relations among video frames, which degrades summarization performance. To address this problem, we propose a graph convolutional attention network (GCAN) for video summarization. GCAN consists of two parts, embedding learning and context fusion, where embedding learning includes a temporal branch and a graph branch. In particular, GCAN uses dilated temporal convolution to model local cues and temporal self-attention to exploit global cues among video frames. It also learns graph embeddings via a multi-layer graph convolutional network to reveal the intrinsic structure of frame samples. The context fusion part combines the output streams of the temporal branch and the graph branch to create a context-aware representation of frames, on which importance scores are evaluated to select representative frames for the video summary. Experiments on two benchmark databases, SumMe and TVSum, show that the proposed GCAN approach outperforms several state-of-the-art alternatives in three evaluation settings.
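The pipeline described in the abstract can be illustrated with a minimal sketch in plain Python. It is not the authors' implementation. The abstract does not say how the frame graph is built, how the two temporal outputs are merged, or how the fused representation is scored, so the cosine-threshold adjacency, the element-wise sum, and the concatenation followed by a sigmoid scorer are all assumptions, as are every function name and the toy weights below.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def dilated_temporal_conv(frames, kernel, dilation):
    """Local cues: weighted sum of frames spaced `dilation` steps apart (zero padded)."""
    n, dim = len(frames), len(frames[0])
    half = len(kernel) // 2
    out = []
    for t in range(n):
        acc = [0.0] * dim
        for k, w in enumerate(kernel):
            s = t + (k - half) * dilation
            if 0 <= s < n:
                acc = [a + w * f for a, f in zip(acc, frames[s])]
        out.append(acc)
    return out

def temporal_self_attention(frames):
    """Global cues: scaled dot-product attention of every frame over all frames."""
    dim = len(frames[0])
    transposed = [list(col) for col in zip(*frames)]
    scores = matmul(frames, transposed)
    weights = [softmax([v / math.sqrt(dim) for v in row]) for row in scores]
    return matmul(weights, frames)

def cosine_adjacency(frames, threshold):
    """ASSUMPTION: connect two frames when their cosine similarity >= threshold."""
    n = len(frames)
    norms = [math.sqrt(sum(v * v for v in f)) or 1.0 for f in frames]
    adj = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                dot = sum(a * b for a, b in zip(frames[i], frames[j]))
                adj[i][j] = 1.0 if dot / (norms[i] * norms[j]) >= threshold else 0.0
    return adj

def gcn_layer(adj, feats, weight):
    """One graph convolution: ReLU(D^-1/2 (A + I) D^-1/2 H W), as in Kipf and Welling."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]  # always >= 1 because of the self-loop
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    hidden = matmul(matmul(norm, feats), weight)
    return [[max(0.0, v) for v in row] for row in hidden]

def importance_scores(temporal, graph, w):
    """ASSUMPTION: fuse by concatenation, then a linear layer and a sigmoid."""
    scores = []
    for t_row, g_row in zip(temporal, graph):
        fused = t_row + g_row  # list concatenation of the two branch outputs
        z = sum(x * wi for x, wi in zip(fused, w))
        scores.append(1.0 / (1.0 + math.exp(-z)))
    return scores

# Toy input: four frames with 2-D features (a real system would use CNN features).
frames = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
local = dilated_temporal_conv(frames, kernel=[0.25, 0.5, 0.25], dilation=2)
global_ = temporal_self_attention(frames)
# ASSUMPTION: the temporal branch sums its local and global outputs.
temporal = [[a + b for a, b in zip(l, g)] for l, g in zip(local, global_)]
adj = cosine_adjacency(frames, threshold=0.8)
identity = [[1.0, 0.0], [0.0, 1.0]]  # placeholder for learned GCN weights
graph = gcn_layer(adj, gcn_layer(adj, frames, identity), identity)
scores = importance_scores(temporal, graph, w=[0.5, 0.5, 0.5, 0.5])
print(scores)
```

In a trained model the kernel, the graph convolution weights, and the scoring vector would be learned, and the highest-scoring frames would be kept for the summary. The fixed values here only make the data flow through the two branches and the fusion step visible.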

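Abstract (translated from the Chinese version)

Video summarization has become a fundamental technique for generating condensed, concise videos, and it facilitates managing and browsing large-scale video data. Existing methods do not fully consider the local and global relations among video frames, which degrades summarization performance. This paper proposes a video summarization method based on a graph convolutional attention network (GCAN). GCAN consists of two parts, embedding learning and context fusion, where embedding learning includes a temporal branch and a graph branch. Specifically, GCAN uses dilated temporal convolution to model local cues and temporal self-attention to exploit the global cues of video frames. It also uses a multi-layer graph convolutional network to learn graph embeddings that reflect the intrinsic structure of the frame samples. The context fusion part merges the output streams of the temporal branch and the graph branch into a contextual representation of the video frames, then computes their importance scores, which are used to select representative frames and generate the video summary. Experimental results on two benchmark datasets, SumMe and TVSum, show that GCAN achieves better performance than several other state-of-the-art methods in three different evaluation settings.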

Author information

Contributions

Ping LI designed the research. Ping LI and Chao TANG processed the data and drafted the manuscript. Xianghua XU helped organize the manuscript. Ping LI revised and finalized the paper.

Ethics declarations

Ping LI, Chao TANG, and Xianghua XU declare that they have no conflict of interest.

Additional information

Project supported by the National Natural Science Foundation of China (Nos. 61872122 and 61502131), the Zhejiang Provincial Natural Science Foundation of China (No. LY18F020015), the Open Project Program of the State Key Lab of CAD&CG, China (No. 1802), and the Zhejiang Provincial Key Research and Development Program, China (No. 2020C01067).

About this article

Cite this article

Li, P., Tang, C. & Xu, X. Video summarization with a graph convolutional attention network. Front Inform Technol Electron Eng 22, 902–913 (2021). https://doi.org/10.1631/FITEE.2000429

DOI: https://doi.org/10.1631/FITEE.2000429

Key words

- Temporal learning
- Self-attention mechanism
- Graph convolutional network
- Context fusion
- Video summarization