Abstract

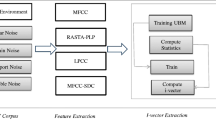

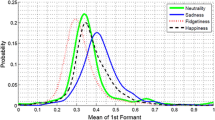

The shapes of a speaker's vocal organs change with the speaker's emotional state. As a result, the acoustic space of short-time features under emotion deviates from the neutral acoustic space, which degrades speaker recognition performance. Features that deviate greatly from the neutral acoustic space are regarded as mismatched features, and they harm speaker recognition systems. Because emotion variation deforms the features of different phonemes in different ways, it is reasonable to build a finer-grained model that detects mismatched features within each phoneme. Since phoneme recognition is itself difficult, however, we propose three kinds of acoustic class recognition to replace it: phoneme classes, a Gaussian mixture model (GMM) tokenizer, and a probabilistic GMM tokenizer. We then propose feature pruning and feature regulation methods that process the mismatched features to improve speaker recognition performance. For feature regulation, the transformation matrix that regulates the mismatched features is trained to maximize the between-class distance while minimizing the within-class distance. Experiments on the Mandarin affective speech corpus (MASC) show that, compared with the baseline GMM-UBM (universal background model) algorithm, our feature pruning and feature regulation methods increase the identification rate (IR) by 3.64% and 6.77%, respectively. When applied to the state-of-the-art i-vector algorithm, our methods yield corresponding IR increases of 2.09% and 3.32%.
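Two building blocks named in the abstract can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation. It assumes a diagonal-covariance GMM that has already been trained (here its parameters are simply hand-specified), reads the "GMM tokenizer" as labeling each frame with its most likely mixture component and the "probabilistic GMM tokenizer" as keeping the component posteriors instead of a hard label, and uses classic Fisher linear discriminant analysis as one standard way to train a transformation matrix that maximizes between-class scatter relative to within-class scatter. The function names `gmm_tokenize` and `fisher_transform`, the ridge parameter `reg`, the speaker labels, and all data are hypothetical.

```python
import numpy as np


def gmm_tokenize(frames, weights, means, variances):
    """Assign each frame (T, D) to an acoustic class of a diagonal GMM.

    weights: (K,); means and variances: (K, D). Returns hard labels (T,)
    and per-frame component posteriors (T, K), i.e. the "soft" tokens.
    """
    # Log-density of every frame under every diagonal Gaussian: shape (T, K).
    diff = frames[:, None, :] - means[None, :, :]
    log_dens = -0.5 * (
        np.sum(diff ** 2 / variances[None, :, :], axis=2)
        + np.sum(np.log(2.0 * np.pi * variances), axis=1)[None, :]
    )
    log_joint = np.log(weights)[None, :] + log_dens
    # Log-sum-exp normalisation turns joint scores into posteriors.
    peak = log_joint.max(axis=1, keepdims=True)
    log_norm = peak + np.log(np.exp(log_joint - peak).sum(axis=1, keepdims=True))
    posteriors = np.exp(log_joint - log_norm)
    return np.argmax(log_joint, axis=1), posteriors


def fisher_transform(features, labels, n_dims, reg=1e-6):
    """Fisher LDA: projection (D, n_dims) maximising between-class scatter
    relative to within-class scatter. `reg` is an assumed ridge term."""
    d = features.shape[1]
    mean_all = features.mean(axis=0)
    s_w = np.zeros((d, d))
    s_b = np.zeros((d, d))
    for c in np.unique(labels):
        x_c = features[labels == c]
        mu_c = x_c.mean(axis=0)
        centred = x_c - mu_c
        s_w += centred.T @ centred              # within-class scatter
        gap = (mu_c - mean_all)[:, None]
        s_b += len(x_c) * (gap @ gap.T)         # between-class scatter
    s_w += reg * np.eye(d)                      # keeps S_w invertible
    # Whiten S_w so the generalised problem S_b w = lambda S_w w becomes symmetric.
    evals_w, evecs_w = np.linalg.eigh(s_w)
    whiten = evecs_w / np.sqrt(evals_w)
    evals_b, evecs_b = np.linalg.eigh(whiten.T @ s_b @ whiten)
    order = np.argsort(evals_b)[::-1][:n_dims]  # largest discriminant ratios first
    return whiten @ evecs_b[:, order]


# Tokenizer demo with a hand-specified two-component GMM (illustrative values).
labels, post = gmm_tokenize(
    np.array([[0.1, -0.2], [4.8, 5.1]]),
    weights=np.array([0.5, 0.5]),
    means=np.array([[0.0, 0.0], [5.0, 5.0]]),
    variances=np.ones((2, 2)),
)

# Regulation demo: two synthetic "speakers" that differ only along dimension 0,
# with large shared variation along dimension 1.
rng = np.random.default_rng(0)
feats = np.vstack([
    rng.normal([0.0, 0.0], [0.5, 3.0], size=(200, 2)),
    rng.normal([2.0, 0.0], [0.5, 3.0], size=(200, 2)),
])
spk = np.array([0] * 200 + [1] * 200)
W = fisher_transform(feats, spk, n_dims=1)
proj = feats @ W  # projected (regulated) features, shape (400, 1)
```

Whitening the within-class scatter before the eigendecomposition keeps the problem symmetric, so `numpy.linalg.eigh` can be used instead of a general non-symmetric solver. In this toy setup the learned direction favors dimension 0, where the two hypothetical speakers actually differ, and suppresses the large shared variation along dimension 1. How the paper defines its classes and applies the matrix only to the detected mismatched features is not reproduced here.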


Additional information

Supported by the National Basic Research Program (973) of China (No. 2013CB329504), the National Natural Science Foundation of China (No. 60970080), and the National High-Tech R&D Program (863) of China (No. 2006AA01Z136).

About this article

Cite this article

Chen, L., Yang, Yc. & Wu, Zh. Mismatched feature detection with finer granularity for emotional speaker recognition. J. Zhejiang Univ. - Sci. C 15, 903–916 (2014). https://doi.org/10.1631/jzus.C1400002

DOI: https://doi.org/10.1631/jzus.C1400002