1. Introduction

With the continuous progress of computing technology over the past decade, deep learning has advanced rapidly and is now used in many fields, such as imaging and natural language processing. Scholars have begun to apply deep learning to solve complex partial differential equations (PDEs), including PDEs with high order derivatives [1], high-dimensional PDEs [2,3] and subdiffusion problems with noisy data [4]. Based on deep learning, Raissi et al. [5] proposed a novel algorithm called the physics informed neural network (PINN), which integrates the physical information described by PDEs into a neural network and has performed well in solving forward and inverse PDE problems. In recent years, the PINN algorithm has attracted extensive attention. To solve forward and inverse problems of integro-differential equations (IDEs), Yuan et al. [6] proposed the auxiliary physics informed neural network (A-PINN). Lin and Chen [7] designed a two-stage physics informed neural network for approximating localized wave solutions, which introduces the measurement of conserved quantities in stage two. Yang et al. [8] developed Bayesian physics informed neural networks (B-PINNs), which take a Bayesian neural network as the prior estimator and Hamiltonian Monte Carlo or variational inference as the posterior estimator. Scholars have also presented other variants of PINN, such as RPINNs [9] and hp-VPINNs [10]. PINN has also achieved good performance in solving physical problems, including high-speed flows [11] and heat transfer problems [12]. In addition to integer-order differential equations, authors have studied the application of PINN to fractional differential equations such as fractional advection-diffusion equations (see Pang et al. [13] for fractional physics informed neural networks (fPINNs)), high dimensional fractional PDEs (see Guo et al. [14] for Monte Carlo physics-informed neural networks (MC-PINNs)) and fractional water wave models (see Liu et al. [15] for the time difference PINN).

In recent decades, fractional differential equations (FDEs) have been studied in many fields, such as image denoising [16] and physics [17,18,19]. They have attracted wide attention because they can describe complex physical phenomena more accurately. As an indispensable part of fractional problems, distributed-order differential equations are difficult to solve due to the complexity of distributed-order operators. To solve the time multi-term and distributed-order fractional sub-diffusion equations, Gao et al. [20] proposed a second-order numerical difference formula. Jian et al. [21] derived a fast second-order implicit difference scheme for time distributed-order and Riesz space fractional diffusion-wave equations and analyzed its unconditional stability and second-order convergence. Li et al. [22] applied the mid-point quadrature rule with the finite volume method to approximate the distributed-order equation. For the nonlinear distributed-order sub-diffusion model [23], the distributed-order derivative and the spatial direction were approximated by the FBN-θ formula with a second-order composite numerical integral formula and the $H^1$-Galerkin mixed finite element method, respectively. In [24], Guo et al. adopted the Legendre-Galerkin spectral method for solving 2D distributed-order space-time reaction-diffusion equations. For the two-dimensional Riesz space distributed-order equation, Zhang et al. [25] used Gauss quadrature to calculate the distributed-order derivative and applied an alternating direction implicit (ADI) Galerkin-Legendre spectral scheme to approximate the spatial direction. For the distributed-order fourth-order sub-diffusion equation, Ran and Zhang [26] developed new compact difference schemes and proved their stability and convergence. In [27,28], the authors developed spectral methods for distributed-order time fractional fourth-order PDEs.

As is well known, distributed-order fractional PDEs can be regarded as the limiting case of multi-term fractional PDEs [29]. Moreover, Diethelm and Ford [30] observed that small changes in the order of a fractional PDE lead to only slight changes in the final solution, which gives initial support to the employed numerical integration method. In view of this, we develop the FBN-θ method [31,32] with a second-order composite numerical integral formula, combined with a multi-output neural network, for solving 1D and 2D nonlinear time distributed-order models. Following the idea of combining a single output neural network with the discrete scheme of fractional models [13], we also use a single output neural network combined with a time discrete scheme to solve the nonlinear time distributed-order models. However, the accuracy of the predicted solution calculated by the single output neural network scheme is low and the training process takes a lot of time. Therefore, we introduce a multi-output neural network to obtain the numerical solution of the time discrete scheme. Compared with the single output neural network scheme, the proposed multi-output neural network scheme has the following two main advantages:

● Saving computing time. The multi-output neural network scheme reduces the sampling domain of the collocation points from the spatiotemporal domain to the spatial domain, which decreases the size of the training dataset and thus reduces the training time.

● Improving the accuracy of the predicted solution. Due to the discrete scheme of the distributed-order derivative, the n-th output item of the multi-output neural network is constrained by the previous n−1 output items.

The remainder of this article is organized as follows: In Section 2, we describe the components of a neural network and how to construct one. In Section 3, we give the lemmas used to approximate the distributed-order derivative and the process of building the loss function. In Section 4, we provide some numerical results to confirm the capability of our proposed method. Finally, we draw some conclusions in Section 5.

2. Neural network

To meet different objectives in various fields, scholars have developed many different types of neural networks, such as the feed-forward neural network (FNN) [6], the recurrent neural network (RNN) [33] and the convolutional neural network (CNN) [34]. The FNN considered in this article can effectively solve most PDEs. The input layer, hidden layers and output layer are the three indispensable components of an FNN, which can be given, respectively, by

$W_k \in \mathbb{R}^{\lambda_k \times \lambda_{k-1}}$ and $b_k \in \mathbb{R}^{\lambda_k}$ represent the weight matrix and the bias vector in the $k$th layer, respectively. We define $\delta = \{W_k, b_k\}_{1 \le k \le K}$, which is the set of trainable parameters of the FNN. $\lambda_k$ represents the number of neurons in the $k$th layer. $\sigma$ is a nonlinear activation function. In this article, the hyperbolic tangent function [3,6] is selected as the activation function. Many other functions can be considered as activation functions, such as the rectified linear unit (ReLU) $\sigma(x) = \max\{x, 0\}$ [4] and the logistic sigmoid $\sigma(x) = \frac{1}{1+e^{-x}}$ [35].
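To make the roles of $W_k$, $b_k$, $\lambda_k$ and $\sigma$ concrete, the following is a minimal NumPy sketch of an FNN forward pass with the hyperbolic tangent activation. The function names `init_params` and `fnn_forward`, the Xavier-style initialization and the linear (activation-free) output layer are assumptions made for illustration; they are not taken from the article.

```python
import numpy as np

def init_params(layer_sizes, seed=0):
    """Build the trainable set {W_k, b_k}, k = 1..K.

    layer_sizes = [lambda_0, lambda_1, ..., lambda_K] lists the neurons per layer.
    The Xavier-style scaling below is an assumption, not the article's choice.
    """
    rng = np.random.default_rng(seed)
    params = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        scale = np.sqrt(2.0 / (n_in + n_out))
        W = rng.normal(0.0, scale, size=(n_out, n_in))  # W_k has shape lambda_k x lambda_{k-1}
        b = np.zeros(n_out)                             # b_k has length lambda_k
        params.append((W, b))
    return params

def fnn_forward(params, x):
    """Evaluate the network on a batch x of shape (batch, lambda_0)."""
    h = x
    for W, b in params[:-1]:
        h = np.tanh(h @ W.T + b)   # hidden layers: affine map followed by sigma = tanh
    W, b = params[-1]
    return h @ W.T + b             # output layer assumed linear

# One spatial input, two hidden layers of 40 neurons, 10 outputs (illustrative sizes).
layer_sizes = [1, 40, 40, 10]
params = init_params(layer_sizes)
x = np.linspace(0.0, 1.0, 5).reshape(-1, 1)
out = fnn_forward(params, x)
print(out.shape)
```

With more than one neuron in the output layer, the same forward pass yields several outputs per input point, which is the form a multi-output network takes.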

3. Methodology

3.1. Problem setup

In this article, we consider a nonlinear distributed-order model with the following general form:

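where $\Omega \subset \mathbb{R}^d$ ($d \le 2$) and $J = (0, T]$. $\mathcal{N}[\cdot]$ is a nonlinear differential operator. $\mathcal{D}_t^{\omega} u$ represents the distributed-order derivative and has the following definition:

$$\mathcal{D}_t^{\omega} u(x,t) = \int_0^1 \omega(\alpha)\, {}_0^C D_t^{\alpha} u(x,t)\, d\alpha, \tag{3.2}$$

where $\omega(\alpha) \ge 0$, $\int_0^1 \omega(\alpha)\, d\alpha = c_0 > 0$ and ${}_0^C D_t^{\alpha} u(x,t)$ is the Caputo fractional derivative expressed by

$${}_0^C D_t^{\alpha} u(x,t) = \frac{1}{\Gamma(1-\alpha)} \int_0^t \frac{\partial u(x,s)}{\partial s} \frac{ds}{(t-s)^{\alpha}}, \quad 0 < \alpha < 1. \tag{3.3}$$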

The specific boundary condition is determined by the practical problem.

3.2. Some lemmas

For simplicity, choosing a mesh size $\Delta\alpha = \frac{1}{2I}$, we denote the nodes on the interval $[0,1]$ by $\alpha_i = i\Delta\alpha$ for $i = 0, 1, 2, \cdots, 2I$. The time interval $[0,T]$ is divided into a uniform mesh with grid points $t_n = n\Delta t$ ($n = 0, 1, 2, \cdots, N$), where $\Delta t = T/N$ is the time step size. We denote $v^n \approx u^n = u(x,t_n)$, $u_t^{n+\frac{1}{2}} = \frac{u^{n+1}-u^n}{\Delta t} + O(\Delta t^2)$ and $u^{n+\frac{1}{2}} := \frac{u^{n+1}+u^n}{2}$, where $v^n$ is the approximate solution of the time discrete scheme. The following lemmas are introduced to construct the numerical discrete formula of (3.1):

Lemma 3.1. (See [23]) Supposing $\omega(\alpha) \in C^2[0,1]$, we can get

where

Lemma 3.2. From [23,31,32], the discrete formula of the Caputo fractional derivative (3.3) can be obtained by

where

The parameters $\kappa_i^{(\alpha)}$ ($i = 0, 1, \cdots, n+1$), which are the coefficients of FBN-θ ($\theta \in [-\frac{1}{2}, 1]$), can be given by

where

and

Lemma 3.3. (See [23]) The distributed-order term $\mathcal{D}_t^{\omega} u$ at $t = t_{n+\frac{1}{2}}$ can be calculated by the following formula:

where

and
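The meshes introduced at the start of this subsection, together with a second-order composite quadrature over the order variable $\alpha$, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the composite trapezoidal rule is used as one example of a second-order composite formula (the specific weights of Lemma 3.1 are not reproduced here), and the helper names `alpha_mesh`, `time_mesh` and `composite_trapezoid` are hypothetical. The weight $\omega(\alpha) = \Gamma(3-\alpha)$ and the values $\Delta\alpha = \frac{1}{500}$, $T = \frac{1}{2}$, $N = 10$ mirror the numerical examples of Section 4.

```python
import math
import numpy as np

def alpha_mesh(I):
    """Nodes alpha_i = i * d_alpha, i = 0..2I, with d_alpha = 1 / (2I)."""
    d_alpha = 1.0 / (2 * I)
    return d_alpha * np.arange(2 * I + 1), d_alpha

def time_mesh(T, N):
    """Grid points t_n = n * dt, n = 0..N, with dt = T / N."""
    dt = T / N
    return dt * np.arange(N + 1), dt

def composite_trapezoid(values, h):
    """Second-order composite rule (assumed here): half weights at both end nodes."""
    weights = np.full(values.shape, h)
    weights[0] = weights[-1] = h / 2.0
    return float(np.sum(weights * values))

alpha, d_alpha = alpha_mesh(I=250)     # d_alpha = 1/500
t, dt = time_mesh(T=0.5, N=10)         # dt = 0.05

# c_0 = integral of omega(alpha) over [0, 1] for omega(alpha) = Gamma(3 - alpha).
omega = np.array([math.gamma(3.0 - a) for a in alpha])
c0 = composite_trapezoid(omega, d_alpha)

# Sanity check on a known integral: the integral of alpha^2 over [0, 1] is 1/3.
err = abs(composite_trapezoid(alpha**2, d_alpha) - 1.0 / 3.0)
print(c0, err)
```

Halving `d_alpha` should reduce `err` by roughly a factor of four, which is the behavior expected from a second-order rule.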

3.3. The loss function

Based on the above lemmas, the discrete scheme of the distributed-order model (3.1) at $t = t_{n+\frac{1}{2}}$ ($n = 0, 1, 2, \cdots, N-1$) can be expressed by the following equality:

Then we can obtain the system of equations as follows:

The system of Eq (3.15) can be rewritten as the following matrix form:

where the symbols ρ0, v(x), f(x) and N(v(x)) are vectors, which are given, respectively, by

The symbol M is an N×N matrix that has the following definition:

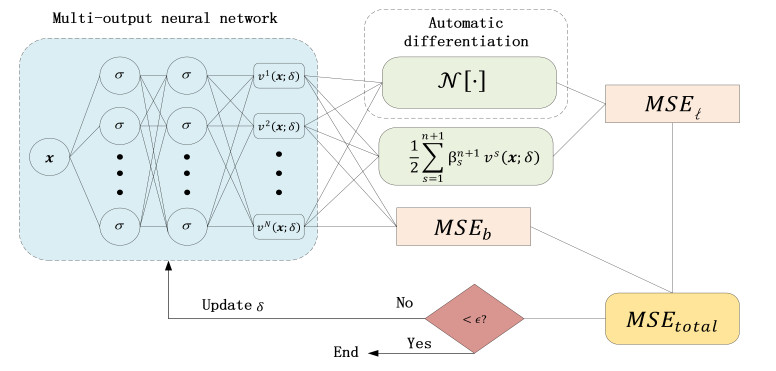

Now, we introduce a multi-output neural network $v(x;\delta) = [v^1(x;\delta), v^2(x;\delta), \cdots, v^N(x;\delta)]$ into Eq (3.16), which takes $x$ as an input and is used to approximate the time discrete solutions $v(x) = [v^1(x), v^2(x), \cdots, v^N(x)]$. This results in a multi-output PINN $\ell(x) = [\ell^1(x), \ell^2(x), \cdots, \ell^N(x)]$:

where $\ell^{n+1}(x)$ denotes the residual error of the discrete scheme (3.14), which is given by

The loss function is constructed in the form of mean square error. Combined with the boundary condition loss, the total loss function can be expressed by the following formula:

where

and boundary condition loss

Here, $\{x_\ell^i\}_{i=1}^{N_x}$ corresponds to the collocation points on the space domain $\Omega$ and $\{x_b^i\}_{i=1}^{N_b}$ denotes the boundary training data. The schematic diagram of using the multi-output neural network scheme to solve nonlinear time distributed-order models is shown in Figure 1.
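As a rough illustration of how a mean-square-error loss of this kind is assembled, the sketch below combines residual values at the collocation points with boundary mismatches at the boundary points. It assumes the residuals of all $N$ outputs are already available as arrays; the function name `mse_total`, the array layout and the exact normalization (sum over outputs, mean over points) are assumptions and may differ from the formulas used in the article.

```python
import numpy as np

def mse_total(residuals, boundary_mismatch):
    """Total loss = residual MSE + boundary MSE (normalization assumed).

    residuals:         shape (N_x, N), residual of each of the N outputs
                       at each of the N_x collocation points.
    boundary_mismatch: shape (N_b, N), network output minus boundary data
                       at each of the N_b boundary points.
    """
    mse_residual = np.mean(np.sum(residuals**2, axis=1))
    mse_boundary = np.mean(np.sum(boundary_mismatch**2, axis=1))
    return mse_residual + mse_boundary

# Toy data: 4 collocation points, 2 boundary points, N = 3 outputs.
residuals = np.ones((4, 3))
boundary_mismatch = np.zeros((2, 3))
loss = mse_total(residuals, boundary_mismatch)
print(loss)
```

In a real training loop the two arrays would be produced by the network and differentiated automatically; only the aggregation step is shown here.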

4. Algorithm implementation

In this section, we consider two nonlinear time distributed-order equations to verify the feasibility and effectiveness of our proposed method. The performance is evaluated by calculating the relative $L^2$ error between the predicted and exact solutions. The definition of the relative $L^2$ error is given by

Table 1 indicates which optimizer is selected for each example to minimize the loss function.

We use Python to code our algorithms, and all code is run on a Lenovo laptop with an AMD R7-6800H CPU @ 3.20 GHz and 16.0 GB RAM.
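The accuracy metric used throughout this section can be sketched as follows. The snippet assumes the common discrete form of the relative $L^2$ error, namely the Euclidean norm of the difference between exact and predicted values divided by the Euclidean norm of the exact values, evaluated over all test points; the function name `relative_l2_error` is hypothetical.

```python
import numpy as np

def relative_l2_error(u_exact, v_pred):
    """Discrete relative L2 error (assumed form): ||u - v||_2 / ||u||_2."""
    u_exact = np.asarray(u_exact, dtype=float)
    v_pred = np.asarray(v_pred, dtype=float)
    return np.linalg.norm(u_exact - v_pred) / np.linalg.norm(u_exact)

# Toy check: a uniform 1% perturbation of the exact values.
u = np.array([3.0, 4.0])
v = 1.01 * u
print(relative_l2_error(u, v))
```

Because the error is normalized by the size of the exact solution, values obtained for different examples can be compared directly.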

4.1. The distributed-order sub-diffusion model

Here, we solve the following distributed-order sub-diffusion model:

with boundary condition

and initial condition

where the nonlinear term $G(u) = u^2$. The symbol $\Delta$ is the Laplace operator. Based on Eqs (3.14)–(3.21), the loss function $\mathrm{MSE}_{total}$ can be obtained by

where

Example 1.

For this example, we set the space domain $\Omega = (0,1)$ and the time interval $J = (0, \frac{1}{2}]$. The training set consists of the boundary points and $N_x = 200$ collocation points randomly selected in the space domain $\Omega$. Choosing $\omega(\alpha) = \Gamma(3-\alpha)$ and the source term

then we can obtain the exact solution $u(x,t) = t^2 \sin(2\pi x)$.
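The training data for this example can be prepared along the following lines. The sketch samples the 200 collocation points uniformly at random in $(0,1)$, takes the two end points as boundary points, and evaluates the exact solution on the time levels $t_1, \cdots, t_N$ so that it can later be compared with the $N$ network outputs. The random seed, the choice $N = 10$ and all variable names are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)          # seed chosen only for reproducibility

N_x, N, T = 200, 10, 0.5                # collocation points, time levels, final time
x_col = rng.uniform(0.0, 1.0, size=N_x) # random collocation points in Omega = (0, 1)
x_bnd = np.array([0.0, 1.0])            # boundary points of Omega
t = np.linspace(0.0, T, N + 1)          # t_n = n * dt, n = 0..N

def exact_solution(x, t):
    """Exact solution u(x, t) = t^2 sin(2 pi x) of Example 1."""
    return t**2 * np.sin(2.0 * np.pi * x)

# Reference values at the collocation points for t_1..t_N: shape (N_x, N),
# matching the N outputs of a multi-output network evaluated at x_col.
u_ref = exact_solution(x_col[:, None], t[None, 1:])
print(u_ref.shape)
```

Only spatial points are sampled here; the time variable enters through the fixed grid, which is what keeps the training set small in the multi-output setting.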

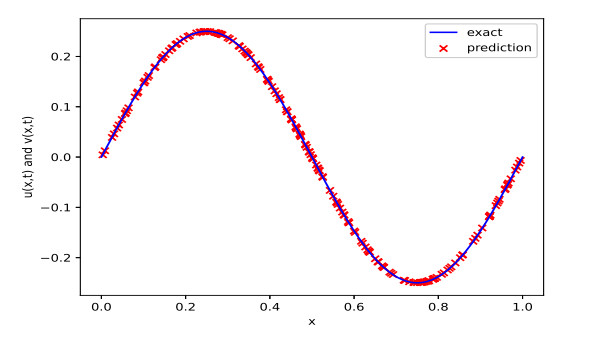

To evaluate the performance of our proposed method, the exact solution and the solution predicted by a multi-output neural network consisting of 6 hidden layers with 40 neurons in each hidden layer are shown in Figure 2.

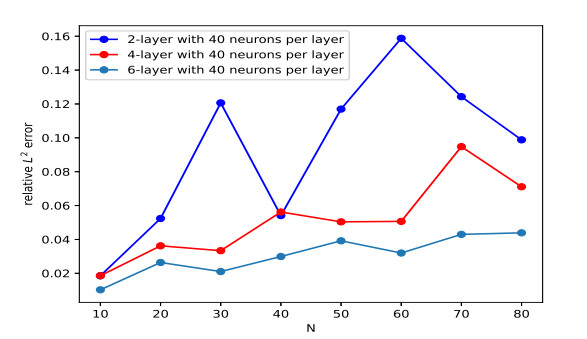

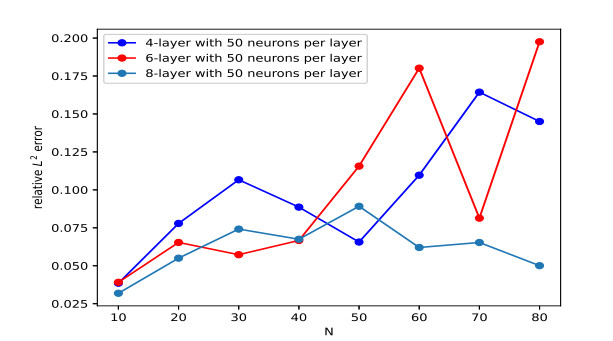

The influence of different network structures on our proposed method for solving Example 1 is presented in Table 2. The accuracy of the predicted solution varies with the network architecture and, with some fluctuation, improves as the number of hidden layers increases. For three network architectures, Figure 3 shows the performance of the proposed method as the time step size gradually decreases. As the number of grid points in the time interval increases, the relative $L^2$ error generally trends upward for a fixed network architecture, while increasing the number of hidden layers effectively improves the accuracy of the predicted solution.

Numerical results calculated by the single output neural network and multi-output neural network schemes are presented in Table 3, where we select 200 collocation points in the given spatial domain by random sampling and set $N = 10$, $\theta = 1$ and $\Delta\alpha = \frac{1}{500}$. The predicted solution calculated by the multi-output neural network scheme is more accurate than that of the single output neural network scheme, and replacing the single output neural network with the multi-output neural network saves a lot of computing time.

Example 2.

In this numerical example, considering the space domain $\Omega = (0,1) \times (0,1)$, the time interval $J = (0, \frac{1}{2}]$, $\omega(\alpha) = \Gamma(3-\alpha)$ and the exact solution $u(x,y,t) = t^2 \sin(2\pi x) \sin(2\pi y)$, the source term can be given by

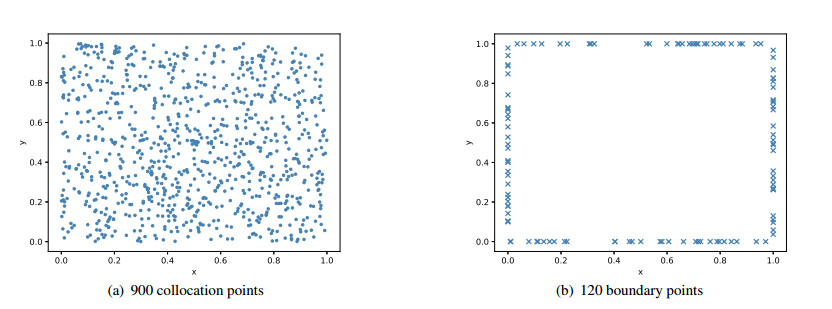

The training dataset is shown in Figure 5 and the collocation and boundary points are selected by random sampling method.

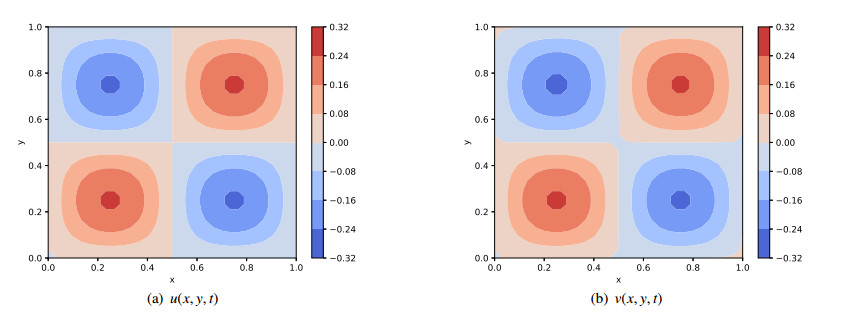

To better illustrate the behavior of the predicted solution, Figure 4 portrays the contour plot of the exact and predicted solutions, where the training set consists of 961 collocation points and 124 boundary points selected by the equidistant uniform sampling method and the network architecture consists of 12 hidden layers with 60 neurons per layer.

Table 4 shows the impact of the depth and width of the network on the accuracy of the predicted solution. In Figure 6, we present how the relative $L^2$ error changes with the number of grid points $N$. From Table 4 and Figure 6, we observe that increasing the number of hidden layers or neurons generally reduces the relative $L^2$ error.

The results in Table 5 compare the performance of the single output neural network and multi-output neural network schemes, where we select 40 boundary points and 200 collocation points in the given spatial domain by random sampling and set $N = 10$, $\theta = 1$ and $\Delta\alpha = \frac{1}{500}$. One can see that using the multi-output neural network effectively improves the precision and reduces the computing time.

4.2. The distributed-order fourth-order sub-diffusion model

Further, we consider the following distributed-order fourth-order sub-diffusion model:

with boundary condition

and initial condition

where the nonlinear term $G(u) = u^2$.

Similarly, the corresponding loss function $\mathrm{MSE}_{total}$ can be calculated by

where

Example 3.

Here, we define the space-time domain $\Omega \times J = (0,1) \times (0, \frac{1}{2}]$. Considering $\omega(\alpha) = \Gamma(3-\alpha)$ and the source term

the exact solution can be given by $u(x,t) = t^2 \sin(\pi x)$. Similar to Example 1, we also randomly sample 200 collocation points in the space domain $\Omega$.

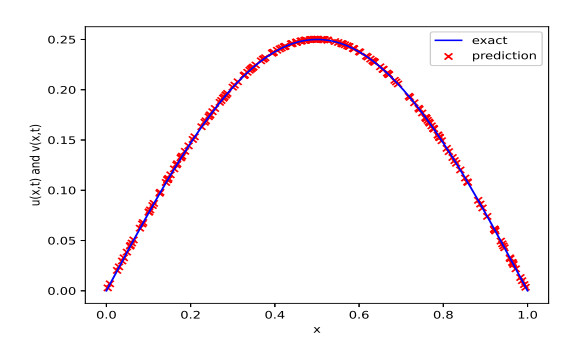

To illustrate the capability of our proposed method, Figure 7 shows the predicted and exact solutions as functions of the space point $x$, where the parameters are set as $N = 20$, $\Delta\alpha = \frac{1}{500}$, $\theta = 1$ and the network consists of 6 hidden layers with 50 neurons per layer. Figure 8 portrays the relative $L^2$ error for three different network architectures. Table 6 shows the impact of expanding the depth or width of the network on the accuracy of the predicted solutions. Based on Figure 8 and Table 6, increasing the number of hidden layers plays a positive role in improving the accuracy of the predicted solutions.

The relative $L^2$ error and CPU time obtained by the multi-output neural network and single output neural network schemes are presented in Table 7, where we select 200 collocation points in the given spatial domain by random sampling and set $N = 10$, $\theta = 1$ and $\Delta\alpha = \frac{1}{500}$. The error of the proposed multi-output neural network scheme is smaller than that of the single output neural network scheme. For this 1D system, the multi-output neural network scheme is more efficient than the single output neural network scheme.

Example 4.

Now we take the space domain $\Omega = (0,1) \times (0,1)$ and the time interval $J = (0, \frac{1}{2}]$. Let $\omega(\alpha) = \Gamma(3-\alpha)$ and the exact solution $u(x,y,t) = t^2 \sin(\pi x) \sin(\pi y)$. Then we arrive at the source term

Here, we apply the training data set shown in Figure 5.

In order to more intuitively demonstrate the feasibility of our proposed method for solving this 2D system, Figure 9 shows the contour plot of u and |u−v|, where the training set consists of 900 collocation points and 120 boundary points selected by the equidistant uniform sampling method and the network is composed of 6 hidden layers with 20 neurons per layer.

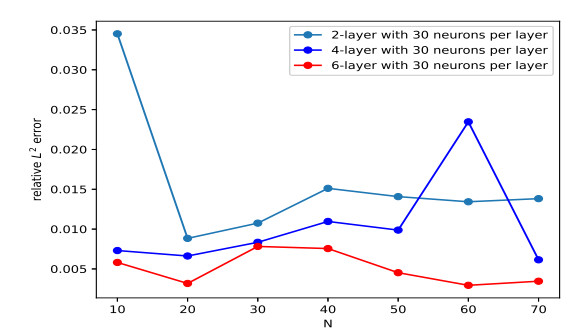

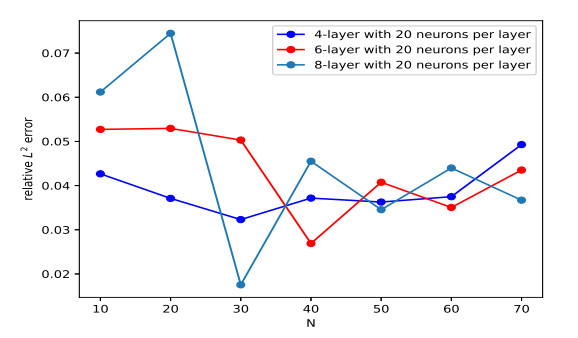

From the behavior of the relative $L^2$ error in Figure 10, one can see that, for a fixed network, the accuracy of the predicted solutions first increases and then gradually decreases. This is because the approximation ability of the neural network saturates as the number of grid points $N$ increases. Table 8 shows the relative $L^2$ error calculated by different network architectures. On the whole, the relative $L^2$ error slightly decreases as the depth of the network expands, while it first slightly decreases and then increases as the width of the network expands. To show the precision and efficiency of the multi-output neural network scheme for this 2D system, the relative $L^2$ error and CPU time obtained by the multi-output neural network and single output neural network schemes are shown in Table 9, where we select 40 boundary points and 200 collocation points in the given spatial domain by random sampling and set $N = 10$, $\theta = 1$ and $\Delta\alpha = \frac{1}{500}$. The results illustrate that the multi-output neural network scheme is more accurate and efficient than the single output neural network scheme.

5. Conclusions

In this article, a multi-output physics informed neural network combined with the Crank-Nicolson scheme, including the FBN-θ method and the composite numerical integral formula, was constructed to solve 1D and 2D nonlinear time distributed-order models. The calculation process is described in detail. Numerical experiments are provided to demonstrate the effectiveness and feasibility of our algorithm. Compared with the results calculated by a single output neural network combined with the FBN-θ method and the Crank-Nicolson scheme, the proposed multi-output neural network scheme is more efficient and accurate. Moreover, some numerical methods, such as the finite difference or finite element method, need to linearize the nonlinear term, which gives rise to extra costs, whereas PINN requires no linearization. Further work will investigate the application of the proposed methodology to high-dimensional problems and practical problems [36,37,38,39,40].

Use of AI tools declaration

The authors declare that they have not used Artificial Intelligence (AI) tools in the creation of this article.

Acknowledgements

The authors would like to thank the editor and all the anonymous referees for their valuable comments, which greatly improved the presentation of the article. This work is supported by the National Natural Science Foundation of China (12061053, 12161063), Natural Science Foundation of Inner Mongolia (2021MS01018), Young innovative talents project of Grassland Talents Project, Program for Innovative Research Team in Universities of Inner Mongolia Autonomous Region (NMGIRT2413, NMGIRT2207), and 2023 Postgraduate Research Innovation Project of Inner Mongolia (S20231026Z).

Conflict of interest

The authors declare that they have no conflict of interest.
